2023

|

| Kangsoo Kim; Nahal Norouzi; Dongsik Jo; Gerd Bruder; Greg Welch The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality Book Chapter In: Nee, Andrew Yeh Ching; Ong, Soh Khim (Ed.): Springer Handbook of Augmented Reality, pp. 797–829, Springer International Publishing, Cham, 2023, ISBN: 978-3-030-67822-7. @inbook{Kim2023aa,

title = {The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality},

author = {Kangsoo Kim and Nahal Norouzi and Dongsik Jo and Gerd Bruder and Greg Welch},

editor = {Andrew Yeh Ching Nee and Soh Khim Ong},

url = {https://doi.org/10.1007/978-3-030-67822-7_32},

doi = {10.1007/978-3-030-67822-7_32},

isbn = {978-3-030-67822-7},

year = {2023},

date = {2023-01-01},

urldate = {2023-01-01},

booktitle = {Springer Handbook of Augmented Reality},

pages = {797--829},

publisher = {Springer International Publishing},

address = {Cham},

abstract = {Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, augmented/mixed reality (AR/MR), which combines virtual content with the real environment, is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced artificial intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives.},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

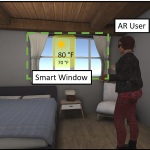

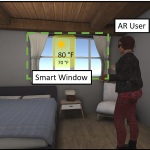

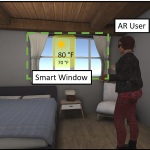

|

2022

|

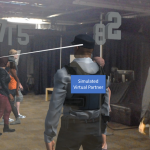

| Zubin Choudhary; Austin Erickson; Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Greg Welch Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues Journal Article In: ACM Transactions on Applied Perception, 2022. @article{Choudhary2022,

title = {Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues},

author = {Zubin Choudhary and Austin Erickson and Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://drive.google.com/file/d/1jdxwLchDH0RPouVENoSx8iSOyDmJhqKb/view?usp=sharing},

year = {2022},

date = {2022-11-02},

urldate = {2022-11-02},

journal = {ACM Transactions on Applied Perception},

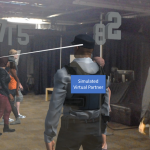

abstract = {Extended reality (XR) technologies, such as virtual reality (VR) and augmented reality (AR), provide users, their avatars, and embodied agents a shared platform to collaborate in a spatial context. Traditional face-to-face communication is limited by users' proximity, meaning that another human's non-verbal embodied cues become more difficult to perceive the farther one is away from that person. In this paper, we describe and evaluate the ``Big Head'' technique, in which a human's head in VR/AR is scaled up relative to their distance from the observer as a mechanism for enhancing the visibility of non-verbal facial cues, such as facial expressions or eye gaze. To better understand and explore this technique, we present two complementary human-subject experiments in this paper.

In our first experiment, we conducted a VR study with a head-mounted display (HMD) to understand the impact of increased or decreased head scales on participants' ability to perceive facial expressions as well as their sense of comfort and feeling of ``uncanniness'' over distances of up to 10 meters. We explored two different scaling methods and compared perceptual thresholds and user preferences. Our second experiment was performed in an outdoor AR environment with an optical see-through (OST) HMD. Participants were asked to estimate facial expressions and eye gaze, and identify a virtual human over large distances of 30, 60, and 90 meters. In both experiments, our results show significant differences in minimum, maximum, and ideal head scales for different distances and tasks related to perceiving faces, facial expressions, and eye gaze, while we also found that participants were more comfortable with slightly bigger heads at larger distances. We discuss our findings with respect to the technologies used, and we discuss implications and guidelines for practical applications that aim to leverage XR-enhanced facial cues.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Jeremy Bailenson; Pamela J. Wisniewski; Greg Welch The Advantages of Virtual Dogs Over Virtual People: Using Augmented Reality to Provide Social Support in Stressful Situations Journal Article In: International Journal of Human Computer Studies, 2022. @article{Norouzi2022b,

title = {The Advantages of Virtual Dogs Over Virtual People: Using Augmented Reality to Provide Social Support in Stressful Situations},

author = {Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Jeremy Bailenson and Pamela J. Wisniewski and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/05/1-s2.0-S1071581922000659-main.pdf},

year = {2022},

date = {2022-05-01},

urldate = {2022-05-01},

journal = {International Journal of Human Computer Studies},

abstract = {Past research highlights the potential for leveraging both humans and animals as social support figures in one’s real life to enhance performance and reduce physiological and psychological stress. Some studies have shown that typically dogs are more effective than people. Various situational and interpersonal circumstances limit the opportunities for receiving support from actual animals in the real world, introducing the need for alternative approaches. To that end, advances in augmented reality (AR) technology introduce new opportunities for realizing and investigating virtual dogs as social support figures. In this paper, we report on a within-subjects 3x1 (i.e., no support, virtual human, or virtual dog) experimental design study with 33 participants. We examined the effect on performance, attitude towards the task and the support figure, and stress and anxiety measured through both subjective questionnaires and heart rate data. Our mixed-methods analysis revealed that participants significantly preferred the virtual dog support figure, and evaluated it more positively, compared to the other conditions. Themes that emerged from a qualitative analysis of our participants’ post-study interview responses align with these findings, as some of our participants mentioned feeling more comfortable with the virtual dog compared to the virtual human, although the virtual human was deemed more interactive. We did not find significant differences between our conditions in terms of change in average heart rate; however, average heart rate significantly increased during all conditions. Our research contributes to understanding how AR virtual support dogs can potentially be used to provide social support to people in stressful situations, especially when real support figures cannot be present. We discuss the implications of our findings and share insights for future research.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

2021

|

| Matt Gottsacker; Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Gregory F. Welch Diegetic Representations for Seamless Cross-Reality Interruptions Conference Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2021. @conference{Gottsacker2021,

title = {Diegetic Representations for Seamless Cross-Reality Interruptions},

author = {Matt Gottsacker and Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/08/ISMAR_2021_Paper__Interruptions_.pdf},

year = {2021},

date = {2021-10-15},

urldate = {2021-10-15},

booktitle = {Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages = {10},

abstract = {Due to the closed design of modern virtual reality (VR) head-mounted displays (HMDs), users tend to lose awareness of their real-world surroundings. This is particularly challenging when another person in the same physical space needs to interrupt the VR user for a brief conversation. Such interruptions, e.g., tapping a VR user on the shoulder, can cause a disruptive break in presence (BIP), which affects their place and plausibility illusions, and may cause a drop in performance of their virtual activity. Recent findings related to the concept of diegesis, which denotes the internal consistency of an experience/story, suggest potential benefits of integrating registered virtual representations for physical interactors, especially when these appear internally consistent in VR. In this paper, we present a human-subject study we conducted to compare and evaluate five different diegetic and non-diegetic methods to facilitate cross-reality interruptions in a virtual office environment, where a user’s task was briefly interrupted by a physical person. We created a Cross-Reality Interaction Questionnaire (CRIQ) to capture the quality of the interaction from the VR user’s perspective. Our results show that the diegetic representations afforded the highest quality interactions, the highest place illusions, and caused the least disruption of the participants’ virtual experiences. We found reasonably high senses of co-presence with the partially and fully diegetic virtual representations. We discuss our findings as well as implications for practical applications that aim to leverage virtual representations to ease cross-reality interruptions.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

| Nahal Norouzi; Gerd Bruder; Austin Erickson; Kangsoo Kim; Jeremy Bailenson; Pamela J. Wisniewski; Charles E. Hughes; Gregory F. Welch Virtual Animals as Diegetic Attention Guidance Mechanisms in 360-Degree Experiences Journal Article In: IEEE Transactions on Visualization and Computer Graphics (TVCG) Special Issue on ISMAR 2021, pp. 11, 2021. @article{Norouzi2021,

title = {Virtual Animals as Diegetic Attention Guidance Mechanisms in 360-Degree Experiences},

author = {Nahal Norouzi and Gerd Bruder and Austin Erickson and Kangsoo Kim and Jeremy Bailenson and Pamela J. Wisniewski and Charles E. Hughes and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/08/IEEE_ISMAR_TVCG_2021.pdf},

year = {2021},

date = {2021-10-15},

urldate = {2021-10-15},

journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG) Special Issue on ISMAR 2021},

pages = {11},

abstract = {360-degree experiences such as cinematic virtual reality and 360-degree videos are becoming increasingly popular. In most examples, viewers can freely explore the content by changing their orientation. However, in some cases, this increased freedom may lead to viewers missing important events within such experiences. Thus, a recent research thrust has focused on studying mechanisms for guiding viewers’ attention while maintaining their sense of presence and fostering a positive user experience. One approach is the utilization of diegetic mechanisms, characterized by an internal consistency with respect to the narrative and the environment, for attention guidance. While such mechanisms are highly attractive, their uses and potential implementations are still not well understood. Additionally, acknowledging the user in 360-degree experiences has been linked to a higher sense of presence and connection. However, less is known when acknowledging behaviors are carried out by attention guiding mechanisms. To close these gaps, we conducted a within-subjects user study with five conditions of no guide and virtual arrows, birds, dogs, and dogs that acknowledge the user and the environment. Through our mixed-methods analysis, we found that the diegetic virtual animals resulted in a more positive user experience, all of which were at least as effective as the non-diegetic arrow in guiding users towards target events. The acknowledging dog received the most positive responses from our participants in terms of preference and user experience and significantly improved their sense of presence compared to the non-diegetic arrow. Lastly, three themes emerged from a qualitative analysis of our participants’ feedback, indicating the importance of the guide’s blending in, its acknowledging behavior, and participants’ positive associations as the main factors for our participants’ preferences.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Kangsoo Kim; Nahal Norouzi; Dongsik Jo; Gerd Bruder; Gregory F. Welch The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality Book Chapter In: Nee, A. Y. C.; Ong, S. K. (Ed.): Handbook of Augmented Reality, pp. 60, Springer, 2021. @inbook{Kim2021,

title = {The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality},

author = {Kangsoo Kim and Nahal Norouzi and Dongsik Jo and Gerd Bruder and Gregory F. Welch},

editor = {A. Y. C. Nee and S. K. Ong},

year = {2021},

date = {2021-09-01},

urldate = {2021-09-01},

booktitle = {Handbook of Augmented Reality},

pages = {60},

publisher = {Springer},

abstract = {Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, Augmented/Mixed Reality (AR/MR) combines virtual content with the real environment and is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced Artificial Intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives. In this chapter, we describe the concept of transreality that symbiotically connects the physical and the virtual worlds incorporating the aforementioned advanced technologies, and illustrate how such transreality environments can transform our activities in them, providing intelligent and intuitive interaction with the environment while exploring prior research literature in this domain. We also present the potential of virtually embodied interactions—e.g., employing virtual avatars and agents—in highly connected transreality spaces for enhancing human abilities and perception. Recent ongoing research focusing on the effects of embodied interaction is described and discussed in different aspects such as perceptual, cognitive, and social contexts. The chapter will end with discussions on potential research directions in the future and implications related to the user experience in transreality.},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

|

| Austin Erickson; Kangsoo Kim; Gerd Bruder; Gregory F. Welch Beyond Visible Light: User and Societal Impacts of Egocentric Multispectral Vision Proceedings Article In: Chen, J. Y. C.; Fragomeni, G. (Ed.): Proceedings of the 2021 International Conference on Virtual, Augmented, and Mixed Reality, pp. 19, Springer Nature, Washington, D.C., 2021. @inproceedings{Erickson2020fb,

title = {Beyond Visible Light: User and Societal Impacts of Egocentric Multispectral Vision},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

editor = {J. Y. C. Chen and G. Fragomeni},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/03/VAMR21-MSV.pdf},

doi = {10.1007/978-3-030-77599-5_23},

year = {2021},

date = {2021-07-24},

booktitle = {Proceedings of the 2021 International Conference on Virtual, Augmented, and Mixed Reality},

number = {23},

pages = {19},

publisher = {Springer Nature},

address = {Washington, D.C.},

abstract = {Multi-spectral imagery is becoming popular for a wide range of application fields from agriculture to healthcare, mainly stemming from advances in consumer sensor and display technologies. Modern augmented reality (AR) head-mounted displays already combine a multitude of sensors and are well-suited for integration with additional sensors, such as cameras capturing information from different parts of the electromagnetic spectrum. In this paper, we describe a novel multi-spectral vision prototype based on the Microsoft HoloLens 1, which we extended with two thermal infrared (IR) cameras and two ultraviolet (UV) cameras. We performed an exploratory experiment, in which participants wore the prototype for an extended period of time and assessed its potential to augment our daily activities. Our report covers a discussion of qualitative insights on personal and societal uses of such novel multi-spectral vision systems, including their applicability for use during the COVID-19 pandemic.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Austin Erickson; Kangsoo Kim; Alexis Lambert; Gerd Bruder; Michael P. Browne; Greg Welch An Extended Analysis on the Benefits of Dark Mode User Interfaces in Optical See-Through Head-Mounted Displays Journal Article In: ACM Transactions on Applied Perception, vol. 18, no. 3, pp. 22, 2021. @article{Erickson2021,

title = {An Extended Analysis on the Benefits of Dark Mode User Interfaces in Optical See-Through Head-Mounted Displays},

author = {Austin Erickson and Kangsoo Kim and Alexis Lambert and Gerd Bruder and Michael P. Browne and Greg Welch},

editor = {Victoria Interrante and Martin Giese},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/03/ACM_TAP2020_DarkMode1_5.pdf},

doi = {10.1145/3456874},

year = {2021},

date = {2021-05-20},

journal = {ACM Transactions on Applied Perception},

volume = {18},

number = {3},

pages = {22},

abstract = {Light-on-dark color schemes, so-called “Dark Mode,” are becoming more and more popular over a wide range of display technologies and application fields. Many people who have to look at computer screens for hours at a time, such as computer programmers and computer graphics artists, indicate a preference for switching colors on a computer screen from dark text on a light background to light text on a dark background due to perceived advantages related to visual comfort and acuity, specifically when working in low-light environments.

In this paper, we investigate the effects of dark mode color schemes in the field of optical see-through head-mounted displays (OST-HMDs), where the characteristic “additive” light model implies that bright graphics are visible but dark graphics are transparent. We describe two human-subject studies in which we evaluated a normal and inverted color mode in front of different physical backgrounds and different lighting conditions. Our results indicate that dark mode graphics displayed on the HoloLens have significant benefits for visual acuity and usability, while user preferences depend largely on the lighting in the physical environment. We discuss the implications of these effects on user interfaces and applications.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Hiroshi Furuya; Kangsoo Kim; Gerd Bruder; Pamela J. Wisniewski; Gregory F. Welch Autonomous Vehicle Visual Embodiment for Pedestrian Interactions in Crossing Scenarios: Virtual Drivers in AVs for Pedestrian Crossing Proceedings Article In: Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, pp. 7, Association for Computing Machinery, New York, NY, USA, 2021, ISBN: 9781450380959. @inproceedings{Furuya2021,

title = {Autonomous Vehicle Visual Embodiment for Pedestrian Interactions in Crossing Scenarios: Virtual Drivers in AVs for Pedestrian Crossing},

author = {Hiroshi Furuya and Kangsoo Kim and Gerd Bruder and Pamela J. Wisniewski and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/06/Furuya2021.pdf},

doi = {10.1145/3411763.3451626},

isbn = {9781450380959},

year = {2021},

date = {2021-05-08},

booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

number = {304},

pages = {7},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI EA'21},

abstract = {This work presents a novel prototype autonomous vehicle (AV) human-machine interface (HMI) in virtual reality (VR) that utilizes a human-like visual embodiment in the driver's seat of an AV to communicate AV intent to pedestrians in a crosswalk scenario. There is currently a gap in understanding the use of virtual humans in AV HMIs for pedestrian crossing despite the demonstrated efficacy of human-like interfaces in improving human-machine relationships. We conduct a 3x2 within-subjects experiment in VR using our prototype to assess the effects of a virtual human visual embodiment AV HMI on pedestrian crossing behavior and experience. In the experiment participants walk across a virtual crosswalk in front of an AV. How long they took to decide to cross and how long it took for them to reach the other side were collected, in addition to their subjective preferences and feelings of safety. Of 26 participants, 25 preferred the condition with the most anthropomorphic features. An intermediate condition where a human-like virtual driver was present but did not exhibit any behaviors was least preferred and also had a significant effect on time to decide. This work contributes the first empirical work on using human-like visual embodiments for AV HMIs.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

This work presents a novel prototype autonomous vehicle (AV) human-machine interface (HMI) in virtual reality (VR) that utilizes a human-like visual embodiment in the driver's seat of an AV to communicate AV intent to pedestrians in a crosswalk scenario. There is currently a gap in understanding the use of virtual humans in AV HMIs for pedestrian crossing despite the demonstrated efficacy of human-like interfaces in improving human-machine relationships. We conduct a 3x2 within-subjects experiment in VR using our prototype to assess the effects of a virtual human visual embodiment AV HMI on pedestrian crossing behavior and experience. In the experiment participants walk across a virtual crosswalk in front of an AV. How long they took to decide to cross and how long it took for them to reach the other side were collected, in addition to their subjective preferences and feelings of safety. Of 26 participants, 25 preferred the condition with the most anthropomorphic features. An intermediate condition where a human-like virtual driver was present but did not exhibit any behaviors was least preferred and also had a significant effect on time to decide. This work contributes the first empirical work on using human-like visual embodiments for AV HMIs. |

| Zubin Choudhary; Matt Gottsacker; Kangsoo Kim; Ryan Schubert; Jeanine Stefanucci; Gerd Bruder; Greg Welch Revisiting Distance Perception with Scaled Embodied Cues in Social Virtual Reality Proceedings Article In: IEEE Virtual Reality (VR), 2021, 2021. @inproceedings{Choudhary2021,

title = {Revisiting Distance Perception with Scaled Embodied Cues in Social Virtual Reality},

author = {Zubin Choudhary and Matt Gottsacker and Kangsoo Kim and Ryan Schubert and Jeanine Stefanucci and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/04/C2593-Revisiting-Distance-Perception-with-Scaled-Embodied-Cues-in-Social-Virtual-Reality-7.pdf},

year = {2021},

date = {2021-04-01},

publisher = {IEEE Virtual Reality (VR), 2021},

abstract = {Previous research on distance estimation in virtual reality (VR) has well established that even for geometrically accurate virtual objects and environments users tend to systematically misestimate distances. This has implications for Social VR, where it introduces variables in personal space and proxemics behavior that change social behaviors compared to the real world. One yet unexplored factor is related to the trend that avatars’ embodied cues in Social VR are often scaled, e.g., by making one’s head bigger or one’s voice louder, to make social cues more pronounced over longer distances.

In this paper we investigate how the perception of avatar distance is changed based on two means for scaling embodied social cues: visual head scale and verbal volume scale. We conducted a human subject study employing a mixed factorial design with two Social VR avatar representations (full-body, head-only) as a between factor as well as three visual head scales and three verbal volume scales (up-scaled, accurate, down-scaled) as within factors. For three distances from social to far-public space, we found that visual head scale had a significant effect on distance judgments and should be tuned for Social VR, while conflicting verbal volume scales did not, indicating that voices can be scaled in Social VR without immediate repercussions on spatial estimates. We discuss the interactions between the factors and implications for Social VR.

},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Previous research on distance estimation in virtual reality (VR) has well established that even for geometrically accurate virtual objects and environments users tend to systematically misestimate distances. This has implications for Social VR, where it introduces variables in personal space and proxemics behavior that change social behaviors compared to the real world. One yet unexplored factor is related to the trend that avatars’ embodied cues in Social VR are often scaled, e.g., by making one’s head bigger or one’s voice louder, to make social cues more pronounced over longer distances.

In this paper we investigate how the perception of avatar distance is changed based on two means for scaling embodied social cues: visual head scale and verbal volume scale. We conducted a human subject study employing a mixed factorial design with two Social VR avatar representations (full-body, head-only) as a between factor as well as three visual head scales and three verbal volume scales (up-scaled, accurate, down-scaled) as within factors. For three distances from social to far-public space, we found that visual head scale had a significant effect on distance judgments and should be tuned for Social VR, while conflicting verbal volume scales did not, indicating that voices can be scaled in Social VR without immediate repercussions on spatial estimates. We discuss the interactions between the factors and implications for Social VR.

|

2020

|

![[Demo] Towards Interactive Virtual Dogs as a Pervasive Social Companion in Augmented Reality](https://sreal.ucf.edu/wp-content/uploads/2020/12/demoFig-e1607360623259.png) | Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Greg Welch [Demo] Towards Interactive Virtual Dogs as a Pervasive Social Companion in Augmented Reality Proceedings Article In: Proceedings of the combined International Conference on Artificial Reality & Telexistence and Eurographics Symposium on Virtual Environments (ICAT-EGVE), pp. 29-30, 2020, (Best Demo Audience Choice Award). @inproceedings{Norouzi2020d,

title = {[Demo] Towards Interactive Virtual Dogs as a Pervasive Social Companion in Augmented Reality},

author = {Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/029-030.pdf},

doi = {10.2312/egve.20201283},

year = {2020},

date = {2020-12-04},

booktitle = {Proceedings of the combined International Conference on Artificial Reality & Telexistence and Eurographics Symposium on Virtual Environments (ICAT-EGVE)},

pages = {29-30},

abstract = {Pets and animal-assisted intervention sessions have shown to be beneficial for humans' mental, social, and physical health. However, for specific populations, factors such as hygiene restrictions, allergies, and care and resource limitations reduce interaction opportunities. In parallel, understanding the capabilities of animals' technological representations, such as robotic and digital forms, have received considerable attention and has fueled the utilization of many of these technological representations. Additionally, recent advances in augmented reality technology have allowed for the realization of virtual animals with flexible appearances and behaviors to exist in the real world. In this demo, we present a companion virtual dog in augmented reality that aims to facilitate a range of interactions with populations, such as children and older adults. We discuss the potential benefits and limitations of such a companion and propose future use cases and research directions.},

note = {Best Demo Audience Choice Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Pets and animal-assisted intervention sessions have shown to be beneficial for humans' mental, social, and physical health. However, for specific populations, factors such as hygiene restrictions, allergies, and care and resource limitations reduce interaction opportunities. In parallel, understanding the capabilities of animals' technological representations, such as robotic and digital forms, have received considerable attention and has fueled the utilization of many of these technological representations. Additionally, recent advances in augmented reality technology have allowed for the realization of virtual animals with flexible appearances and behaviors to exist in the real world. In this demo, we present a companion virtual dog in augmented reality that aims to facilitate a range of interactions with populations, such as children and older adults. We discuss the potential benefits and limitations of such a companion and propose future use cases and research directions. |

![[Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays](https://sreal.ucf.edu/wp-content/uploads/2020/12/OST-mockup-acuity-e1607360140366-150x150.png) | Austin Erickson; Kangsoo Kim; Gerd Bruder; Gregory F. Welch [Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays Proceedings Article In: Kulik, Alexander; Sra, Misha; Kim, Kangsoo; Seo, Byung-Kuk (Ed.): Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 23-24, The Eurographics Association, 2020, ISBN: 978-3-03868-112-0. @inproceedings{Erickson2020f,

title = {[Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

editor = {Kulik, Alexander and Sra, Misha and Kim, Kangsoo and Seo, Byung-Kuk},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/DarkmodeDEMO_ICAT_EGVE_2020-2.pdf

https://www.youtube.com/watch?v=VJQTaYyofCw&t=61s},

doi = {10.2312/egve.20201280},

isbn = {978-3-03868-112-0},

year = {2020},

date = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {23-24},

publisher = {The Eurographics Association},

organization = {The Eurographics Association},

abstract = {In the fields of augmented reality (AR) and virtual reality (VR), many applications involve user interfaces (UIs) to display various types of information to users. Such UIs are an important component that influences user experience and human factors in AR/VR because the users are directly facing and interacting with them to absorb the visualized information and manipulate the content. While consumer’s interests in different forms of near-eye displays, such as AR/VR head-mounted displays (HMDs), are increasing, research on the design standard for AR/VR UIs and human factors becomes more and more interesting and timely important. Although UI configurations, such as dark mode and light mode, have increased in popularity on other display types over the last several years, they have yet to make their way into AR/VR devices as built in features. This demo showcases several use cases of dark mode and light mode UIs on AR/VR HMDs, and provides general guidelines for when they should be used to provide perceptual benefits to the user},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

In the fields of augmented reality (AR) and virtual reality (VR), many applications involve user interfaces (UIs) to display various types of information to users. Such UIs are an important component that influences user experience and human factors in AR/VR because the users are directly facing and interacting with them to absorb the visualized information and manipulate the content. While consumer’s interests in different forms of near-eye displays, such as AR/VR head-mounted displays (HMDs), are increasing, research on the design standard for AR/VR UIs and human factors becomes more and more interesting and timely important. Although UI configurations, such as dark mode and light mode, have increased in popularity on other display types over the last several years, they have yet to make their way into AR/VR devices as built in features. This demo showcases several use cases of dark mode and light mode UIs on AR/VR HMDs, and provides general guidelines for when they should be used to provide perceptual benefits to the user |

| Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Austin Erickson; Zubin Choudhary; Yifan Li; Greg Welch A Systematic Literature Review of Embodied Augmented Reality Agents in Head-Mounted Display Environments Proceedings Article In: Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 11, 2020. @inproceedings{Norouzi2020c,

title = {A Systematic Literature Review of Embodied Augmented Reality Agents in Head-Mounted Display Environments},

author = {Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Austin Erickson and Zubin Choudhary and Yifan Li and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/11/IVC_ICAT_EGVE2020.pdf

https://www.youtube.com/watch?v=IsX5q86pH4M},

year = {2020},

date = {2020-12-02},

urldate = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {11},

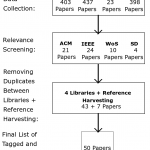

abstract = {Embodied agents, i.e., computer-controlled characters, have proven useful for various applications across a multitude of display setups and modalities. While most traditional work focused on embodied agents presented on a screen or projector, and a growing number of works are focusing on agents in virtual reality, a comparatively small number of publications looked at such agents in augmented reality (AR). Such AR agents, specifically when using see-through head-mounted displays (HMDs) as the display medium, show multiple critical differences to other forms of agents, including their appearances, behaviors, and physical-virtual interactivity. Due to the unique challenges in this specific field, and due to the comparatively limited attention by the research community so far, we believe that it is important to map the field to understand the current trends, challenges, and future research. In this paper, we present a systematic review of the research performed on interactive, embodied AR agents using HMDs. Starting with 1261 broadly related papers, we conducted an in-depth review of 50 directly related papers from 2000 to 2020, focusing on papers that reported on user studies aiming to improve our understanding of interactive agents in AR HMD environments or their utilization in specific applications. We identified common research and application areas of AR agents through a structured iterative process, present research trends, and gaps, and share insights on future directions.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

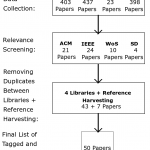

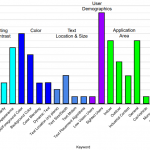

Embodied agents, i.e., computer-controlled characters, have proven useful for various applications across a multitude of display setups and modalities. While most traditional work focused on embodied agents presented on a screen or projector, and a growing number of works are focusing on agents in virtual reality, a comparatively small number of publications looked at such agents in augmented reality (AR). Such AR agents, specifically when using see-through head-mounted displays (HMDs) as the display medium, show multiple critical differences to other forms of agents, including their appearances, behaviors, and physical-virtual interactivity. Due to the unique challenges in this specific field, and due to the comparatively limited attention by the research community so far, we believe that it is important to map the field to understand the current trends, challenges, and future research. In this paper, we present a systematic review of the research performed on interactive, embodied AR agents using HMDs. Starting with 1261 broadly related papers, we conducted an in-depth review of 50 directly related papers from 2000 to 2020, focusing on papers that reported on user studies aiming to improve our understanding of interactive agents in AR HMD environments or their utilization in specific applications. We identified common research and application areas of AR agents through a structured iterative process, present research trends, and gaps, and share insights on future directions. |

| Austin Erickson; Kangsoo Kim; Gerd Bruder; Greg Welch A Review of Visual Perception Research in Optical See-Through Augmented Reality Proceedings Article In: Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 8, The Eurographics Association, 2020, ISBN: 978-3-03868-111-3. @inproceedings{Erickson2020e,

title = {A Review of Visual Perception Research in Optical See-Through Augmented Reality},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/05/DarkModeSurvey_ICAT_EGVE_2020.pdf},

doi = {10.2312/egve.20201256},

isbn = {978-3-03868-111-3},

year = {2020},

date = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {8},

publisher = {The Eurographics Association},

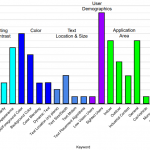

organization = {The Eurographics Association},

abstract = {In the field of augmented reality (AR), many applications involve user interfaces (UIs) that overlay visual information over the user's view of their physical environment, e.g., as text, images, or three-dimensional scene elements. In this scope, optical see-through head-mounted displays (OST-HMDs) are particularly interesting as they typically use an additive light model, which denotes that the perception of the displayed virtual imagery is a composite of the lighting conditions of one's environment, the coloration of the objects that make up the virtual imagery, and the coloration of physical objects that lay behind them. While a large body of literature focused on investigating the visual perception of UI elements in immersive and flat panel displays, comparatively less effort has been spent on OST-HMDs. Due to the unique visual effects with OST-HMDs, we believe that it is important to review the field to understand the perceptual challenges, research trends, and future directions. In this paper, we present a systematic survey of literature based on the IEEE and ACM digital libraries, which explores users' perception of displaying text-based information on an OST-HMD, and aim to provide relevant design suggestions based on the meta-analysis results. We carefully review 14 key papers relevant to the visual perception research in OST-HMDs with UI elements, and present the current state of the research field, associated trends, noticeable research gaps in the literature, and recommendations for potential future research in this domain. },

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

In the field of augmented reality (AR), many applications involve user interfaces (UIs) that overlay visual information over the user's view of their physical environment, e.g., as text, images, or three-dimensional scene elements. In this scope, optical see-through head-mounted displays (OST-HMDs) are particularly interesting as they typically use an additive light model, which denotes that the perception of the displayed virtual imagery is a composite of the lighting conditions of one's environment, the coloration of the objects that make up the virtual imagery, and the coloration of physical objects that lay behind them. While a large body of literature focused on investigating the visual perception of UI elements in immersive and flat panel displays, comparatively less effort has been spent on OST-HMDs. Due to the unique visual effects with OST-HMDs, we believe that it is important to review the field to understand the perceptual challenges, research trends, and future directions. In this paper, we present a systematic survey of literature based on the IEEE and ACM digital libraries, which explores users' perception of displaying text-based information on an OST-HMD, and aim to provide relevant design suggestions based on the meta-analysis results. We carefully review 14 key papers relevant to the visual perception research in OST-HMDs with UI elements, and present the current state of the research field, associated trends, noticeable research gaps in the literature, and recommendations for potential future research in this domain. |

![[Poster] An Automated Virtual Receptionist for Recognizing Visitors and Assuring Mask Wearing](https://sreal.ucf.edu/wp-content/uploads/2020/12/Zehtabian2020aav-300x272.jpg) | Sharare Zehtabian; Siavash Khodadadeh; Kangsoo Kim; Gerd Bruder; Greg Welch; Ladislau Bölöni; Damla Turgut [Poster] An Automated Virtual Receptionist for Recognizing Visitors and Assuring Mask Wearing Proceedings Article In: Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 9-10, 2020. @inproceedings{Zehtabian2020aav,

title = {[Poster] An Automated Virtual Receptionist for Recognizing Visitors and Assuring Mask Wearing},

author = {Sharare Zehtabian and Siavash Khodadadeh and Kangsoo Kim and Gerd Bruder and Greg Welch and Ladislau Bölöni and Damla Turgut},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/VirtualReceptionist_Poster_ICAT_EGVE2020.pdf

https://www.youtube.com/watch?v=r6bXNPn3lWU&feature=emb_logo},

doi = {10.2312/egve.20201273},

year = {2020},

date = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {9-10},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Celso M. de Melo; Kangsoo Kim; Nahal Norouzi; Gerd Bruder; Gregory Welch Reducing Cognitive Load and Improving Warfighter Problem Solving with Intelligent Virtual Assistants Journal Article In: Frontiers in Psychology, vol. 11, no. 554706, pp. 1-12, 2020. @article{DeMelo2020rcl,

title = {Reducing Cognitive Load and Improving Warfighter Problem Solving with Intelligent Virtual Assistants},

author = {Celso M. de Melo and Kangsoo Kim and Nahal Norouzi and Gerd Bruder and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/11/Melo2020aa-2.pdf},

doi = {10.3389/fpsyg.2020.554706},

year = {2020},

date = {2020-11-17},

journal = {Frontiers in Psychology},

volume = {11},

number = {554706},

pages = {1-12},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

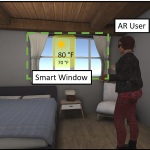

| Austin Erickson; Kangsoo Kim; Gerd Bruder; Gregory F. Welch Exploring the Limitations of Environment Lighting on Optical See-Through Head-Mounted Displays Proceedings Article In: Proceedings of the ACM Symposium on Spatial User Interaction, pp. 1-8, Association for Computing Machinery, New York, NY, USA, 2020, ISBN: 9781450379434. @inproceedings{Erickson2020d,

title = {Exploring the Limitations of Environment Lighting on Optical See-Through Head-Mounted Displays},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/09/sui20a-sub1047-cam-i26-1.pdf

https://youtu.be/3jJ-j35oO1I},

doi = {10.1145/3385959.3418445},

isbn = {9781450379434},

year = {2020},

date = {2020-10-31},

booktitle = {Proceedings of the ACM Symposium on Spatial User Interaction},

pages = {1-8},

publisher = {ACM},

address = {New York, NY, USA},

organization = {Association for Computing Machinery},

series = {SUI '20},

abstract = {Due to the additive light model employed by most optical see-through head-mounted displays (OST-HMDs), they provide the best augmented reality (AR) views in dark environments, where the added AR light does not have to compete against existing real-world lighting. AR imagery displayed on such devices loses a significant amount of contrast in well-lit environments such as outdoors in direct sunlight. To compensate for this, OST-HMDs often use a tinted visor to reduce the amount of environment light that reaches the user’s eyes, which in turn results in a loss of contrast in the user’s physical environment. While these effects are well known and grounded in existing literature, formal measurements of the illuminance and contrast of modern OST-HMDs are currently missing. In this paper, we provide illuminance measurements for both the Microsoft HoloLens 1 and its successor the HoloLens 2 under varying environment lighting conditions ranging from 0 to 20,000 lux. We evaluate how environment lighting impacts the user by calculating contrast ratios between rendered black (transparent) and white imagery displayed under these conditions, and evaluate how the intensity of environment lighting is impacted by donning and using the HMD. Our results indicate the further need for refinement in the design of future OST-HMDs to optimize contrast in environments with illuminance values greater than or equal to those found in indoor working environments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Due to the additive light model employed by most optical see-through head-mounted displays (OST-HMDs), they provide the best augmented reality (AR) views in dark environments, where the added AR light does not have to compete against existing real-world lighting. AR imagery displayed on such devices loses a significant amount of contrast in well-lit environments such as outdoors in direct sunlight. To compensate for this, OST-HMDs often use a tinted visor to reduce the amount of environment light that reaches the user’s eyes, which in turn results in a loss of contrast in the user’s physical environment. While these effects are well known and grounded in existing literature, formal measurements of the illuminance and contrast of modern OST-HMDs are currently missing. In this paper, we provide illuminance measurements for both the Microsoft HoloLens 1 and its successor the HoloLens 2 under varying environment lighting conditions ranging from 0 to 20,000 lux. We evaluate how environment lighting impacts the user by calculating contrast ratios between rendered black (transparent) and white imagery displayed under these conditions, and evaluate how the intensity of environment lighting is impacted by donning and using the HMD. Our results indicate the further need for refinement in the design of future OST-HMDs to optimize contrast in environments with illuminance values greater than or equal to those found in indoor working environments. |

| Seungwon Kim; Mark Billinghurst; Kangsoo Kim Multimodal interfaces and communication cues for remote collaboration Journal Article In: Journal on Multimodal User Interfaces, vol. 14, no. 4, pp. 313-319, 2020, ISSN: 1783-7677, (Special Issue Editorial). @article{Kim2020mia,

title = {Multimodal interfaces and communication cues for remote collaboration},

author = {Seungwon Kim and Mark Billinghurst and Kangsoo Kim},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/10/Kim2020mia_Submission.pdf},

doi = {10.1007/s12193-020-00346-8},

issn = {1783-7677},

year = {2020},

date = {2020-10-03},

journal = {Journal on Multimodal User Interfaces},

volume = {14},

number = {4},

pages = {313-319},

note = {Special Issue Editorial},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Austin Erickson; Nahal Norouzi; Kangsoo Kim; Ryan Schubert; Jonathan Jules; Joseph J. LaViola Jr.; Gerd Bruder; Gregory F. Welch Sharing gaze rays for visual target identification tasks in collaborative augmented reality Journal Article In: Journal on Multimodal User Interfaces: Special Issue on Multimodal Interfaces and Communication Cues for Remote Collaboration, vol. 14, no. 4, pp. 353-371, 2020, ISSN: 1783-8738. @article{EricksonNorouzi2020,

title = {Sharing gaze rays for visual target identification tasks in collaborative augmented reality},

author = {Austin Erickson and Nahal Norouzi and Kangsoo Kim and Ryan Schubert and Jonathan Jules and Joseph J. LaViola Jr. and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/07/Erickson2020_Article_SharingGazeRaysForVisualTarget.pdf},

doi = {10.1007/s12193-020-00330-2},

issn = {1783-8738},

year = {2020},

date = {2020-07-09},

urldate = {2020-07-09},

journal = {Journal on Multimodal User Interfaces: Special Issue on Multimodal Interfaces and Communication Cues for Remote Collaboration},

volume = {14},

number = {4},

pages = {353-371},

abstract = {Augmented reality (AR) technologies provide a shared platform for users to collaborate in a physical context involving both real and virtual content. To enhance the quality of interaction between AR users, researchers have proposed augmenting users’ interpersonal space with embodied cues such as their gaze direction. While beneficial in achieving improved interpersonal spatial communication, such shared gaze environments suffer from multiple types of errors related to eye tracking and networking, that can reduce objective performance and subjective experience. In this paper, we present a human-subjects study to understand the impact of accuracy, precision, latency, and dropout based errors on users’ performance when using shared gaze cues to identify a target among a crowd of people. We simulated varying amounts of errors and the target distances and measured participants’ objective performance through their response time and error rate, and their subjective experience and cognitive load through questionnaires. We found significant differences suggesting that the simulated error levels had stronger effects on participants’ performance than target distance with accuracy and latency having a high impact on participants’ error rate. We also observed that participants assessed their own performance as lower than it objectively was. We discuss implications for practical shared gaze applications and we present a multi-user prototype system.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

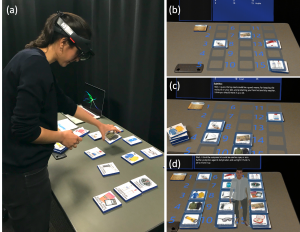

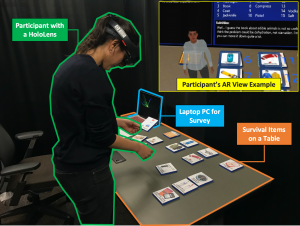

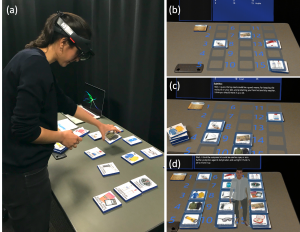

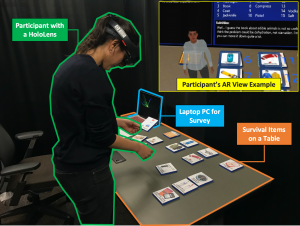

| Kangsoo Kim; Celso M. de Melo; Nahal Norouzi; Gerd Bruder; Gregory F. Welch Reducing Task Load with an Embodied Intelligent Virtual Assistant for Improved Performance in Collaborative Decision Making Proceedings Article In: Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), pp. 529-538, Atlanta, Georgia, 2020. @inproceedings{Kim2020rtl,

title = {Reducing Task Load with an Embodied Intelligent Virtual Assistant for Improved Performance in Collaborative Decision Making},

author = {Kangsoo Kim and Celso M. de Melo and Nahal Norouzi and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/02/IEEEVR2020_ARDesertSurvival.pdf

https://www.youtube.com/watch?v=G_iZ_asjp3I&t=6s, YouTube Presentation},

doi = {10.1109/VR46266.2020.00-30},

year = {2020},

date = {2020-03-23},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR)},

pages = {529--538},

address = {Atlanta, Georgia},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

![[Demo] Towards Interactive Virtual Dogs as a Pervasive Social Companion in Augmented Reality](https://sreal.ucf.edu/wp-content/uploads/2020/12/demoFig-e1607360623259.png)

![[Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays](https://sreal.ucf.edu/wp-content/uploads/2020/12/OST-mockup-acuity-e1607360140366-150x150.png)

![[Poster] An Automated Virtual Receptionist for Recognizing Visitors and Assuring Mask Wearing](https://sreal.ucf.edu/wp-content/uploads/2020/12/Zehtabian2020aav-300x272.jpg)