2020

|

![[Poster] An Automated Virtual Receptionist for Recognizing Visitors and Assuring Mask Wearing](https://sreal.ucf.edu/wp-content/uploads/2020/12/Zehtabian2020aav-300x272.jpg) | Sharare Zehtabian; Siavash Khodadadeh; Kangsoo Kim; Gerd Bruder; Greg Welch; Ladislau Bölöni; Damla Turgut [Poster] An Automated Virtual Receptionist for Recognizing Visitors and Assuring Mask Wearing Proceedings Article In: Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 9-10, 2020. @inproceedings{Zehtabian2020aav,

title = {[Poster] An Automated Virtual Receptionist for Recognizing Visitors and Assuring Mask Wearing},

author = {Sharare Zehtabian and Siavash Khodadadeh and Kangsoo Kim and Gerd Bruder and Greg Welch and Ladislau Bölöni and Damla Turgut},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/VirtualReceptionist_Poster_ICAT_EGVE2020.pdf

https://www.youtube.com/watch?v=r6bXNPn3lWU&feature=emb_logo},

doi = {10.2312/egve.20201273},

year = {2020},

date = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {9-10},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

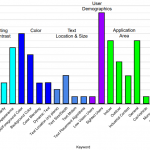

| Austin Erickson; Kangsoo Kim; Gerd Bruder; Greg Welch A Review of Visual Perception Research in Optical See-Through Augmented Reality Proceedings Article In: Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 8, The Eurographics Association, 2020, ISBN: 978-3-03868-111-3. @inproceedings{Erickson2020e,

title = {A Review of Visual Perception Research in Optical See-Through Augmented Reality},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/05/DarkModeSurvey_ICAT_EGVE_2020.pdf},

doi = {10.2312/egve.20201256},

isbn = {978-3-03868-111-3},

year = {2020},

date = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {8},

publisher = {The Eurographics Association},

organization = {The Eurographics Association},

abstract = {In the field of augmented reality (AR), many applications involve user interfaces (UIs) that overlay visual information over the user's view of their physical environment, e.g., as text, images, or three-dimensional scene elements. In this scope, optical see-through head-mounted displays (OST-HMDs) are particularly interesting as they typically use an additive light model, which denotes that the perception of the displayed virtual imagery is a composite of the lighting conditions of one's environment, the coloration of the objects that make up the virtual imagery, and the coloration of physical objects that lie behind them. While a large body of literature has focused on investigating the visual perception of UI elements in immersive and flat panel displays, comparatively less effort has been spent on OST-HMDs. Due to the unique visual effects of OST-HMDs, we believe that it is important to review the field to understand the perceptual challenges, research trends, and future directions. In this paper, we present a systematic survey of literature based on the IEEE and ACM digital libraries, which explores users' perception of displaying text-based information on an OST-HMD, and aim to provide relevant design suggestions based on the meta-analysis results. We carefully review 14 key papers relevant to the visual perception research in OST-HMDs with UI elements, and present the current state of the research field, associated trends, noticeable research gaps in the literature, and recommendations for potential future research in this domain. },

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Gregory F. Welch Kalman Filter Book Chapter In: Rehg, Jim (Ed.): Computer Vision: A Reference Guide, pp. 1–3, Springer International Publishing, Cham, Switzerland, 2020, ISBN: 978-3-030-03243-2. @inbook{Welch2020ab,

title = {Kalman Filter},

author = {Gregory F. Welch},

editor = {Jim Rehg},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/Welch2020ae.pdf},

doi = {10.1007/978-3-030-03243-2_716-1},

isbn = {978-3-030-03243-2},

year = {2020},

date = {2020-12-01},

booktitle = {Computer Vision: A Reference Guide},

pages = {1--3},

publisher = {Springer International Publishing},

address = {Cham, Switzerland},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

|

| Celso M. de Melo; Kangsoo Kim; Nahal Norouzi; Gerd Bruder; Gregory Welch Reducing Cognitive Load and Improving Warfighter Problem Solving with Intelligent Virtual Assistants Journal Article In: Frontiers in Psychology, vol. 11, no. 554706, pp. 1-12, 2020. @article{DeMelo2020rcl,

title = {Reducing Cognitive Load and Improving Warfighter Problem Solving with Intelligent Virtual Assistants},

author = {Celso M. de Melo and Kangsoo Kim and Nahal Norouzi and Gerd Bruder and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/11/Melo2020aa-2.pdf},

doi = {10.3389/fpsyg.2020.554706},

year = {2020},

date = {2020-11-17},

journal = {Frontiers in Psychology},

volume = {11},

number = {554706},

pages = {1-12},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Austin Erickson; Kangsoo Kim; Gerd Bruder; Gregory F. Welch Exploring the Limitations of Environment Lighting on Optical See-Through Head-Mounted Displays Proceedings Article In: Proceedings of the ACM Symposium on Spatial User Interaction, pp. 1-8, Association for Computing Machinery (ACM), New York, NY, USA, 2020, ISBN: 9781450379434. @inproceedings{Erickson2020d,

title = {Exploring the Limitations of Environment Lighting on Optical See-Through Head-Mounted Displays},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/09/sui20a-sub1047-cam-i26-1.pdf

https://youtu.be/3jJ-j35oO1I},

doi = {10.1145/3385959.3418445},

isbn = {9781450379434},

year = {2020},

date = {2020-10-31},

booktitle = {Proceedings of the ACM Symposium on Spatial User Interaction},

pages = {1-8},

publisher = {ACM},

address = {New York, NY, USA},

organization = {Association for Computing Machinery},

series = {SUI '20},

abstract = {Due to the additive light model employed by most optical see-through head-mounted displays (OST-HMDs), they provide the best augmented reality (AR) views in dark environments, where the added AR light does not have to compete against existing real-world lighting. AR imagery displayed on such devices loses a significant amount of contrast in well-lit environments such as outdoors in direct sunlight. To compensate for this, OST-HMDs often use a tinted visor to reduce the amount of environment light that reaches the user’s eyes, which in turn results in a loss of contrast in the user’s physical environment. While these effects are well known and grounded in existing literature, formal measurements of the illuminance and contrast of modern OST-HMDs are currently missing. In this paper, we provide illuminance measurements for both the Microsoft HoloLens 1 and its successor, the HoloLens 2, under varying environment lighting conditions ranging from 0 to 20,000 lux. We evaluate how environment lighting impacts the user by calculating contrast ratios between rendered black (transparent) and white imagery displayed under these conditions, and evaluate how the intensity of environment lighting is impacted by donning and using the HMD. Our results indicate the further need for refinement in the design of future OST-HMDs to optimize contrast in environments with illuminance values greater than or equal to those found in indoor working environments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

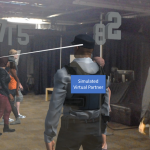

| Gregory F Welch; Ryan Schubert; Gerd Bruder; Derrick P Stockdreher; Adam Casebolt Augmented Reality Promises Mentally and Physically Stressful Training in Real Places Journal Article In: IACLEA Campus Law Enforcement Journal, vol. 50, no. 5, pp. 47–50, 2020. @article{Welch2020aa,

title = {Augmented Reality Promises Mentally and Physically Stressful Training in Real Places},

author = {Gregory F Welch and Ryan Schubert and Gerd Bruder and Derrick P Stockdreher and Adam Casebolt},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/10/Welch2020aa.pdf},

year = {2020},

date = {2020-10-05},

journal = {IACLEA Campus Law Enforcement Journal},

volume = {50},

number = {5},

pages = {47--50},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Seungwon Kim; Mark Billinghurst; Kangsoo Kim Multimodal interfaces and communication cues for remote collaboration Journal Article In: Journal on Multimodal User Interfaces, vol. 14, no. 4, pp. 313-319, 2020, ISSN: 1783-7677, (Special Issue Editorial). @article{Kim2020mia,

title = {Multimodal interfaces and communication cues for remote collaboration},

author = {Seungwon Kim and Mark Billinghurst and Kangsoo Kim},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/10/Kim2020mia_Submission.pdf},

doi = {10.1007/s12193-020-00346-8},

issn = {1783-7677},

year = {2020},

date = {2020-10-03},

journal = {Journal on Multimodal User Interfaces},

volume = {14},

number = {4},

pages = {313-319},

note = {Special Issue Editorial},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

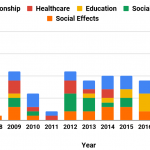

| Alexis Lambert; Nahal Norouzi; Gerd Bruder; Greg Welch

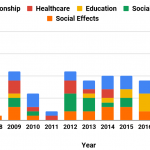

A Systematic Review of Ten Years of Research on Human Interaction with Social Robots Journal Article In: International Journal of Human-Computer Interaction, pp. 10, 2020. @article{Lambert2020,

title = {A Systematic Review of Ten Years of Research on Human Interaction with Social Robots},

author = {Alexis Lambert and Nahal Norouzi and Gerd Bruder and Greg Welch},

editor = {Constantine Stephanidis},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/08/8_25_2020_A-Systemat.pdf},

doi = {10.1080/10447318.2020.1801172},

year = {2020},

date = {2020-08-25},

journal = {International Journal of Human-Computer Interaction},

pages = {10},

abstract = {While research and development related to robotics has been going on for decades, the past decade in particular has seen a marked increase in related efforts, in part due to technological advances, increased technological accessibility and reliability, and increased commercial availability. What have come to be known as social robots are now being used to explore novel forms of human-robot interaction, to understand social norms, and to test expectations and human responses. To capture the contributions of these research efforts and identify current trends and future directions, we systematically review ten years of research in the field of social robotics between 2008 and 2018, which includes 86 publications with 70 user studies. We classify the past work based on the research topics and application areas, and provide information about the publications, their user studies, and the capabilities of the social robots utilized. We also discuss selected papers in detail and outline overall trends. Based on these findings, we identify some areas of potential future research.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

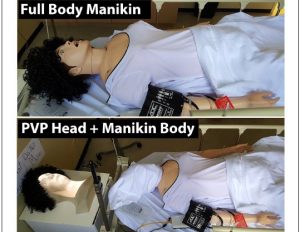

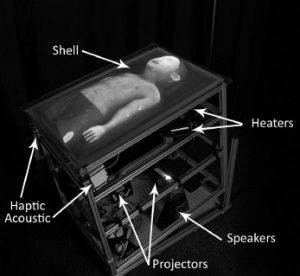

| Laura Gonzalez; Salam Daher; Greg Welch Neurological Assessment Using a Physical-Virtual Patient (PVP) Journal Article In: Simulation & Gaming, pp. 1–17, 2020. @article{Gonzalez2020aa,

title = {Neurological Assessment Using a Physical-Virtual Patient (PVP)},

author = {Laura Gonzalez and Salam Daher and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/08/Gonzalez2020aa.pdf},

year = {2020},

date = {2020-08-12},

journal = {Simulation & Gaming},

pages = {1--17},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

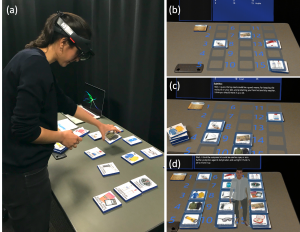

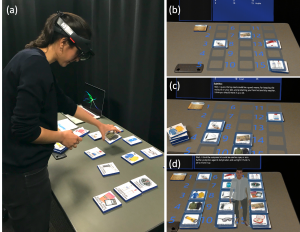

| Austin Erickson; Nahal Norouzi; Kangsoo Kim; Ryan Schubert; Jonathan Jules; Joseph J. LaViola Jr.; Gerd Bruder; Gregory F. Welch Sharing gaze rays for visual target identification tasks in collaborative augmented reality Journal Article In: Journal on Multimodal User Interfaces: Special Issue on Multimodal Interfaces and Communication Cues for Remote Collaboration, vol. 14, no. 4, pp. 353-371, 2020, ISSN: 1783-8738. @article{EricksonNorouzi2020,

title = {Sharing gaze rays for visual target identification tasks in collaborative augmented reality},

author = {Austin Erickson and Nahal Norouzi and Kangsoo Kim and Ryan Schubert and Jonathan Jules and Joseph J. LaViola Jr. and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/07/Erickson2020_Article_SharingGazeRaysForVisualTarget.pdf},

doi = {10.1007/s12193-020-00330-2},

issn = {1783-8738},

year = {2020},

date = {2020-07-09},

urldate = {2020-07-09},

journal = {Journal on Multimodal User Interfaces: Special Issue on Multimodal Interfaces and Communication Cues for Remote Collaboration},

volume = {14},

number = {4},

pages = {353-371},

abstract = {Augmented reality (AR) technologies provide a shared platform for users to collaborate in a physical context involving both real and virtual content. To enhance the quality of interaction between AR users, researchers have proposed augmenting users’ interpersonal space with embodied cues such as their gaze direction. While beneficial in achieving improved interpersonal spatial communication, such shared gaze environments suffer from multiple types of errors related to eye tracking and networking that can reduce objective performance and subjective experience. In this paper, we present a human-subjects study to understand the impact of accuracy, precision, latency, and dropout-based errors on users’ performance when using shared gaze cues to identify a target among a crowd of people. We simulated varying amounts of errors and target distances and measured participants’ objective performance through their response time and error rate, and their subjective experience and cognitive load through questionnaires. We found significant differences suggesting that the simulated error levels had stronger effects on participants’ performance than target distance, with accuracy and latency having a high impact on participants’ error rate. We also observed that participants assessed their own performance as lower than it objectively was. We discuss implications for practical shared gaze applications and present a multi-user prototype system.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

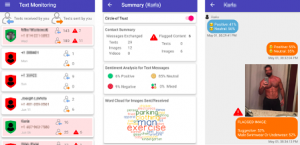

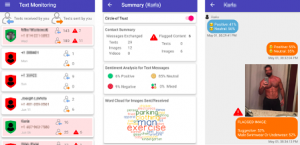

| Arup Kumar Ghosh; Charles E. Hughes; Pamela J. Wisniewski Circle of Trust: A New Approach to Mobile Online Safety for Teens and Parents Proceedings Article In: Proceedings of the CHI Conference on Human Factors in Computing Systems, pp. 618:1-14, 2020. @inproceedings{Ghosh2020cot,

title = {Circle of Trust: A New Approach to Mobile Online Safety for Teens and Parents},

author = {Arup Kumar Ghosh and Charles E. Hughes and Pamela J. Wisniewski},

doi = {10.1145/3313831.3376747},

year = {2020},

date = {2020-04-25},

booktitle = {Proceedings of the CHI Conference on Human Factors in Computing Systems},

pages = {618:1-14},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

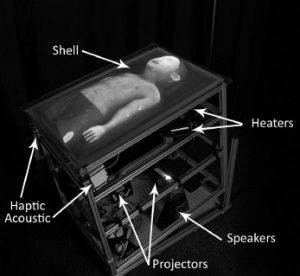

| Salam Daher; Jason Hochreiter; Ryan Schubert; Laura Gonzalez; Juan Cendan; Mindi Anderson; Desiree A Diaz; Gregory F. Welch The Physical-Virtual Patient Simulator: A Physical Human Form with Virtual Appearance and Behavior Journal Article In: Simulation in Healthcare, vol. 15, no. 2, pp. 115–121, 2020, (see erratum at DOI: 10.1097/SIH.0000000000000481). @article{Daher2020aa,

title = {The Physical-Virtual Patient Simulator: A Physical Human Form with Virtual Appearance and Behavior},

author = {Salam Daher and Jason Hochreiter and Ryan Schubert and Laura Gonzalez and Juan Cendan and Mindi Anderson and Desiree A Diaz and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/06/Daher2020aa1.pdf

https://journals.lww.com/simulationinhealthcare/Fulltext/2020/04000/The_Physical_Virtual_Patient_Simulator__A_Physical.9.aspx

https://journals.lww.com/simulationinhealthcare/Fulltext/2020/06000/Erratum_to_the_Physical_Virtual_Patient_Simulator_.12.aspx},

doi = {10.1097/SIH.0000000000000409},

year = {2020},

date = {2020-04-01},

journal = {Simulation in Healthcare},

volume = {15},

number = {2},

pages = {115--121},

note = {see erratum at DOI: 10.1097/SIH.0000000000000481},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

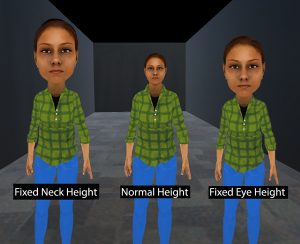

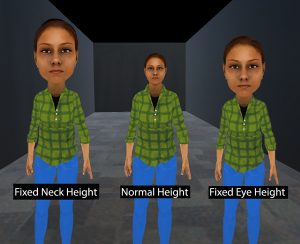

| Zubin Choudhary; Kangsoo Kim; Ryan Schubert; Gerd Bruder; Gregory F. Welch Virtual Big Heads: Analysis of Human Perception and Comfort of Head Scales in Social Virtual Reality Proceedings Article In: Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), pp. 425-433, Atlanta, Georgia, 2020. @inproceedings{Choudhary2020vbh,

title = {Virtual Big Heads: Analysis of Human Perception and Comfort of Head Scales in Social Virtual Reality},

author = {Zubin Choudhary and Kangsoo Kim and Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/02/IEEEVR2020_BigHead.pdf

https://www.youtube.com/watch?v=14289nufYf0, YouTube Presentation},

doi = {10.1109/VR46266.2020.00-41},

year = {2020},

date = {2020-03-23},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR)},

pages = {425-433},

address = {Atlanta, Georgia},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Austin Erickson; Gerd Bruder; Pamela J. Wisniewski; Greg Welch Examining Whether Secondary Effects of Temperature-Associated Virtual Stimuli Influence Subjective Perception of Duration Proceedings Article In: Proceedings of IEEE International Conference on Virtual Reality and 3D User Interfaces (IEEE VR), pp. 493-499, Atlanta, Georgia, 2020. @inproceedings{Erickson2020b,

title = {Examining Whether Secondary Effects of Temperature-Associated Virtual Stimuli Influence Subjective Perception of Duration},

author = {Austin Erickson and Gerd Bruder and Pamela J. Wisniewski and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/02/TimePerception_VR2020.pdf

https://www.youtube.com/watch?v=kG2M-cbjS3s&t=1s, YouTube Presentation},

doi = {10.1109/VR46266.2020.00-34},

year = {2020},

date = {2020-03-23},

urldate = {2020-03-23},

booktitle = {Proceedings of IEEE International Conference on Virtual Reality and 3D User Interfaces (IEEE VR)},

pages = {493-499},

address = {Atlanta, Georgia},

abstract = {Past work in augmented reality has shown that temperature-associated AR stimuli can induce warming and cooling sensations in the user, and prior work in psychology suggests that a person's body temperature can influence that person's sense of subjective perception of duration. In this paper, we present a user study to evaluate the relationship between temperature-associated virtual stimuli presented on an AR-HMD and the user's sense of subjective perception of duration and temperature. In particular, we investigate two independent variables: the apparent temperature of the virtual stimuli presented to the participant, which could be hot or cold, and the location of the stimuli, which could be in direct contact with the user, in indirect contact with the user, or both in direct and indirect contact simultaneously. We investigate how these variables affect the users' perception of duration and perception of body and environment temperature by having participants make prospective time estimations while observing the virtual stimulus and answering subjective questions regarding their body and environment temperatures. Our work confirms that temperature-associated virtual stimuli are capable of having significant effects on the users' perception of temperature, and highlights a possible limitation in the current augmented reality technology in that no secondary effects on the users' perception of duration were observed.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

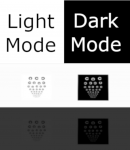

| Austin Erickson; Kangsoo Kim; Gerd Bruder; Greg Welch Effects of Dark Mode Graphics on Visual Acuity and Fatigue with Virtual Reality Head-Mounted Displays Proceedings Article In: Proceedings of IEEE International Conference on Virtual Reality and 3D User Interfaces (IEEE VR), pp. 434-442, Atlanta, Georgia, 2020. @inproceedings{Erickson2020,

title = {Effects of Dark Mode Graphics on Visual Acuity and Fatigue with Virtual Reality Head-Mounted Displays},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/02/VR2020_DarkMode2_0.pdf

https://www.youtube.com/watch?v=wePUk0xTLA0&t=5s, YouTube Presentation},

doi = {10.1109/VR46266.2020.00-40},

year = {2020},

date = {2020-03-23},

urldate = {2020-03-23},

booktitle = {Proceedings of IEEE International Conference on Virtual Reality and 3D User Interfaces (IEEE VR)},

pages = {434-442},

address = {Atlanta, Georgia},

abstract = {Current virtual reality (VR) head-mounted displays (HMDs) are characterized by a low angular resolution that makes it difficult to make out details, leading to reduced legibility of text and increased visual fatigue. Light-on-dark graphics modes, so-called ``dark mode'' graphics, are becoming more and more popular over a wide range of display technologies, and have been correlated with increased visual comfort and acuity, specifically when working in low-light environments, which suggests that they might provide significant advantages for VR HMDs.

In this paper, we present a human-subject study investigating the correlations between the color mode and the ambient lighting with respect to visual acuity and fatigue on VR HMDs.

We compare two color schemes, characterized by light letters on a dark background (dark mode), or dark letters on a light background (light mode), and show that the dark background in dark mode provides a significant advantage in terms of reduced visual fatigue and increased visual acuity in dim virtual environments on current HMDs. Based on our results, we discuss guidelines for user interfaces and applications.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

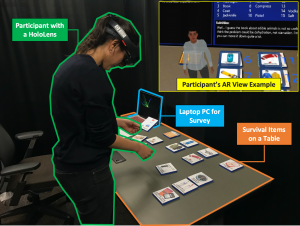

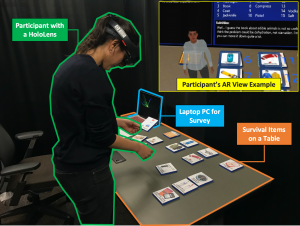

| Kangsoo Kim; Celso M. de Melo; Nahal Norouzi; Gerd Bruder; Gregory F. Welch Reducing Task Load with an Embodied Intelligent Virtual Assistant for Improved Performance in Collaborative Decision Making Proceedings Article In: Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), pp. 529-538, Atlanta, Georgia, 2020. @inproceedings{Kim2020rtl,

title = {Reducing Task Load with an Embodied Intelligent Virtual Assistant for Improved Performance in Collaborative Decision Making},

author = {Kangsoo Kim and Celso M. de Melo and Nahal Norouzi and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/02/IEEEVR2020_ARDesertSurvival.pdf

https://www.youtube.com/watch?v=G_iZ_asjp3I&t=6s, YouTube Presentation},

doi = {10.1109/VR46266.2020.00-30},

year = {2020},

date = {2020-03-23},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR)},

pages = {529-538},

address = {Atlanta, Georgia},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

![[Poster] Applying Stress Management Techniques in Augmented Reality: Stress Induction and Reduction in Healthcare Providers during Virtual Triage Simulation](https://sreal.ucf.edu/wp-content/uploads/2020/07/Annotation-2020-07-28-132554-300x124.jpg) | Jacob Stuart; Ileri Akinnola; Frank Guido-Sanz; Mindi Anderson; Desiree Diaz; Greg Welch; Benjamin Lok [Poster] Applying Stress Management Techniques in Augmented Reality: Stress Induction and Reduction in Healthcare Providers during Virtual Triage Simulation Proceedings Article In: Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 171-172, 2020. @inproceedings{Stuart2019asm,

title = {[Poster] Applying Stress Management Techniques in Augmented Reality: Stress Induction and Reduction in Healthcare Providers during Virtual Triage Simulation},

author = {Jacob Stuart and Ileri Akinnola and Frank Guido-Sanz and Mindi Anderson and Desiree Diaz and Greg Welch and Benjamin Lok},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/07/09090656.pdf},

doi = {10.1109/VRW50115.2020.00037},

year = {2020},

date = {2020-03-22},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages = {171-172},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

![[Tutorial] Developing Embodied Interactive Virtual Characters for Human-Subjects Studies](https://sreal.ucf.edu/wp-content/uploads/2020/04/IEEEVR2020Tutorial-300x176.png) | Kangsoo Kim; Nahal Norouzi; Austin Erickson [Tutorial] Developing Embodied Interactive Virtual Characters for Human-Subjects Studies Presentation 22.03.2020. @misc{Kim2020dei,

title = {[Tutorial] Developing Embodied Interactive Virtual Characters for Human-Subjects Studies},

author = {Kangsoo Kim and Nahal Norouzi and Austin Erickson},

url = {https://www.youtube.com/watch?v=UgT_-LVrQlc&list=PLMvKdHzC3SyacMfUj3qqd-pIjKmjtmwnz

https://sreal.ucf.edu/ieee-vr-2020-tutorial-developing-embodied-interactive-virtual-characters-for-human-subjects-studies/},

year = {2020},

date = {2020-03-22},

urldate = {2020-03-22},

booktitle = {IEEE International Conference on Virtual Reality and 3D User Interfaces (IEEE VR)},

keywords = {},

pubstate = {published},

tppubtype = {presentation}

}

|

| Nahal Norouzi Augmented Reality Animals: Are They Our Future Companions? Presentation 22.03.2020, (IEEE VR 2020 Doctoral Consortium). @misc{Norouzi2020,

title = {Augmented Reality Animals: Are They Our Future Companions?},

author = {Nahal Norouzi},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/03/vr20c-sub1054-cam-i5.pdf},

year = {2020},

date = {2020-03-22},

abstract = {Previous research in the field of human-animal interaction has captured the many benefits of this relationship for different aspects of human health. Existing restrictions on accompanying pets or other animals in some public spaces, allergies, and the inability to provide adequate care for animals limit the possible benefits of this relationship. However, the increased popularity of augmented reality and virtual reality devices, and the new social behaviors introduced by their use, offer the opportunity to use such platforms to realize virtual animals and to investigate their influence on human perception and behavior.

In this paper, two prior experiments are presented that were designed to provide a better understanding of the requirements for virtual animals as companions in augmented reality and to investigate some of their capabilities for providing support. Through these findings, future research directions are identified and discussed.

},

note = {IEEE VR 2020 Doctoral Consortium},

keywords = {},

pubstate = {published},

tppubtype = {presentation}

}


|

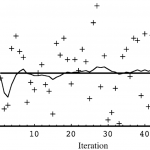

| Austin Erickson; Nahal Norouzi; Kangsoo Kim; Joseph J. LaViola Jr.; Gerd Bruder; Gregory F. Welch Effects of Depth Information on Visual Target Identification Task Performance in Shared Gaze Environments Journal Article In: IEEE Transactions on Visualization and Computer Graphics, vol. 26, no. 5, pp. 1934-1944, 2020, ISSN: 1077-2626, (Presented at IEEE VR 2020). @article{Erickson2020c,

title = {Effects of Depth Information on Visual Target Identification Task Performance in Shared Gaze Environments},

author = {Austin Erickson and Nahal Norouzi and Kangsoo Kim and Joseph J. LaViola Jr. and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/02/shared_gaze_2_FINAL.pdf

https://www.youtube.com/watch?v=JQO_iosY62Y&t=6s, YouTube Presentation},

doi = {10.1109/TVCG.2020.2973054},

issn = {1077-2626},

year = {2020},

date = {2020-02-13},

urldate = {2020-02-13},

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = {26},

number = {5},

pages = {1934-1944},

abstract = {Human gaze awareness is important for social and collaborative interactions. Recent technological advances in augmented reality (AR) displays and sensors provide us with the means to extend collaborative spaces with real-time dynamic AR indicators of one's gaze, for example via three-dimensional cursors or rays emanating from a partner's head. However, such gaze cues are only as useful as the quality of the underlying gaze estimation and the accuracy of the display mechanism. Depending on the type of visualization and the characteristics of the errors, AR gaze cues could either enhance or interfere with collaborations. In this paper, we present two human-subject studies in which we investigate the influence of angular and depth errors, target distance, and the type of gaze visualization on participants' performance and subjective evaluation during a collaborative task with a virtual human partner, where participants identified targets within a dynamically walking crowd. First, our results show a significant difference in performance between the two gaze visualizations, ray and cursor, in conditions with simulated angular and depth errors: the ray visualization provided significantly faster response times and fewer errors than the cursor visualization. Second, our results show that under optimal conditions, among four different gaze visualization methods, a ray without depth information provides the worst performance and is rated lowest, while a combination of a ray and cursor with depth information is rated highest. We discuss the subjective and objective performance thresholds and provide guidelines for practitioners in this field.},

note = {Presented at IEEE VR 2020},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|
