2024

|

| Matt Gottsacker; Hiroshi Furuya; Zubin Choudhary; Austin Erickson; Ryan Schubert; Gerd Bruder; Michael P. Browne; Gregory F. Welch Investigating the relationships between user behaviors and tracking factors on task performance and trust in augmented reality Journal Article In: Elsevier Computers & Graphics, vol. 123, pp. 1-14, 2024. @article{gottsacker2024trust,

title = {Investigating the relationships between user behaviors and tracking factors on task performance and trust in augmented reality},

author = {Matt Gottsacker and Hiroshi Furuya and Zubin Choudhary and Austin Erickson and Ryan Schubert and Gerd Bruder and Michael P. Browne and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/08/C_G____ARTrust____Accuracy___Precision.pdf},

doi = {10.1016/j.cag.2024.104035},

year = {2024},

date = {2024-08-06},

urldate = {2024-08-06},

journal = {Elsevier Computers & Graphics},

volume = {123},

pages = {1-14},

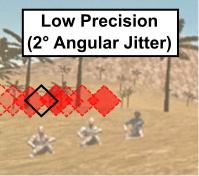

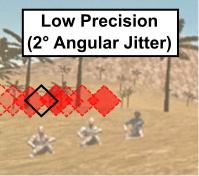

abstract = {This research paper explores the impact of augmented reality (AR) tracking characteristics, specifically an AR head-worn display's tracking registration accuracy and precision, on users' spatial abilities and subjective perceptions of trust in and reliance on the technology. Our study aims to clarify the relationships between user performance and the different behaviors users may employ based on varying degrees of trust in and reliance on AR. Our controlled experimental setup used a 360-degree field-of-regard search-and-selection task and combined the immersive aspects of a CAVE-like environment with AR overlays viewed with a head-worn display. We investigated three levels of simulated AR tracking errors in terms of both accuracy and precision (+0, +1, +2). We controlled for four user task behaviors that correspond to different levels of trust in and reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-Only (only relying on real world), and Real-First (prioritizing real world over AR). By controlling for these behaviors, our results showed that even small amounts of AR tracking errors had noticeable effects on users' task performance, especially if they relied completely on the AR cues (AR-Only). Our results link AR tracking characteristics with user behavior, highlighting the importance of understanding these elements to improve AR technology and user satisfaction.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Gerd Bruder; Michael Browne; Zubin Choudhary; Austin Erickson; Hiroshi Furuya; Matt Gottsacker; Ryan Schubert; Gregory Welch Visual Factors Influencing Trust and Reliance with Augmented Reality Systems Journal Article In: Journal of Vision Abstracts—Vision Sciences Society (VSS) Annual Meeting, 2024. @article{Bruder2024,

title = {Visual Factors Influencing Trust and Reliance with Augmented Reality Systems},

author = {Gerd Bruder and Michael Browne and Zubin Choudhary and Austin Erickson and Hiroshi Furuya and Matt Gottsacker and Ryan Schubert and Gregory Welch},

year = {2024},

date = {2024-05-17},

urldate = {2024-05-17},

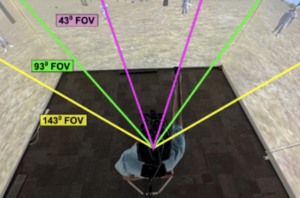

journal = {Journal of Vision Abstracts—Vision Sciences Society (VSS) Annual Meeting},

abstract = {Augmented Reality (AR) systems are increasingly used for simulations, training, and operations across a wide range of application fields. Unfortunately, the imagery that current AR systems create often does not match our visual perception of the real world, which can make users feel like the AR system is not believable. This lack of belief can lead to negative training or experiences, where users lose trust in the AR system and adjust their reliance on AR. The latter is characterized by users adopting different cognitive perception-action pathways by which they integrate AR visual information for spatial tasks. In this work, we present a series of six within-subjects experiments (each N=20) in which we investigated trust in AR with respect to two display factors (field of view and visual contrast), two tracking factors (accuracy and precision), and two network factors (latency and dropouts). Participants performed a 360-degree visual search-and-selection task in a hybrid setup involving an AR head-mounted display and a CAVE-like simulated real environment. Participants completed the experiments with four perception-action pathways that represent different levels of the users’ reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-First (prioritizing real world over AR), and Real-Only (only relying on real world). Our results show that participants’ perception-action pathways and objective task performance were significantly affected by all six tested AR factors. In contrast, we found that their subjective responses for trust and reliance were often more affected by slight AR system differences than would elicit objective performance differences, and participants tended to overestimate or underestimate the trustworthiness of the AR system. 
Participants showed significantly higher task performance gains if their sense of trust was well-calibrated to the trustworthiness of the AR system, highlighting the importance of effectively managing users’ trust in future AR systems.

Acknowledgements: This material includes work supported in part by Vision Products LLC via US Air Force Research Laboratory (AFRL) Award Number FA864922P1038, and the Office of Naval Research under Award Numbers N00014-21-1-2578 and N00014-21-1-2882 (Dr. Peter Squire, Code 34).},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

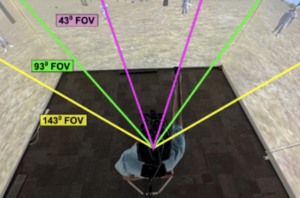

Augmented Reality (AR) systems are increasingly used for simulations, training, and operations across a wide range of application fields. Unfortunately, the imagery that current AR systems create often does not match our visual perception of the real world, which can make users feel like the AR system is not believable. This lack of belief can lead to negative training or experiences, where users lose trust in the AR system and adjust their reliance on AR. The latter is characterized by users adopting different cognitive perception-action pathways by which they integrate AR visual information for spatial tasks. In this work, we present a series of six within-subjects experiments (each N=20) in which we investigated trust in AR with respect to two display factors (field of view and visual contrast), two tracking factors (accuracy and precision), and two network factors (latency and dropouts). Participants performed a 360-degree visual search-and-selection task in a hybrid setup involving an AR head-mounted display and a CAVE-like simulated real environment. Participants completed the experiments with four perception-action pathways that represent different levels of the users’ reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-First (prioritizing real world over AR), and Real-Only (only relying on real world). Our results show that participants’ perception-action pathways and objective task performance were significantly affected by all six tested AR factors. In contrast, we found that their subjective responses for trust and reliance were often more affected by slight AR system differences than would elicit objective performance differences, and participants tended to overestimate or underestimate the trustworthiness of the AR system. 
Participants showed significantly higher task performance gains if their sense of trust was well-calibrated to the trustworthiness of the AR system, highlighting the importance of effectively managing users’ trust in future AR systems.

Acknowledgements: This material includes work supported in part by Vision Products LLC via US Air Force Research Laboratory (AFRL) Award Number FA864922P1038, and the Office of Naval Research under Award Numbers N00014-21-1-2578 and N00014-21-1-2882 (Dr. Peter Squire, Code 34). |

| Michael P. Browne; Gregory F. Welch; Gerd Bruder; Ryan Schubert Understanding the impact of trust on performance in a training system using augmented reality Proceedings Article In: Proceedings of SPIE Conference 13051: Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications VI, 2024. @inproceedings{Browne2024ut,

title = {Understanding the impact of trust on performance in a training system using augmented reality},

author = {Michael P. Browne and Gregory F. Welch and Gerd Bruder and Ryan Schubert},

year = {2024},

date = {2024-04-22},

urldate = {2024-04-22},

booktitle = {Proceedings of SPIE Conference 13051: Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications VI},

abstract = {The information presented by AR systems may not be 100% accurate, and anomalies like tracker errors, lack of opacity compared to the background and reduced field of view (FOV) can make users feel like an AR training system is not believable. This lack of belief can lead to negative training, where trainees adjust how they train due to flaws in the training system and are therefore less prepared for actual battlefield situations. We have completed an experiment to investigate trust, reliance, and human task performance in an augmented reality three-dimensional experimental scenario. Specifically, we used a methodology in which simulated real (complex) entities are supplemented by abstracted (basic) cues presented as overlays in an AR head mounted display (HMD) in a visual search and awareness task. We simulated properties of different AR displays to determine which of the properties most affect training efficacy. Results from our experiment will feed directly into the design of training systems that use AR/MR displays and will help increase the efficacy of training.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2023

|

| Ryan Schubert; Gerd Bruder; Gregory F. Welch Testbed for Intuitive Magnification in Augmented Reality Proceedings Article In: Proceedings IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pp. 1–2, 2023. @inproceedings{schubert2023tf,

title = {Testbed for Intuitive Magnification in Augmented Reality},

author = {Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/08/ismar23_schubert2023tf.pdf

https://sreal.ucf.edu/wp-content/uploads/2023/08/ismar23_schubert2023tf.mp4},

year = {2023},

date = {2023-10-16},

urldate = {2023-10-16},

booktitle = {Proceedings IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages = {1--2},

abstract = {Humans strive to magnify portions of our visually perceived surroundings for various reasons, e.g., because they are too far away or too small to see. Different technologies have been introduced for magnification, from monoculars to binoculars, and telescopes to microscopes. Modern high-resolution digital cameras are a promising technology: they are capable of optical or digital zoom and are very flexible, as their imagery can be presented to users in real time with mobile or head-mounted displays and intuitive 3D user interfaces allowing control over the magnification. In this demo, we present a novel design space and testbed for intuitive augmented reality (AR) magnifications, where an AR optical see-through head-mounted display is used for the presentation of real-time magnified camera imagery. The testbed includes different unimanual and bimanual AR interaction techniques for defining the scale factor and portion of the user's visual field that should be magnified.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Ryan Schubert; Gerd Bruder; Gregory F. Welch Intuitive User Interfaces for Real-Time Magnification in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST), pp. 1–10, 2023, (Best Paper Award). @inproceedings{schubert2023iu,

title = {Intuitive User Interfaces for Real-Time Magnification in Augmented Reality},

author = {Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/08/vrst23_bruder2023iu.pdf},

year = {2023},

date = {2023-10-09},

urldate = {2023-10-09},

booktitle = {Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST)},

pages = {1--10},

abstract = {Various reasons exist why humans desire to magnify portions of our visually perceived surroundings, e.g., because they are too far away or too small to see with the naked eye. Different technologies are used to facilitate magnification, from telescopes to microscopes using monocular or binocular designs. In particular, modern digital cameras capable of optical and/or digital zoom are very flexible as their high-resolution imagery can be presented to users in real-time with displays and interfaces allowing control over the magnification. In this paper, we present a novel design space of intuitive augmented reality (AR) magnifications where an AR head-mounted display is used for the presentation of real-time magnified camera imagery. We present a user study evaluating and comparing different visual presentation methods and AR interaction techniques. Our results show different advantages for unimanual, bimanual, and situated AR magnification window interfaces, near versus far vergence distances for the image presentation, and five different user interfaces for specifying the scaling factor of the imagery.},

note = {Best Paper Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Various reasons exist why humans desire to magnify portions of our visually perceived surroundings, e.g., because they are too far away or too small to see with the naked eye. Different technologies are used to facilitate magnification, from telescopes to microscopes using monocular or binocular designs. In particular, modern digital cameras capable of optical and/or digital zoom are very flexible as their high-resolution imagery can be presented to users in real-time with displays and interfaces allowing control over the magnification. In this paper, we present a novel design space of intuitive augmented reality (AR) magnifications where an AR head-mounted display is used for the presentation of real-time magnified camera imagery. We present a user study evaluating and comparing different visual presentation methods and AR interaction techniques. Our results show different advantages for unimanual, bimanual, and situated AR magnification window interfaces, near versus far vergence distances for the image presentation, and five different user interfaces for specifying the scaling factor of the imagery. |

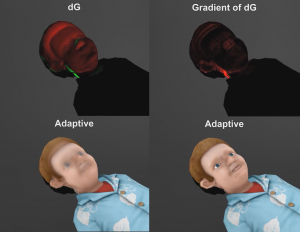

| Zubin Choudhary; Nahal Norouzi; Austin Erickson; Ryan Schubert; Gerd Bruder; Gregory F. Welch Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions Conference Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023, 2023. @conference{Choudhary2023,

title = {Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions},

author = {Zubin Choudhary and Nahal Norouzi and Austin Erickson and Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/01/PostReview_ConflictingEmotions_IEEEVR23-1.pdf},

year = {2023},

date = {2023-03-29},

urldate = {2023-03-29},

booktitle = {Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023},

abstract = {The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e., the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically, we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigate three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggests that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increases, the multimodal expression becomes less predictable. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness ratings and high trust; however, if one of the two expressions changes, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

2022

|

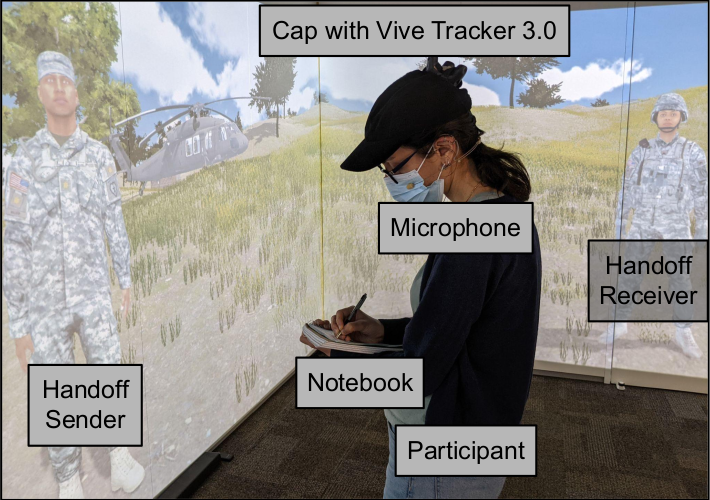

| Matt Gottsacker; Nahal Norouzi; Ryan Schubert; Frank Guido-Sanz; Gerd Bruder; Gregory F. Welch Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation Proceedings Article In: 28th ACM Symposium on Virtual Reality Software and Technology (VRST '22), pp. 1-10, 2022, ISBN: 978-1-4503-9889-3/22/11. @inproceedings{gottsacker2022noise,

title = {Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation},

author = {Matt Gottsacker and Nahal Norouzi and Ryan Schubert and Frank Guido-Sanz and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/main.pdf},

doi = {10.1145/3562939.3565627},

isbn = {978-1-4503-9889-3/22/11},

year = {2022},

date = {2022-10-27},

urldate = {2022-10-27},

booktitle = {28th ACM Symposium on Virtual Reality Software and Technology (VRST '22)},

pages = {1-10},

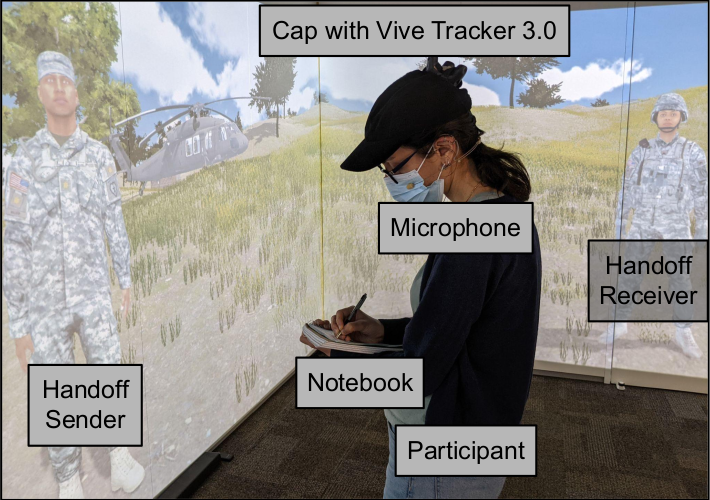

abstract = {When medical caregivers transfer patients to another person's care (a patient handoff), it is essential they effectively communicate the patient's condition to ensure the best possible health outcomes. Emergency situations caused by mass casualty events (e.g., natural disasters) introduce additional difficulties to handoff procedures such as environmental noise. We created a projected mixed reality simulation of a handoff scenario involving a medical evacuation by air and tested how low, medium, and high levels of helicopter noise affected participants' handoff experience, handoff performance, and behaviors. Through a human-subjects experimental design study (N = 21), we found that the addition of noise increased participants' subjective stress and task load, decreased their self-assessed and actual performance, and caused participants to speak louder. Participants also stood closer to the virtual human sending the handoff information when listening to the handoff than they stood to the receiver when relaying the handoff information. We discuss implications for the design of handoff training simulations and avenues for future handoff communication research.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

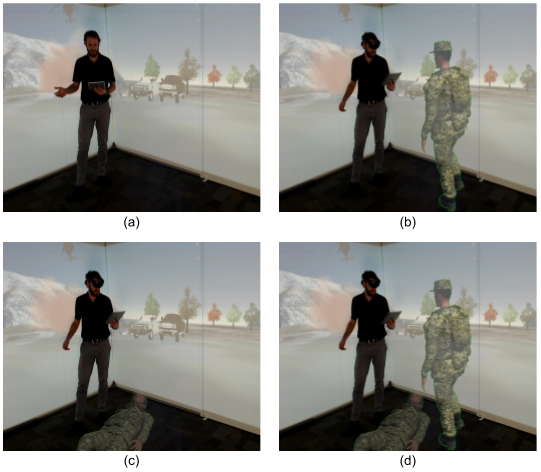

When medical caregivers transfer patients to another person's care (a patient handoff), it is essential they effectively communicate the patient's condition to ensure the best possible health outcomes. Emergency situations caused by mass casualty events (e.g., natural disasters) introduce additional difficulties to handoff procedures such as environmental noise. We created a projected mixed reality simulation of a handoff scenario involving a medical evacuation by air and tested how low, medium, and high levels of helicopter noise affected participants' handoff experience, handoff performance, and behaviors. Through a human-subjects experimental design study (N = 21), we found that the addition of noise increased participants' subjective stress and task load, decreased their self-assessed and actual performance, and caused participants to speak louder. Participants also stood closer to the virtual human sending the handoff information when listening to the handoff than they stood to the receiver when relaying the handoff information. We discuss implications for the design of handoff training simulations and avenues for future handoff communication research. |

2021

|

| Ryan Schubert; Gerd Bruder; Alyssa Tanaka; Francisco Guido-Sanz; Gregory F. Welch Mixed Reality Technology Capabilities for Combat-Casualty Handoff Training Proceedings Article In: Chen, Jessie Y. C.; Fragomeni, Gino (Ed.): International Conference on Human-Computer Interaction, pp. 695-711, Springer International Publishing, Cham, 2021, ISBN: 978-3-030-77599-5. @inproceedings{Schubert2021mixed,

title = {Mixed Reality Technology Capabilities for Combat-Casualty Handoff Training},

author = {Ryan Schubert and Gerd Bruder and Alyssa Tanaka and Francisco Guido-Sanz and Gregory F. Welch},

editor = {Jessie Y. C. Chen and Gino Fragomeni},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/07/Schubert2021_MixedRealityTechnologyCapabiliesForCombatCasualtyHandoffTraining-2.pdf},

doi = {10.1007/978-3-030-77599-5_47},

isbn = {978-3-030-77599-5},

year = {2021},

date = {2021-07-03},

booktitle = {International Conference on Human-Computer Interaction},

volume = {12770},

pages = {695-711},

publisher = {Springer International Publishing},

address = {Cham},

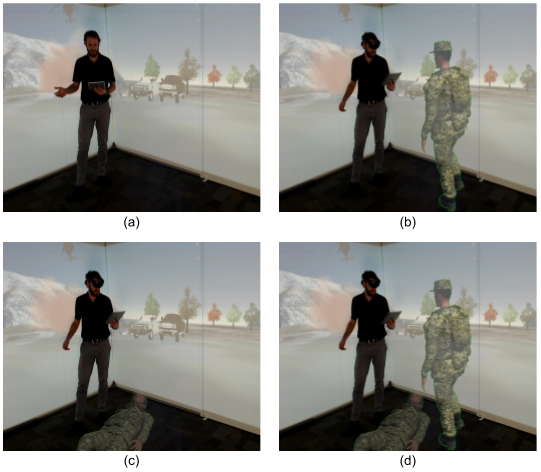

abstract = {Patient handoffs are a common, yet frequently error-prone occurrence, particularly in complex or challenging battlefield situations.

Specific protocols exist to help simplify and reinforce conveying of necessary information during a combat-casualty handoff, and training can both reinforce correct behavior and protocol usage while providing relatively safe initial exposure to many of the complexities and variabilities of real handoff situations, before a patient’s life is at stake. Here we discuss a variety of mixed reality capabilities and training contexts that can manipulate many of these handoff complexities in a controlled manner. We finally discuss some future human-subject user study design considerations, including aspects of handoff training, evaluation or improvement of a specific handoff protocol, and how the same technology could be leveraged for operational use.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Zubin Choudhary; Matt Gottsacker; Kangsoo Kim; Ryan Schubert; Jeanine Stefanucci; Gerd Bruder; Greg Welch Revisiting Distance Perception with Scaled Embodied Cues in Social Virtual Reality Proceedings Article In: IEEE Virtual Reality (VR), 2021, 2021. @inproceedings{Choudhary2021,

title = {Revisiting Distance Perception with Scaled Embodied Cues in Social Virtual Reality},

author = {Zubin Choudhary and Matt Gottsacker and Kangsoo Kim and Ryan Schubert and Jeanine Stefanucci and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/04/C2593-Revisiting-Distance-Perception-with-Scaled-Embodied-Cues-in-Social-Virtual-Reality-7.pdf},

year = {2021},

date = {2021-04-01},

publisher = {IEEE Virtual Reality (VR), 2021},

abstract = {Previous research on distance estimation in virtual reality (VR) has well established that even for geometrically accurate virtual objects and environments users tend to systematically misestimate distances. This has implications for Social VR, where it introduces variables in personal space and proxemics behavior that change social behaviors compared to the real world. One yet unexplored factor is related to the trend that avatars’ embodied cues in Social VR are often scaled, e.g., by making one’s head bigger or one’s voice louder, to make social cues more pronounced over longer distances.

In this paper we investigate how the perception of avatar distance is changed based on two means for scaling embodied social cues: visual head scale and verbal volume scale. We conducted a human subject study employing a mixed factorial design with two Social VR avatar representations (full-body, head-only) as a between factor as well as three visual head scales and three verbal volume scales (up-scaled, accurate, down-scaled) as within factors. For three distances from social to far-public space, we found that visual head scale had a significant effect on distance judgments and should be tuned for Social VR, while conflicting verbal volume scales did not, indicating that voices can be scaled in Social VR without immediate repercussions on spatial estimates. We discuss the interactions between the factors and implications for Social VR.

},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2020

|

| Gregory F Welch; Ryan Schubert; Gerd Bruder; Derrick P Stockdreher; Adam Casebolt Augmented Reality Promises Mentally and Physically Stressful Training in Real Places Journal Article In: IACLEA Campus Law Enforcement Journal, vol. 50, no. 5, pp. 47–50, 2020. @article{Welch2020aa,

title = {Augmented Reality Promises Mentally and Physically Stressful Training in Real Places},

author = {Gregory F Welch and Ryan Schubert and Gerd Bruder and Derrick P Stockdreher and Adam Casebolt},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/10/Welch2020aa.pdf},

year = {2020},

date = {2020-10-05},

journal = {IACLEA Campus Law Enforcement Journal},

volume = {50},

number = {5},

pages = {47--50},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

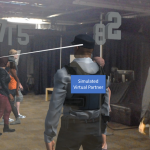

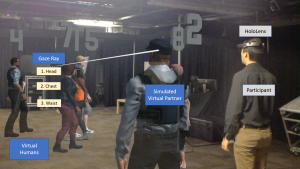

| Austin Erickson; Nahal Norouzi; Kangsoo Kim; Ryan Schubert; Jonathan Jules; Joseph J. LaViola Jr.; Gerd Bruder; Gregory F. Welch Sharing gaze rays for visual target identification tasks in collaborative augmented reality Journal Article In: Journal on Multimodal User Interfaces: Special Issue on Multimodal Interfaces and Communication Cues for Remote Collaboration, vol. 14, no. 4, pp. 353-371, 2020, ISSN: 1783-8738. @article{EricksonNorouzi2020,

title = {Sharing gaze rays for visual target identification tasks in collaborative augmented reality},

author = {Austin Erickson and Nahal Norouzi and Kangsoo Kim and Ryan Schubert and Jonathan Jules and Joseph J. LaViola Jr. and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/07/Erickson2020_Article_SharingGazeRaysForVisualTarget.pdf},

doi = {10.1007/s12193-020-00330-2},

issn = {1783-8738},

year = {2020},

date = {2020-07-09},

urldate = {2020-07-09},

journal = {Journal on Multimodal User Interfaces: Special Issue on Multimodal Interfaces and Communication Cues for Remote Collaboration},

volume = {14},

number = {4},

pages = {353-371},

abstract = {Augmented reality (AR) technologies provide a shared platform for users to collaborate in a physical context involving both real and virtual content. To enhance the quality of interaction between AR users, researchers have proposed augmenting users’ interpersonal space with embodied cues such as their gaze direction. While beneficial in achieving improved interpersonal spatial communication, such shared gaze environments suffer from multiple types of errors related to eye tracking and networking, that can reduce objective performance and subjective experience. In this paper, we present a human-subjects study to understand the impact of accuracy, precision, latency, and dropout based errors on users’ performance when using shared gaze cues to identify a target among a crowd of people. We simulated varying amounts of errors and the target distances and measured participants’ objective performance through their response time and error rate, and their subjective experience and cognitive load through questionnaires. We found significant differences suggesting that the simulated error levels had stronger effects on participants’ performance than target distance with accuracy and latency having a high impact on participants’ error rate. We also observed that participants assessed their own performance as lower than it objectively was. We discuss implications for practical shared gaze applications and we present a multi-user prototype system.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

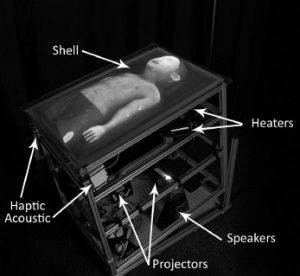

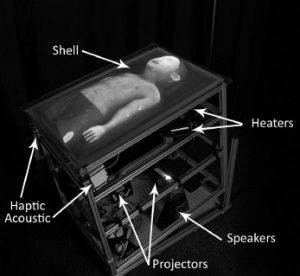

| Salam Daher; Jason Hochreiter; Ryan Schubert; Laura Gonzalez; Juan Cendan; Mindi Anderson; Desiree A Diaz; Gregory F. Welch The Physical-Virtual Patient Simulator: A Physical Human Form with Virtual Appearance and Behavior Journal Article In: Simulation in Healthcare, vol. 15, no. 2, pp. 115–121, 2020, (see erratum at DOI: 10.1097/SIH.0000000000000481). @article{Daher2020aa,

title = {The Physical-Virtual Patient Simulator: A Physical Human Form with Virtual Appearance and Behavior},

author = {Salam Daher and Jason Hochreiter and Ryan Schubert and Laura Gonzalez and Juan Cendan and Mindi Anderson and Desiree A Diaz and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/06/Daher2020aa1.pdf

https://journals.lww.com/simulationinhealthcare/Fulltext/2020/04000/The_Physical_Virtual_Patient_Simulator__A_Physical.9.aspx

https://journals.lww.com/simulationinhealthcare/Fulltext/2020/06000/Erratum_to_the_Physical_Virtual_Patient_Simulator_.12.aspx},

doi = {10.1097/SIH.0000000000000409},

year = {2020},

date = {2020-04-01},

journal = {Simulation in Healthcare},

volume = {15},

number = {2},

pages = {115--121},

note = {see erratum at DOI: 10.1097/SIH.0000000000000481},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

2019

|

| Nahal Norouzi; Austin Erickson; Kangsoo Kim; Ryan Schubert; Joseph J. LaViola Jr.; Gerd Bruder; Gregory F. Welch Effects of Shared Gaze Parameters on Visual Target Identification Task Performance in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Spatial User Interaction (SUI), pp. 12:1-12:11, ACM, 2019, ISBN: 978-1-4503-6975-6/19/10, (Best Paper Award). @inproceedings{Norouzi2019esg,

title = {Effects of Shared Gaze Parameters on Visual Target Identification Task Performance in Augmented Reality},

author = {Nahal Norouzi and Austin Erickson and Kangsoo Kim and Ryan Schubert and Joseph J. LaViola Jr. and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/a12-norouzi.pdf},

doi = {10.1145/3357251.3357587},

isbn = {978-1-4503-6975-6/19/10},

year = {2019},

date = {2019-10-19},

urldate = {2019-10-19},

booktitle = {Proceedings of the ACM Symposium on Spatial User Interaction (SUI)},

pages = {12:1-12:11},

publisher = {ACM},

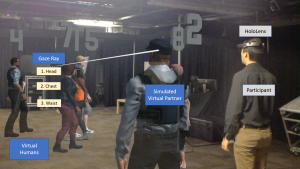

abstract = {Augmented reality (AR) technologies provide a shared platform for users to collaborate in a physical context involving both real and virtual content. To enhance the quality of interaction between AR users, researchers have proposed augmenting users' interpersonal space with embodied cues such as their gaze direction. While beneficial in achieving improved interpersonal spatial communication, such shared gaze environments suffer from multiple types of errors related to eye tracking and networking, that can reduce objective performance and subjective experience.

In this paper, we conducted a human-subject study to understand the impact of accuracy, precision, latency, and dropout based errors on users' performance when using shared gaze cues to identify a target among a crowd of people. We simulated varying amounts of errors and the target distances and measured participants' objective performance through their response time and error rate, and their subjective experience and cognitive load through questionnaires. We found some significant differences suggesting that the simulated error levels had stronger effects on participants' performance than target distance with accuracy and latency having a high impact on participants' error rate. We also observed that participants assessed their own performance as lower than it objectively was, and we discuss implications for practical shared gaze applications.},

note = {Best Paper Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Kendra Richards; Nikhil Mahalanobis; Kangsoo Kim; Ryan Schubert; Myungho Lee; Salam Daher; Nahal Norouzi; Jason Hochreiter; Gerd Bruder; Gregory F. Welch Analysis of Peripheral Vision and Vibrotactile Feedback During Proximal Search Tasks with Dynamic Virtual Entities in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Spatial User Interaction (SUI), pp. 3:1-3:9, ACM, 2019, ISBN: 978-1-4503-6975-6/19/10. @inproceedings{Richards2019b,

title = {Analysis of Peripheral Vision and Vibrotactile Feedback During Proximal Search Tasks with Dynamic Virtual Entities in Augmented Reality},

author = {Kendra Richards and Nikhil Mahalanobis and Kangsoo Kim and Ryan Schubert and Myungho Lee and Salam Daher and Nahal Norouzi and Jason Hochreiter and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/Richards2019b.pdf},

doi = {10.1145/3357251.3357585},

isbn = {978-1-4503-6975-6/19/10},

year = {2019},

date = {2019-10-19},

booktitle = {Proceedings of the ACM Symposium on Spatial User Interaction (SUI)},

pages = {3:1-3:9},

publisher = {ACM},

abstract = {A primary goal of augmented reality (AR) is to seamlessly embed virtual content into a real environment. There are many factors that can affect the perceived physicality and co-presence of virtual entities, including the hardware capabilities, the fidelity of the virtual behaviors, and sensory feedback associated with the interactions. In this paper, we present a study investigating participants' perceptions and behaviors during a time-limited search task in close proximity with virtual entities in AR. In particular, we analyze the effects of (i) visual conflicts in the periphery of an optical see-through head-mounted display, a Microsoft HoloLens, (ii) overall lighting in the physical environment, and (iii) multimodal feedback based on vibrotactile transducers mounted on a physical platform. Our results show significant benefits of vibrotactile feedback and reduced peripheral lighting for spatial and social presence, and engagement. We discuss implications of these effects for AR applications.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Austin Erickson; Ryan Schubert; Kangsoo Kim; Gerd Bruder; Greg Welch Is It Cold in Here or Is It Just Me? Analysis of Augmented Reality Temperature Visualization for Computer-Mediated Thermoception Proceedings Article In: Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 319-327, IEEE, 2019, ISBN: 978-1-7281-4765-9. @inproceedings{Erickson2019iic,

title = {Is It Cold in Here or Is It Just Me? Analysis of Augmented Reality Temperature Visualization for Computer-Mediated Thermoception},

author = {Austin Erickson and Ryan Schubert and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/Erickson2019IIC.pdf},

doi = {10.1109/ISMAR.2019.00046},

isbn = {978-1-7281-4765-9},

year = {2019},

date = {2019-10-19},

urldate = {2019-10-19},

booktitle = {Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages = {319-327},

publisher = {IEEE},

abstract = {Modern augmented reality (AR) head-mounted displays comprise a multitude of sensors that allow them to sense the environment around them. We have extended these capabilities by mounting two heat-wavelength infrared cameras to a Microsoft HoloLens, facilitating the acquisition of thermal data and enabling stereoscopic thermal overlays in the user’s augmented view. The ability to visualize live thermal information opens several avenues of investigation on how that thermal awareness may affect a user’s thermoception. We present a human-subject study, in which we simulated different temperature shifts using either heat vision overlays or 3D AR virtual effects associated with thermal cause-effect relationships (e.g., flames burn and ice cools). We further investigated differences in estimated temperatures when the stimuli were applied to either the user’s body or their environment. Our analysis showed significant effects and first trends for the AR virtual effects and heat vision, respectively, on participants’ temperature estimates for their body and the environment though with different strengths and characteristics, which we discuss in this paper. },

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

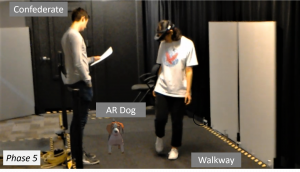

| Nahal Norouzi; Kangsoo Kim; Myungho Lee; Ryan Schubert; Austin Erickson; Jeremy Bailenson; Gerd Bruder; Greg Welch Walking Your Virtual Dog: Analysis of Awareness and Proxemics with Simulated Support Animals in Augmented Reality Proceedings Article In: Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2019, pp. 253-264, IEEE, 2019, ISBN: 978-1-7281-4765-9. @inproceedings{Norouzi2019cb,

title = {Walking Your Virtual Dog: Analysis of Awareness and Proxemics with Simulated Support Animals in Augmented Reality},

author = {Nahal Norouzi and Kangsoo Kim and Myungho Lee and Ryan Schubert and Austin Erickson and Jeremy Bailenson and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/Final__AR_Animal_ISMAR.pdf},

doi = {10.1109/ISMAR.2019.00040},

isbn = {978-1-7281-4765-9},

year = {2019},

date = {2019-10-16},

urldate = {2019-10-16},

booktitle = {Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2019},

pages = {253-264},

publisher = {IEEE},

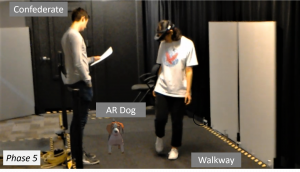

abstract = {Domestic animals have a long history of enriching human lives physically and mentally by filling a variety of different roles, such as service animals, emotional support animals, companions, and pets. Despite this, technological realizations of such animals in augmented reality (AR) are largely underexplored in terms of their behavior and interactions as well as effects they might have on human users' perception or behavior. In this paper, we describe a simulated virtual companion animal, in the form of a dog, in a shared AR space. We investigated its effects on participants' perception and behavior, including locomotion related to proxemics, with respect to their AR dog and other real people in the environment. We conducted a 2 by 2 mixed factorial human-subject study, in which we varied (i) the AR dog's awareness and behavior with respect to other people in the physical environment and (ii) the awareness and behavior of those people with respect to the AR dog. Our results show that having an AR companion dog changes participants' locomotion behavior, proxemics, and social interaction with other people who can or can not see the AR dog. We also show that the AR dog's simulated awareness and behaviors have an impact on participants' perception, including co-presence, animalism, perceived physicality, and dog's perceived awareness of the participant and environment. We discuss our findings and present insights and implications for the realization of effective AR animal companions.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Gregory F. Welch; Gerd Bruder; Peter Squire; Ryan Schubert Anticipating Widespread Augmented Reality: Insights from the 2018 AR Visioning Workshop Technical Report University of Central Florida and Office of Naval Research no. 786, 2019. @techreport{Welch2019b,

title = {Anticipating Widespread Augmented Reality: Insights from the 2018 AR Visioning Workshop},

author = {Gregory F. Welch and Gerd Bruder and Peter Squire and Ryan Schubert},

url = {https://stars.library.ucf.edu/ucfscholar/786/

https://sreal.ucf.edu/wp-content/uploads/2019/08/Welch2019b-1.pdf},

year = {2019},

date = {2019-08-06},

issuetitle = {Faculty Scholarship and Creative Works},

number = {786},

institution = {University of Central Florida and Office of Naval Research},

abstract = {In August of 2018 a group of academic, government, and industry experts in the field of Augmented Reality gathered for four days to consider potential technological and societal issues and opportunities that could accompany a future where AR is pervasive in location and duration of use. This report is intended to summarize some of the most novel and potentially impactful insights and opportunities identified by the group.

Our target audience includes AR researchers, government leaders, and thought leaders in general. It is our intent to share some compelling technological and societal questions that we believe are unique to AR, and to engender new thinking about the potentially impactful synergies associated with the convergence of AR and some other conventionally distinct areas of research.},

keywords = {},

pubstate = {published},

tppubtype = {techreport}

}

|

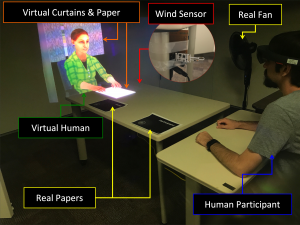

| Kangsoo Kim; Ryan Schubert; Jason Hochreiter; Gerd Bruder; Gregory Welch Blowing in the Wind: Increasing Social Presence with a Virtual Human via Environmental Airflow Interaction in Mixed Reality Journal Article In: Elsevier Computers and Graphics, vol. 83, no. October 2019, pp. 23-32, 2019. @article{Kim2019blow,

title = {Blowing in the Wind: Increasing Social Presence with a Virtual Human via Environmental Airflow Interaction in Mixed Reality},

author = {Kangsoo Kim and Ryan Schubert and Jason Hochreiter and Gerd Bruder and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/06/ELSEVIER_C_G2019_Special_BlowWindinMR_ICAT_EGVE2018_20190606_reduced.pdf},

doi = {10.1016/j.cag.2019.06.006},

year = {2019},

date = {2019-07-05},

journal = {Elsevier Computers and Graphics},

volume = {83},

number = {October 2019},

pages = {23-32},

abstract = {In this paper, we describe two human-subject studies in which we explored and investigated the effects of subtle multimodal interaction on social presence with a virtual human (VH) in mixed reality (MR). In the studies, participants interacted with a VH, which was co-located with them across a table, with two different platforms: a projection based MR environment and an optical see-through head-mounted display (OST-HMD) based MR environment. While the two studies were not intended to be directly comparable, the second study with an OST-HMD was carefully designed based on the insights and lessons learned from the first projection-based study. For both studies, we compared two levels of gradually increased multimodal interaction: (i) virtual objects being affected by real airflow (e.g., as commonly experienced with fans during warm weather), and (ii) a VH showing awareness of this airflow. We hypothesized that our two levels of treatment would increase the sense of being together with the VH gradually, i.e., participants would report higher social presence with airflow influence than without it, and the social presence would be even higher when the VH showed awareness of the airflow. We observed an increased social presence in the second study when both physical–virtual interaction via airflow and VH awareness behaviors were present, but we observed no clear difference in participant-reported social presence with the VH in the first study. As the considered environmental factors are incidental to the direct interaction with the real human, i.e., they are not significant or necessary for the interaction task, they can provide a reasonably generalizable approach to increase social presence in HMD-based MR environments beyond the specific scenario and environment described here.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

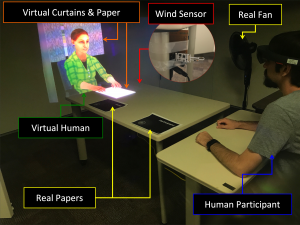

|

![[POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents](https://sreal.ucf.edu/wp-content/uploads/2019/03/ieeevr_poster_thumbnail-1.png) | Salam Daher; Jason Hochreiter; Nahal Norouzi; Ryan Schubert; Gerd Bruder; Laura Gonzalez; Mindi Anderson; Desiree Diaz; Juan Cendan; Greg Welch [POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents Proceedings Article In: Proceedings of IEEE Virtual Reality (VR), 2019, 2019. @inproceedings{daher2019matching,

title = {[POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents},

author = {Salam Daher and Jason Hochreiter and Nahal Norouzi and Ryan Schubert and Gerd Bruder and Laura Gonzalez and Mindi Anderson and Desiree Diaz and Juan Cendan and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/03/IEEEVR2019_Poster_PVChildStudy.pdf},

year = {2019},

date = {2019-03-27},

publisher = {Proceedings of IEEE Virtual Reality (VR), 2019},

abstract = {Embodied virtual agents serving as patient simulators are widely used in medical training scenarios, ranging from physical patients to virtual patients presented via virtual and augmented reality technologies. Physical-virtual patients are a hybrid solution that combines the benefits of dynamic visuals integrated into a human-shaped physical form that can also present other cues, such as pulse, breathing sounds, and temperature. Sometimes in simulation the visuals and shape do not match. We carried out a human-participant study employing graduate nursing students in pediatric patient simulations comprising conditions associated with matching/non-matching of the visuals and shape.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2018

|

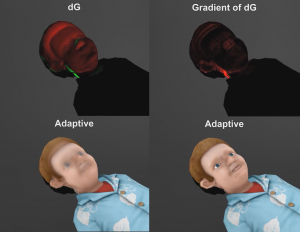

| Ryan Schubert; Gerd Bruder; Greg Welch Adaptive filtering of physical-virtual artifacts for synthetic animatronics Proceedings Article In: Bruder, G.; Cobb, S.; Yoshimoto, S. (Ed.): ICAT-EGVE 2018 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, Limassol, Cyprus, November 7-9 2018, 2018. @inproceedings{Schubert2018,

title = {Adaptive filtering of physical-virtual artifacts for synthetic animatronics},

author = {Ryan Schubert and Gerd Bruder and Greg Welch},

editor = {G. Bruder and S. Cobb and S. Yoshimoto},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/01/Schubert2018.pdf},

year = {2018},

date = {2018-11-07},

booktitle = {ICAT-EGVE 2018 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, Limassol, Cyprus, November 7-9 2018},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

![[POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents](https://sreal.ucf.edu/wp-content/uploads/2019/03/ieeevr_poster_thumbnail-1.png)