2023

|

| Zubin Choudhary; Nahal Norouzi; Austin Erickson; Ryan Schubert; Gerd Bruder; Gregory F. Welch Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions Conference Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023, 2023. @conference{Choudhary2023,

title = {Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions},

author = {Zubin Choudhary and Nahal Norouzi and Austin Erickson and Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/01/PostReview_ConflictingEmotions_IEEEVR23-1.pdf},

year = {2023},

date = {2023-03-29},

urldate = {2023-03-29},

booktitle = {Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023},

abstract = {The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e., the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically, we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigate three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggests that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increases, the multimodal expression becomes less predictable. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness rating and high trust; however, if one of the two expressions changes, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

| Kangsoo Kim; Nahal Norouzi; Dongsik Jo; Gerd Bruder; Greg Welch The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality Book Chapter In: Nee, Andrew Yeh Ching; Ong, Soh Khim (Ed.): Springer Handbook of Augmented Reality, pp. 797–829, Springer International Publishing, Cham, 2023, ISBN: 978-3-030-67822-7. @inbook{Kim2023aa,

title = {The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality},

author = {Kangsoo Kim and Nahal Norouzi and Dongsik Jo and Gerd Bruder and Greg Welch},

editor = {Andrew Yeh Ching Nee and Soh Khim Ong},

url = {https://doi.org/10.1007/978-3-030-67822-7_32},

doi = {10.1007/978-3-030-67822-7_32},

isbn = {978-3-030-67822-7},

year = {2023},

date = {2023-01-01},

urldate = {2023-01-01},

booktitle = {Springer Handbook of Augmented Reality},

pages = {797--829},

publisher = {Springer International Publishing},

address = {Cham},

abstract = {Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, augmented/mixed reality (AR/MR), which combines virtual content with the real environment, is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced artificial intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives.},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

|

2022

|

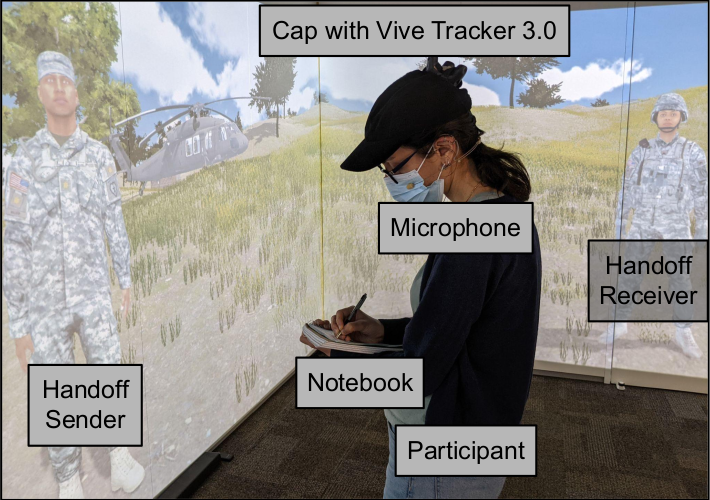

| Matt Gottsacker; Nahal Norouzi; Ryan Schubert; Frank Guido-Sanz; Gerd Bruder; Gregory F. Welch Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation Proceedings Article In: 28th ACM Symposium on Virtual Reality Software and Technology (VRST '22), pp. 1-10, 2022, ISBN: 978-1-4503-9889-3/22/11. @inproceedings{gottsacker2022noise,

title = {Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation},

author = {Matt Gottsacker and Nahal Norouzi and Ryan Schubert and Frank Guido-Sanz and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/main.pdf},

doi = {10.1145/3562939.3565627},

isbn = {978-1-4503-9889-3/22/11},

year = {2022},

date = {2022-10-27},

urldate = {2022-10-27},

booktitle = {28th ACM Symposium on Virtual Reality Software and Technology (VRST '22)},

pages = {1-10},

abstract = {When medical caregivers transfer patients to another person's care (a patient handoff), it is essential they effectively communicate the patient's condition to ensure the best possible health outcomes. Emergency situations caused by mass casualty events (e.g., natural disasters) introduce additional difficulties to handoff procedures such as environmental noise. We created a projected mixed reality simulation of a handoff scenario involving a medical evacuation by air and tested how low, medium, and high levels of helicopter noise affected participants' handoff experience, handoff performance, and behaviors. Through a human-subjects experimental design study (N = 21), we found that the addition of noise increased participants' subjective stress and task load, decreased their self-assessed and actual performance, and caused participants to speak louder. Participants also stood closer to the virtual human sending the handoff information when listening to the handoff than they stood to the receiver when relaying the handoff information. We discuss implications for the design of handoff training simulations and avenues for future handoff communication research.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

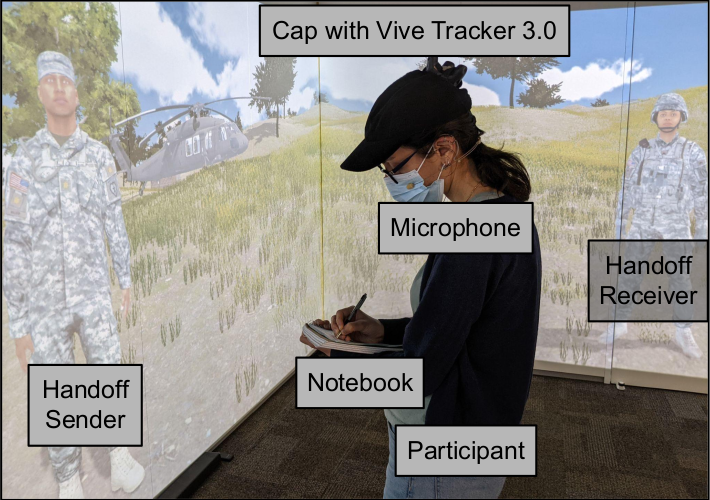

|

| Nahal Norouzi; Matthew Gottsacker; Gerd Bruder; Pamela Wisniewski; Jeremy Bailenson; Greg Welch Virtual Humans with Pets and Robots: Exploring the Influence of Social Priming on One’s Perception of a Virtual Human Proceedings Article In: Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), Christchurch, New Zealand, 2022, pp. 10, IEEE, 2022. @inproceedings{Norouzi2022,

title = {Virtual Humans with Pets and Robots: Exploring the Influence of Social Priming on One’s Perception of a Virtual Human},

author = {Nahal Norouzi and Matthew Gottsacker and Gerd Bruder and Pamela Wisniewski and Jeremy Bailenson and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/01/2022007720.pdf},

year = {2022},

date = {2022-03-16},

urldate = {2022-03-16},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), Christchurch, New Zealand, 2022},

pages = {10},

publisher = {IEEE},

abstract = {Social priming is the idea that observations of a virtual human (VH) engaged in short social interactions with a real or virtual human bystander can positively influence users’ subsequent interactions with that VH. In this paper, we investigate the question of whether the positive effects of social priming are limited to interactions with humanoid entities. For instance, virtual dogs offer an attractive candidate for non-humanoid entities, as previous research suggests multiple positive effects. In particular, real human dog owners receive more positive attention from strangers than non-dog owners. To examine the influence of such social priming, we carried out a human-subjects experiment with four conditions: three social priming conditions where a participant initially observed a VH interacting with one of three virtual entities (another VH, a virtual pet dog, or a virtual personal robot), and a non-social priming condition where a VH (alone) was intently looking at her phone as if reading something. We recruited 24 participants and conducted a mixed-methods analysis. We found that a VH’s prior social interactions with another VH and a virtual dog significantly increased participants’ perceptions of the VHs’ affective attraction. Also, participants felt more inclined to interact with the VH in the future in all of the social priming conditions. Qualitatively, we found that the social priming conditions resulted in a more positive user experience than the non-social priming condition. Also, the virtual dog and the virtual robot were perceived as a source of positive surprise, with participants appreciating the non-humanoid interactions for various reasons, such as the avoidance of social anxieties sometimes associated with humans.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Jesus Ugarte; Nahal Norouzi; Austin Erickson; Gerd Bruder; Greg Welch Distant Hand Interaction Framework in Augmented Reality Proceedings Article In: Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 962-963, IEEE, Christchurch, New Zealand, 2022. @inproceedings{Ugarte2022,

title = {Distant Hand Interaction Framework in Augmented Reality},

author = {Jesus Ugarte and Nahal Norouzi and Austin Erickson and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/05/Distant_Hand_Interaction_Framework_in_Augmented_Reality.pdf},

doi = {10.1109/VRW55335.2022.00332},

year = {2022},

date = {2022-03-16},

urldate = {2022-03-16},

booktitle = {Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages = {962-963},

publisher = {IEEE},

address = {Christchurch, New Zealand},

organization = {IEEE},

series = {2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

abstract = {Recent augmented reality (AR) head-mounted displays support shared experiences among multiple users in real physical spaces. While previous research looked at different embodied methods to enhance interpersonal communication cues, so far, less research looked at distant interaction in AR and, in particular, distant hand communication, which can open up new possibilities for scenarios, such as large-group collaboration. In this demonstration, we present a research framework for distant hand interaction in AR, including mapping techniques and visualizations. Our techniques are inspired by virtual reality (VR) distant hand interactions, but had to be adjusted due to the different context in AR and limited knowledge about the physical environment. We discuss different techniques for hand communication, including deictic pointing at a distance, distant drawing in AR, and distant communication through symbolic hand gestures.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2021

|

| Nahal Norouzi The Social and Behavioral Influences of Interactions with Virtual Dogs as Embodied Agents in Augmented and Virtual Reality PhD Thesis 2021. @phdthesis{Norouzi2021c,

title = {The Social and Behavioral Influences of Interactions with Virtual Dogs as Embodied Agents in Augmented and Virtual Reality},

author = {Nahal Norouzi},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/04/The-Social-and-Behavioral-Influences-of-Interactions-with-Virtual.pdf},

year = {2021},

date = {2021-12-18},

urldate = {2021-12-18},

abstract = {Intelligent virtual agents (IVAs) have been researched for years and recently many of these IVAs have become commercialized and widely used by many individuals as intelligent personal assistants. The majority of these IVAs are anthropomorphic, and many are developed to resemble real humans entirely. However, real humans do not interact only with other humans in the real world, and many benefit from interactions with non-human entities. A prime example is human interactions with animals, such as dogs. Humans and dogs share a historical bond that goes back thousands of years. In the past 30 years, there has been a great deal of research to understand the effects of human-dog interaction, with research findings pointing towards the physical, mental, and social benefits to humans when interacting with dogs. However, limitations such as allergies, stress on dogs, and hygiene issues restrict some needy individuals from receiving such benefits. More recently, advances in augmented and virtual reality technology provide opportunities for realizing virtual dogs and animals, allowing for their three-dimensional presence in the users' real physical environment or while users are immersed in virtual worlds. In this dissertation, I utilize the findings from human-dog interaction research and conduct a systematic literature review on embodied IVAs to define a research scope to understand virtual dogs' social and behavioral influences in augmented and virtual reality. I present the findings of this systematic literature review that informed the creation of the research scope and four human-subjects studies. Through these user studies, I found that virtual dogs bring about a sense of comfort and companionship for users in different contexts. In addition, their responsiveness plays an important role in enhancing users' quality of experience, and they can be effectively utilized as attention guidance mechanisms and social priming stimuli.},

keywords = {},

pubstate = {published},

tppubtype = {phdthesis}

}

|

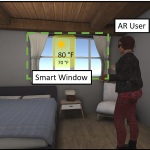

| Connor D. Flick; Courtney J. Harris; Nikolas T. Yonkers; Nahal Norouzi; Austin Erickson; Zubin Choudhary; Matt Gottsacker; Gerd Bruder; Gregory F. Welch Trade-offs in Augmented Reality User Interfaces for Controlling a Smart Environment Proceedings Article In: Symposium on Spatial User Interaction (SUI '21), pp. 1-11, Association for Computing Machinery, New York, NY, USA, 2021. @inproceedings{Flick2021,

title = {Trade-offs in Augmented Reality User Interfaces for Controlling a Smart Environment},

author = {Connor D. Flick and Courtney J. Harris and Nikolas T. Yonkers and Nahal Norouzi and Austin Erickson and Zubin Choudhary and Matt Gottsacker and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/09/SUI2021_REU_Paper.pdf},

year = {2021},

date = {2021-11-09},

urldate = {2021-11-09},

booktitle = {Symposium on Spatial User Interaction (SUI '21)},

pages = {1-11},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

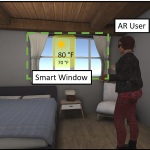

abstract = {Smart devices and Internet of Things (IoT) technologies are replacing or being incorporated into traditional devices at a growing pace. The use of digital interfaces to interact with these devices has become a common occurrence in homes, work spaces, and various industries around the world. The most common interfaces for these connected devices focus on mobile apps or voice control via intelligent virtual assistants. However, with augmented reality (AR) becoming more popular and accessible among consumers, there are new opportunities for spatial user interfaces to seamlessly bridge the gap between digital and physical affordances.

In this paper, we present a human-subject study evaluating and comparing four user interfaces for smart connected environments: gaze input, hand gestures, voice input, and a mobile app. We assessed participants’ user experience, usability, task load, completion time, and preferences. Our results show multiple trade-offs between these interfaces across these measures. In particular, we found that gaze input shows great potential for future use cases, while both gaze input and hand gestures suffer from limited familiarity among users, compared to voice input and mobile apps.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

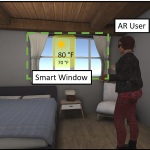

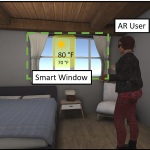

|

| Matt Gottsacker; Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Gregory F. Welch Diegetic Representations for Seamless Cross-Reality Interruptions Conference Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2021. @conference{Gottsacker2021,

title = {Diegetic Representations for Seamless Cross-Reality Interruptions},

author = {Matt Gottsacker and Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/08/ISMAR_2021_Paper__Interruptions_.pdf},

year = {2021},

date = {2021-10-15},

urldate = {2021-10-15},

booktitle = {Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages = {10},

abstract = {Due to the closed design of modern virtual reality (VR) head-mounted displays (HMDs), users tend to lose awareness of their real-world surroundings. This is particularly challenging when another person in the same physical space needs to interrupt the VR user for a brief conversation. Such interruptions, e.g., tapping a VR user on the shoulder, can cause a disruptive break in presence (BIP), which affects their place and plausibility illusions, and may cause a drop in performance of their virtual activity. Recent findings related to the concept of diegesis, which denotes the internal consistency of an experience/story, suggest potential benefits of integrating registered virtual representations for physical interactors, especially when these appear internally consistent in VR. In this paper, we present a human-subject study we conducted to compare and evaluate five different diegetic and non-diegetic methods to facilitate cross-reality interruptions in a virtual office environment, where a user’s task was briefly interrupted by a physical person. We created a Cross-Reality Interaction Questionnaire (CRIQ) to capture the quality of the interaction from the VR user’s perspective. Our results show that the diegetic representations afforded the highest quality interactions, the highest place illusions, and caused the least disruption of the participants’ virtual experiences. We found reasonably high senses of co-presence with the partially and fully diegetic virtual representations. We discuss our findings as well as implications for practical applications that aim to leverage virtual representations to ease cross-reality interruptions.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

| Kangsoo Kim; Nahal Norouzi; Dongsik Jo; Gerd Bruder; Gregory F. Welch The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality Book Chapter Forthcoming In: Nee, A. Y. C.; Ong, S. K. (Ed.): vol. Handbook of Augmented Reality, pp. 60, Springer, Forthcoming. @inbook{Kim2021,

title = {The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality},

author = {Kangsoo Kim and Nahal Norouzi and Dongsik Jo and Gerd Bruder and Gregory F. Welch},

editor = {A. Y. C. Nee and S. K. Ong},

year = {2021},

date = {2021-09-01},

urldate = {2021-09-01},

volume = {Handbook of Augmented Reality},

pages = {60},

publisher = {Springer},

abstract = {Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, Augmented/Mixed Reality (AR/MR) combines virtual content with the real environment and is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced Artificial Intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives. In this chapter, we describe the concept of transreality that symbiotically connects the physical and the virtual worlds incorporating the aforementioned advanced technologies, and illustrate how such transreality environments can transform our activities in them, providing intelligent and intuitive interaction with the environment while exploring prior research literature in this domain. We also present the potential of virtually embodied interactions (e.g., employing virtual avatars and agents) in highly connected transreality spaces for enhancing human abilities and perception. Recent ongoing research focusing on the effects of embodied interaction is described and discussed in different aspects such as perceptual, cognitive, and social contexts. The chapter will end with discussions on potential research directions in the future and implications related to the user experience in transreality.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inbook}

}

Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, Augmented/Mixed Reality (AR/MR) combines virtual content with the real environment and is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced Artificial Intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives. In this chapter, we describe the concept of transreality that symbiotically connects the physical and the virtual worlds incorporating the aforementioned advanced technologies, and illustrate how such transreality environments can transform our activities in them, providing intelligent and intuitive interaction with the environment while exploring prior research literature in this domain. We also present the potential of virtually embodied interactions (e.g., employing virtual avatars and agents) in highly connected transreality spaces for enhancing human abilities and perception. Recent ongoing research focusing on the effects of embodied interaction is described and discussed in different aspects such as perceptual, cognitive, and social contexts. The chapter will end with discussions on potential research directions in the future and implications related to the user experience in transreality. |

2020

|

![[Demo] Towards Interactive Virtual Dogs as a Pervasive Social Companion in Augmented Reality](https://sreal.ucf.edu/wp-content/uploads/2020/12/demoFig-e1607360623259.png) | Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Greg Welch [Demo] Towards Interactive Virtual Dogs as a Pervasive Social Companion in Augmented Reality Proceedings Article In: Proceedings of the combined International Conference on Artificial Reality & Telexistence and Eurographics Symposium on Virtual Environments (ICAT-EGVE)., pp. 29-30, 2020, (Best Demo Audience Choice Award). @inproceedings{Norouzi2020d,

title = {[Demo] Towards Interactive Virtual Dogs as a Pervasive Social Companion in Augmented Reality},

author = {Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/029-030.pdf},

doi = {10.2312/egve.20201283},

year = {2020},

date = {2020-12-04},

booktitle = {Proceedings of the combined International Conference on Artificial Reality & Telexistence and Eurographics Symposium on Virtual Environments (ICAT-EGVE).},

pages = {29-30},

abstract = {Pets and animal-assisted intervention sessions have been shown to be beneficial for humans' mental, social, and physical health. However, for specific populations, factors such as hygiene restrictions, allergies, and care and resource limitations reduce interaction opportunities. In parallel, understanding the capabilities of animals' technological representations, such as robotic and digital forms, has received considerable attention and has fueled the utilization of many of these technological representations. Additionally, recent advances in augmented reality technology have allowed for the realization of virtual animals with flexible appearances and behaviors to exist in the real world. In this demo, we present a companion virtual dog in augmented reality that aims to facilitate a range of interactions with populations, such as children and older adults. We discuss the potential benefits and limitations of such a companion and propose future use cases and research directions.},

note = {Best Demo Audience Choice Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Pets and animal-assisted intervention sessions have been shown to be beneficial for humans' mental, social, and physical health. However, for specific populations, factors such as hygiene restrictions, allergies, and care and resource limitations reduce interaction opportunities. In parallel, understanding the capabilities of animals' technological representations, such as robotic and digital forms, has received considerable attention and has fueled the utilization of many of these technological representations. Additionally, recent advances in augmented reality technology have allowed for the realization of virtual animals with flexible appearances and behaviors to exist in the real world. In this demo, we present a companion virtual dog in augmented reality that aims to facilitate a range of interactions with populations, such as children and older adults. We discuss the potential benefits and limitations of such a companion and propose future use cases and research directions. |

| Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Austin Erickson; Zubin Choudhary; Yifan Li; Greg Welch A Systematic Literature Review of Embodied Augmented Reality Agents in Head-Mounted Display Environments Proceedings Article In: Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 11, 2020. @inproceedings{Norouzi2020c,

title = {A Systematic Literature Review of Embodied Augmented Reality Agents in Head-Mounted Display Environments},

author = {Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Austin Erickson and Zubin Choudhary and Yifan Li and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/11/IVC_ICAT_EGVE2020.pdf

https://www.youtube.com/watch?v=IsX5q86pH4M},

year = {2020},

date = {2020-12-02},

urldate = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {11},

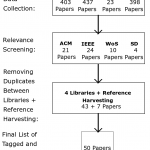

abstract = {Embodied agents, i.e., computer-controlled characters, have proven useful for various applications across a multitude of display setups and modalities. While most traditional work focused on embodied agents presented on a screen or projector, and a growing number of works are focusing on agents in virtual reality, a comparatively small number of publications looked at such agents in augmented reality (AR). Such AR agents, specifically when using see-through head-mounted displays (HMDs) as the display medium, show multiple critical differences to other forms of agents, including their appearances, behaviors, and physical-virtual interactivity. Due to the unique challenges in this specific field, and due to the comparatively limited attention by the research community so far, we believe that it is important to map the field to understand the current trends, challenges, and future research. In this paper, we present a systematic review of the research performed on interactive, embodied AR agents using HMDs. Starting with 1261 broadly related papers, we conducted an in-depth review of 50 directly related papers from 2000 to 2020, focusing on papers that reported on user studies aiming to improve our understanding of interactive agents in AR HMD environments or their utilization in specific applications. We identified common research and application areas of AR agents through a structured iterative process, present research trends, and gaps, and share insights on future directions.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

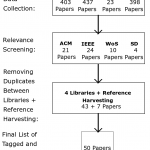

Embodied agents, i.e., computer-controlled characters, have proven useful for various applications across a multitude of display setups and modalities. While most traditional work focused on embodied agents presented on a screen or projector, and a growing number of works are focusing on agents in virtual reality, a comparatively small number of publications looked at such agents in augmented reality (AR). Such AR agents, specifically when using see-through head-mounted displays (HMDs) as the display medium, show multiple critical differences to other forms of agents, including their appearances, behaviors, and physical-virtual interactivity. Due to the unique challenges in this specific field, and due to the comparatively limited attention by the research community so far, we believe that it is important to map the field to understand the current trends, challenges, and future research. In this paper, we present a systematic review of the research performed on interactive, embodied AR agents using HMDs. Starting with 1261 broadly related papers, we conducted an in-depth review of 50 directly related papers from 2000 to 2020, focusing on papers that reported on user studies aiming to improve our understanding of interactive agents in AR HMD environments or their utilization in specific applications. We identified common research and application areas of AR agents through a structured iterative process, present research trends, and gaps, and share insights on future directions. |

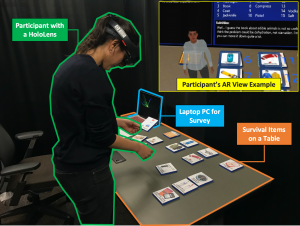

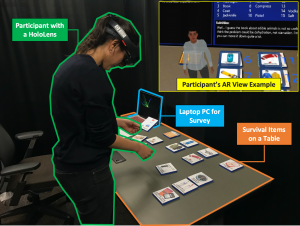

| Kangsoo Kim; Celso M. de Melo; Nahal Norouzi; Gerd Bruder; Gregory F. Welch Reducing Task Load with an Embodied Intelligent Virtual Assistant for Improved Performance in Collaborative Decision Making Proceedings Article In: Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), pp. 529-538, Atlanta, Georgia, 2020. @inproceedings{Kim2020rtl,

title = {Reducing Task Load with an Embodied Intelligent Virtual Assistant for Improved Performance in Collaborative Decision Making},

author = {Kangsoo Kim and Celso M. de Melo and Nahal Norouzi and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/02/IEEEVR2020_ARDesertSurvival.pdf

https://www.youtube.com/watch?v=G_iZ_asjp3I&t=6s, YouTube Presentation},

doi = {10.1109/VR46266.2020.00-30},

year = {2020},

date = {2020-03-23},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR)},

pages = {529-538},

address = {Atlanta, Georgia},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2019

|

| Kangsoo Kim; Nahal Norouzi; Tiffany Losekamp; Gerd Bruder; Mindi Anderson; Gregory Welch Effects of Patient Care Assistant Embodiment and Computer Mediation on User Experience Proceedings Article In: Proceedings of the IEEE International Conference on Artificial Intelligence & Virtual Reality (AIVR), pp. 17-24, IEEE, 2019. @inproceedings{Kim2019epc,

title = {Effects of Patient Care Assistant Embodiment and Computer Mediation on User Experience},

author = {Kangsoo Kim and Nahal Norouzi and Tiffany Losekamp and Gerd Bruder and Mindi Anderson and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/11/AIVR2019_Caregiver.pdf},

doi = {10.1109/AIVR46125.2019.00013},

year = {2019},

date = {2019-12-09},

booktitle = {Proceedings of the IEEE International Conference on Artificial Intelligence & Virtual Reality (AIVR)},

pages = {17-24},

publisher = {IEEE},

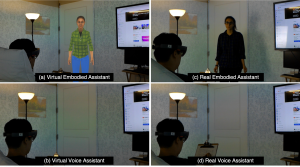

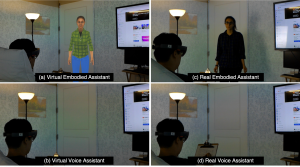

abstract = {Providers of patient care environments are facing an increasing demand for technological solutions that can facilitate increased patient satisfaction while being cost effective and practically feasible. Recent developments with respect to smart hospital room setups and smart home care environments have an immense potential to leverage advances in technologies such as Intelligent Virtual Agents, Internet of Things devices, and Augmented Reality to enable novel forms of patient interaction with caregivers and their environment.

In this paper, we present a human-subjects study in which we compared four types of simulated patient care environments for a range of typical tasks. In particular, we tested two forms of caregiver mediation with a real person or a virtual agent, and we compared two forms of caregiver embodiment with disembodied verbal or embodied interaction. Our results show that, as expected, a real caregiver provides the optimal user experience but an embodied virtual assistant is also a viable option for patient care environments, providing significantly higher social presence and engagement than voice-only interaction. We discuss the implications in the field of patient care and digital assistants.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Providers of patient care environments are facing an increasing demand for technological solutions that can facilitate increased patient satisfaction while being cost effective and practically feasible. Recent developments with respect to smart hospital room setups and smart home care environments have an immense potential to leverage advances in technologies such as Intelligent Virtual Agents, Internet of Things devices, and Augmented Reality to enable novel forms of patient interaction with caregivers and their environment.

In this paper, we present a human-subjects study in which we compared four types of simulated patient care environments for a range of typical tasks. In particular, we tested two forms of caregiver mediation with a real person or a virtual agent, and we compared two forms of caregiver embodiment with disembodied verbal or embodied interaction. Our results show that, as expected, a real caregiver provides the optimal user experience but an embodied virtual assistant is also a viable option for patient care environments, providing significantly higher social presence and engagement than voice-only interaction. We discuss the implications in the field of patient care and digital assistants. |

| Kendra Richards; Nikhil Mahalanobis; Kangsoo Kim; Ryan Schubert; Myungho Lee; Salam Daher; Nahal Norouzi; Jason Hochreiter; Gerd Bruder; Gregory F. Welch Analysis of Peripheral Vision and Vibrotactile Feedback During Proximal Search Tasks with Dynamic Virtual Entities in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Spatial User Interaction (SUI), pp. 3:1-3:9, ACM, 2019, ISBN: 978-1-4503-6975-6/19/10. @inproceedings{Richards2019b,

title = {Analysis of Peripheral Vision and Vibrotactile Feedback During Proximal Search Tasks with Dynamic Virtual Entities in Augmented Reality},

author = {Kendra Richards and Nikhil Mahalanobis and Kangsoo Kim and Ryan Schubert and Myungho Lee and Salam Daher and Nahal Norouzi and Jason Hochreiter and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/Richards2019b.pdf},

doi = {10.1145/3357251.3357585},

isbn = {978-1-4503-6975-6/19/10},

year = {2019},

date = {2019-10-19},

booktitle = {Proceedings of the ACM Symposium on Spatial User Interaction (SUI)},

pages = {3:1-3:9},

publisher = {ACM},

abstract = {A primary goal of augmented reality (AR) is to seamlessly embed virtual content into a real environment. There are many factors that can affect the perceived physicality and co-presence of virtual entities, including the hardware capabilities, the fidelity of the virtual behaviors, and sensory feedback associated with the interactions. In this paper, we present a study investigating participants' perceptions and behaviors during a time-limited search task in close proximity with virtual entities in AR. In particular, we analyze the effects of (i) visual conflicts in the periphery of an optical see-through head-mounted display, a Microsoft HoloLens, (ii) overall lighting in the physical environment, and (iii) multimodal feedback based on vibrotactile transducers mounted on a physical platform. Our results show significant benefits of vibrotactile feedback and reduced peripheral lighting for spatial and social presence, and engagement. We discuss implications of these effects for AR applications.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

A primary goal of augmented reality (AR) is to seamlessly embed virtual content into a real environment. There are many factors that can affect the perceived physicality and co-presence of virtual entities, including the hardware capabilities, the fidelity of the virtual behaviors, and sensory feedback associated with the interactions. In this paper, we present a study investigating participants' perceptions and behaviors during a time-limited search task in close proximity with virtual entities in AR. In particular, we analyze the effects of (i) visual conflicts in the periphery of an optical see-through head-mounted display, a Microsoft HoloLens, (ii) overall lighting in the physical environment, and (iii) multimodal feedback based on vibrotactile transducers mounted on a physical platform. Our results show significant benefits of vibrotactile feedback and reduced peripheral lighting for spatial and social presence, and engagement. We discuss implications of these effects for AR applications. |

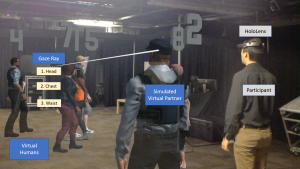

| Nahal Norouzi; Austin Erickson; Kangsoo Kim; Ryan Schubert; Joseph J. LaViola Jr.; Gerd Bruder; Gregory F. Welch Effects of Shared Gaze Parameters on Visual Target Identification Task Performance in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Spatial User Interaction (SUI), pp. 12:1-12:11, ACM, 2019, ISBN: 978-1-4503-6975-6/19/10, (Best Paper Award). @inproceedings{Norouzi2019esg,

title = {Effects of Shared Gaze Parameters on Visual Target Identification Task Performance in Augmented Reality},

author = {Nahal Norouzi and Austin Erickson and Kangsoo Kim and Ryan Schubert and Joseph J. LaViola Jr. and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/a12-norouzi.pdf},

doi = {10.1145/3357251.3357587},

isbn = {978-1-4503-6975-6/19/10},

year = {2019},

date = {2019-10-19},

urldate = {2019-10-19},

booktitle = {Proceedings of the ACM Symposium on Spatial User Interaction (SUI)},

pages = {12:1-12:11},

publisher = {ACM},

abstract = {Augmented reality (AR) technologies provide a shared platform for users to collaborate in a physical context involving both real and virtual content. To enhance the quality of interaction between AR users, researchers have proposed augmenting users' interpersonal space with embodied cues such as their gaze direction. While beneficial in achieving improved interpersonal spatial communication, such shared gaze environments suffer from multiple types of errors related to eye tracking and networking that can reduce objective performance and subjective experience.

In this paper, we conducted a human-subject study to understand the impact of accuracy, precision, latency, and dropout-based errors on users' performance when using shared gaze cues to identify a target among a crowd of people. We simulated varying amounts of errors and target distances, and measured participants' objective performance through their response time and error rate, and their subjective experience and cognitive load through questionnaires. We found some significant differences suggesting that the simulated error levels had stronger effects on participants' performance than target distance, with accuracy and latency having a high impact on participants' error rate. We also observed that participants assessed their own performance as lower than it objectively was, and we discuss implications for practical shared gaze applications.},

note = {Best Paper Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

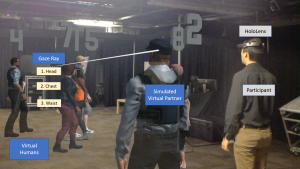

Augmented reality (AR) technologies provide a shared platform for users to collaborate in a physical context involving both real and virtual content. To enhance the quality of interaction between AR users, researchers have proposed augmenting users' interpersonal space with embodied cues such as their gaze direction. While beneficial in achieving improved interpersonal spatial communication, such shared gaze environments suffer from multiple types of errors related to eye tracking and networking that can reduce objective performance and subjective experience.

In this paper, we conducted a human-subject study to understand the impact of accuracy, precision, latency, and dropout-based errors on users' performance when using shared gaze cues to identify a target among a crowd of people. We simulated varying amounts of errors and target distances, and measured participants' objective performance through their response time and error rate, and their subjective experience and cognitive load through questionnaires. We found some significant differences suggesting that the simulated error levels had stronger effects on participants' performance than target distance, with accuracy and latency having a high impact on participants' error rate. We also observed that participants assessed their own performance as lower than it objectively was, and we discuss implications for practical shared gaze applications. |

| Nahal Norouzi; Kangsoo Kim; Myungho Lee; Ryan Schubert; Austin Erickson; Jeremy Bailenson; Gerd Bruder; Greg Welch

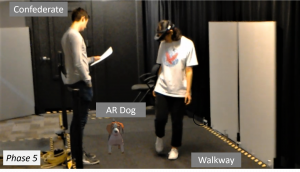

Walking Your Virtual Dog: Analysis of Awareness and Proxemics with Simulated Support Animals in Augmented Reality Proceedings Article In: Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2019, pp. 253-264, IEEE, 2019, ISBN: 978-1-7281-4765-9. @inproceedings{Norouzi2019cb,

title = {Walking Your Virtual Dog: Analysis of Awareness and Proxemics with Simulated Support Animals in Augmented Reality},

author = {Nahal Norouzi and Kangsoo Kim and Myungho Lee and Ryan Schubert and Austin Erickson and Jeremy Bailenson and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/Final__AR_Animal_ISMAR.pdf},

doi = {10.1109/ISMAR.2019.00040},

isbn = {978-1-7281-4765-9},

year = {2019},

date = {2019-10-16},

urldate = {2019-10-16},

booktitle = {Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2019},

pages = {253-264},

publisher = {IEEE},

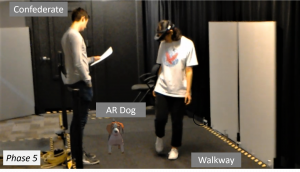

abstract = {Domestic animals have a long history of enriching human lives physically and mentally by filling a variety of different roles, such as service animals, emotional support animals, companions, and pets. Despite this, technological realizations of such animals in augmented reality (AR) are largely underexplored in terms of their behavior and interactions as well as effects they might have on human users' perception or behavior. In this paper, we describe a simulated virtual companion animal, in the form of a dog, in a shared AR space. We investigated its effects on participants' perception and behavior, including locomotion related to proxemics, with respect to their AR dog and other real people in the environment. We conducted a 2 by 2 mixed factorial human-subject study, in which we varied (i) the AR dog's awareness and behavior with respect to other people in the physical environment and (ii) the awareness and behavior of those people with respect to the AR dog. Our results show that having an AR companion dog changes participants' locomotion behavior, proxemics, and social interaction with other people who can or cannot see the AR dog. We also show that the AR dog's simulated awareness and behaviors have an impact on participants' perception, including co-presence, animalism, perceived physicality, and the dog's perceived awareness of the participant and environment. We discuss our findings and present insights and implications for the realization of effective AR animal companions.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

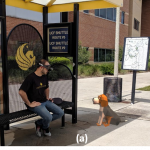

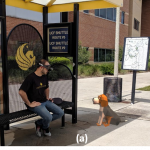

Domestic animals have a long history of enriching human lives physically and mentally by filling a variety of different roles, such as service animals, emotional support animals, companions, and pets. Despite this, technological realizations of such animals in augmented reality (AR) are largely underexplored in terms of their behavior and interactions as well as effects they might have on human users' perception or behavior. In this paper, we describe a simulated virtual companion animal, in the form of a dog, in a shared AR space. We investigated its effects on participants' perception and behavior, including locomotion related to proxemics, with respect to their AR dog and other real people in the environment. We conducted a 2 by 2 mixed factorial human-subject study, in which we varied (i) the AR dog's awareness and behavior with respect to other people in the physical environment and (ii) the awareness and behavior of those people with respect to the AR dog. Our results show that having an AR companion dog changes participants' locomotion behavior, proxemics, and social interaction with other people who can or cannot see the AR dog. We also show that the AR dog's simulated awareness and behaviors have an impact on participants' perception, including co-presence, animalism, perceived physicality, and the dog's perceived awareness of the participant and environment. We discuss our findings and present insights and implications for the realization of effective AR animal companions. |

![[POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents](https://sreal.ucf.edu/wp-content/uploads/2019/03/ieeevr_poster_thumbnail-1.png) | Salam Daher; Jason Hochreiter; Nahal Norouzi; Ryan Schubert; Gerd Bruder; Laura Gonzalez; Mindi Anderson; Desiree Diaz; Juan Cendan; Greg Welch [POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents Proceedings Article In: Proceedings of IEEE Virtual Reality (VR), 2019, 2019. @inproceedings{daher2019matching,

title = {[POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents},

author = {Salam Daher and Jason Hochreiter and Nahal Norouzi and Ryan Schubert and Gerd Bruder and Laura Gonzalez and Mindi Anderson and Desiree Diaz and Juan Cendan and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/03/IEEEVR2019_Poster_PVChildStudy.pdf},

year = {2019},

date = {2019-03-27},

publisher = {Proceedings of IEEE Virtual Reality (VR), 2019},

abstract = {Embodied virtual agents serving as patient simulators are widely used in medical training scenarios, ranging from physical patients to virtual patients presented via virtual and augmented reality technologies. Physical-virtual patients are a hybrid solution that combines the benefits of dynamic visuals integrated into a human-shaped physical form that can also present other cues, such as pulse, breathing sounds, and temperature. Sometimes in simulation the visuals and shape do not match. We carried out a human-participant study employing graduate nursing students in pediatric patient simulations comprising conditions associated with matching/non-matching of the visuals and shape.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Embodied virtual agents serving as patient simulators are widely used in medical training scenarios, ranging from physical patients to virtual patients presented via virtual and augmented reality technologies. Physical-virtual patients are a hybrid solution that combines the benefits of dynamic visuals integrated into a human-shaped physical form that can also present other cues, such as pulse, breathing sounds, and temperature. Sometimes in simulation the visuals and shape do not match. We carried out a human-participant study employing graduate nursing students in pediatric patient simulations comprising conditions associated with matching/non-matching of the visuals and shape. |

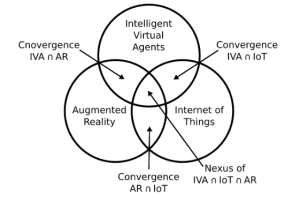

| Nahal Norouzi; Gerd Bruder; Brandon Belna; Stefanie Mutter; Damla Turgut; Greg Welch A Systematic Review of the Convergence of Augmented Reality, Intelligent Virtual Agents, and the Internet of Things Book Chapter In: Artificial Intelligence in IoT, pp. 37, Springer, 2019, ISBN: 978-3-030-04109-0. @inbook{Norouzi2019,

title = {A Systematic Review of the Convergence of Augmented Reality, Intelligent Virtual Agents, and the Internet of Things},

author = {Nahal Norouzi and Gerd Bruder and Brandon Belna and Stefanie Mutter and Damla Turgut and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/05/Norouzi-2019-IoT-AR-Final.pdf},

doi = {10.1007/978-3-030-04110-6_1},

isbn = {978-3-030-04109-0},

year = {2019},

date = {2019-01-10},

booktitle = {Artificial Intelligence in IoT},

pages = {37},

publisher = {Springer},

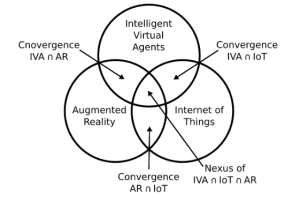

abstract = {In recent years we are beginning to see the convergence of three distinct research fields: Augmented Reality (AR), Intelligent Virtual Agents (IVAs), and the Internet of Things (IoT). Each of these has been classified as a disruptive technology for our society. Since their inception, the advancement of knowledge and development of technologies and systems in these fields was traditionally performed with limited input from each other. However, over the last years, we have seen research prototypes and commercial products being developed that cross the boundaries between these distinct fields to leverage their collective strengths. In this review paper, we summarize the body of literature published at the intersections between each pair of these fields, and we discuss a vision for the nexus of all three technologies.},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

In recent years we are beginning to see the convergence of three distinct research fields: Augmented Reality (AR), Intelligent Virtual Agents (IVAs), and the Internet of Things (IoT). Each of these has been classified as a disruptive technology for our society. Since their inception, the advancement of knowledge and development of technologies and systems in these fields was traditionally performed with limited input from each other. However, over the last years, we have seen research prototypes and commercial products being developed that cross the boundaries between these distinct fields to leverage their collective strengths. In this review paper, we summarize the body of literature published at the intersections between each pair of these fields, and we discuss a vision for the nexus of all three technologies. |

2018

|

| Myungho Lee; Nahal Norouzi; Gerd Bruder; Pamela J. Wisniewski; Gregory F. Welch The Physical-virtual Table: Exploring the Effects of a Virtual Human's Physical Influence on Social Interaction Proceedings Article In: Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology, pp. 25:1–25:11, ACM, New York, NY, USA, 2018, ISBN: 978-1-4503-6086-9, (Best Paper Award). @inproceedings{Lee2018ac,

title = {The Physical-virtual Table: Exploring the Effects of a Virtual Human's Physical Influence on Social Interaction},

author = {Myungho Lee and Nahal Norouzi and Gerd Bruder and Pamela J. Wisniewski and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2018/11/Lee2018ab.pdf},

doi = {10.1145/3281505.3281533},

isbn = {978-1-4503-6086-9},

year = {2018},

date = {2018-11-28},

booktitle = {Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology},

journal = {Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology},

pages = {25:1--25:11},

publisher = {ACM},

address = {New York, NY, USA},

series = {VRST '18},

abstract = {In this paper, we investigate the effects of the physical influence of a virtual human (VH) in the context of face-to-face interaction in augmented reality (AR). In our study, participants played a tabletop game with a VH, in which each player takes a turn and moves their own token along the designated spots on the shared table. We compared two conditions as follows: the VH in the virtual condition moves a virtual token that can only be seen through AR glasses, while the VH in the physical condition moves a physical token as the participants do; therefore the VH’s token can be seen even in the periphery of the AR glasses. For the physical condition, we designed an actuator system underneath the table. The actuator moves a magnet under the table which then moves the VH’s physical token over the surface of the table. Our results indicate that participants felt higher co-presence with the VH in the physical condition, and participants assessed the VH as a more physical entity compared to the VH in the virtual condition. We further observed transference effects when participants attributed the VH’s ability to move physical objects to other elements in the real world. Also, the VH’s physical influence improved participants’ overall experience with the VH. We discuss potential explanations for the findings and implications for future shared AR tabletop setups.},

note = {Best Paper Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

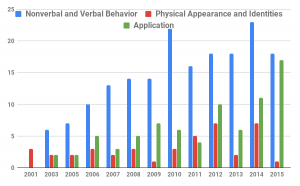

| Nahal Norouzi; Kangsoo Kim; Jason Hochreiter; Myungho Lee; Salam Daher; Gerd Bruder; Gregory Welch A Systematic Survey of 15 Years of User Studies Published in the Intelligent Virtual Agents Conference Proceedings Article In: IVA '18 Proceedings of the 18th International Conference on Intelligent Virtual Agents, pp. 17-22, ACM, 2018, ISBN: 978-1-4503-6013-5/18/11. @inproceedings{Norouzi2018c,

title = {A Systematic Survey of 15 Years of User Studies Published in the Intelligent Virtual Agents Conference},

author = {Nahal Norouzi and Kangsoo Kim and Jason Hochreiter and Myungho Lee and Salam Daher and Gerd Bruder and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2018/11/p17-norouzi-2.pdf},

doi = {10.1145/3267851.3267901},

isbn = {978-1-4503-6013-5/18/11},

year = {2018},

date = {2018-11-05},

booktitle = {IVA '18 Proceedings of the 18th International Conference on Intelligent Virtual Agents},

pages = {17-22},

publisher = {ACM},

organization = {ACM},

abstract = {The field of intelligent virtual agents (IVAs) has evolved immensely over the past 15 years, introducing new application opportunities in areas such as training, health care, and virtual assistants. In this survey paper, we provide a systematic review of the most influential user studies published in the IVA conference from 2001 to 2015 focusing on IVA development, human perception, and interactions. A total of 247 papers with 276 user studies have been classified and reviewed based on their contributions and impact. We identify the different areas of research and provide a summary of the papers with the highest impact. With the trends of past user studies and the current state of technology, we provide insights into future trends and research challenges.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

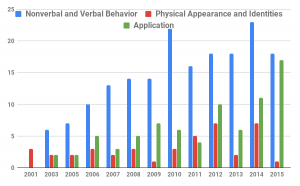

|
