2024

|

| Gerd Bruder; Michael Browne; Zubin Choudhary; Austin Erickson; Hiroshi Furuya; Matt Gottsacker; Ryan Schubert; Gregory Welch Visual Factors Influencing Trust and Reliance with Augmented Reality Systems Journal Article In: Journal of Vision Abstracts—Vision Sciences Society (VSS) Annual Meeting, 2024. @article{Bruder2024,

title = {Visual Factors Influencing Trust and Reliance with Augmented Reality Systems},

author = {Gerd Bruder and Michael Browne and Zubin Choudhary and Austin Erickson and Hiroshi Furuya and Matt Gottsacker and Ryan Schubert and Gregory Welch},

year = {2024},

date = {2024-05-17},

urldate = {2024-05-17},

journal = {Journal of Vision Abstracts—Vision Sciences Society (VSS) Annual Meeting},

abstract = {Augmented Reality (AR) systems are increasingly used for simulations, training, and operations across a wide range of application fields. Unfortunately, the imagery that current AR systems create often does not match our visual perception of the real world, which can make users feel like the AR system is not believable. This lack of belief can lead to negative training or experiences, where users lose trust in the AR system and adjust their reliance on AR. The latter is characterized by users adopting different cognitive perception-action pathways by which they integrate AR visual information for spatial tasks. In this work, we present a series of six within-subjects experiments (each N=20) in which we investigated trust in AR with respect to two display factors (field of view and visual contrast), two tracking factors (accuracy and precision), and two network factors (latency and dropouts). Participants performed a 360-degree visual search-and-selection task in a hybrid setup involving an AR head-mounted display and a CAVE-like simulated real environment. Participants completed the experiments with four perception-action pathways that represent different levels of the users’ reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-First (prioritizing real world over AR), and Real-Only (only relying on real world). Our results show that participants’ perception-action pathways and objective task performance were significantly affected by all six tested AR factors. In contrast, we found that their subjective responses for trust and reliance were often more affected by slight AR system differences than would elicit objective performance differences, and participants tended to overestimate or underestimate the trustworthiness of the AR system. 
Participants showed significantly higher task performance gains if their sense of trust was well-calibrated to the trustworthiness of the AR system, highlighting the importance of effectively managing users’ trust in future AR systems.

Acknowledgements: This material includes work supported in part by Vision Products LLC via US Air Force Research Laboratory (AFRL) Award Number FA864922P1038, and the Office of Naval Research under Award Numbers N00014-21-1-2578 and N00014-21-1-2882 (Dr. Peter Squire, Code 34).},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

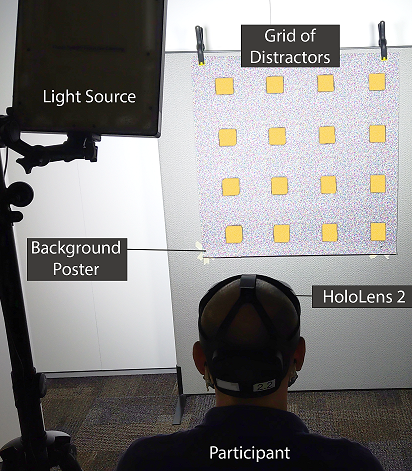

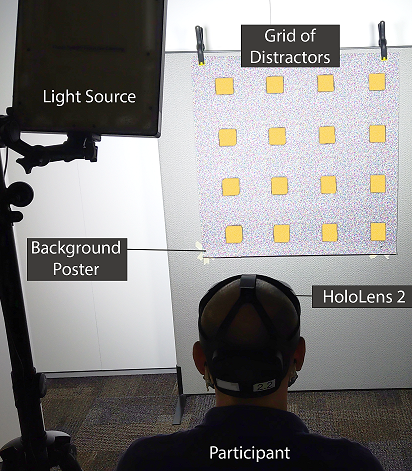

|

| Michael P. Browne; Gregory F. Welch; Gerd Bruder; Ryan Schubert Understanding the impact of trust on performance in a training system using augmented reality Proceedings Article In: Proceedings of SPIE Conference 13051: Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications VI, 2024. @inproceedings{Browne2024ut,

title = {Understanding the impact of trust on performance in a training system using augmented reality},

author = {Michael P. Browne and Gregory F. Welch and Gerd Bruder and Ryan Schubert},

year = {2024},

date = {2024-04-22},

urldate = {2024-04-22},

booktitle = {Proceedings of SPIE Conference 13051: Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications VI},

abstract = {The information presented by AR systems may not be 100% accurate, and anomalies like tracker errors, lack of opacity compared to the background and reduced field of view (FOV) can make users feel like an AR training system is not believable. This lack of belief can lead to negative training, where trainees adjust how they train due to flaws in the training system and are therefore less prepared for actual battlefield situations. We have completed an experiment to investigate trust, reliance, and human task performance in an augmented reality three-dimensional experimental scenario. Specifically, we used a methodology in which simulated real (complex) entities are supplemented by abstracted (basic) cues presented as overlays in an AR head mounted display (HMD) in a visual search and awareness task. We simulated properties of different AR displays to determine which of the properties most affect training efficacy. Results from our experiment will feed directly into the design of training systems that use AR/MR displays and will help increase the efficacy of training.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Laura Battistel; Matt Gottsacker; Greg Welch; Gerd Bruder; Massimiliano Zampini; Riccardo Parin Chill or Warmth: Exploring Temperature's Impact on Interpersonal Boundaries in VR Proceedings Article In: Adjunct Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR): 2nd Annual Workshop on Multi-modal Affective and Social Behavior Analysis and Synthesis in Extended Reality (MASSXR), pp. 1-3, 2024. @inproceedings{Battistel2024co,

title = {Chill or Warmth: Exploring Temperature's Impact on Interpersonal Boundaries in VR},

author = {Laura Battistel and Matt Gottsacker and Greg Welch and Gerd Bruder and Massimiliano Zampini and Riccardo Parin},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/02/Battistel2024co.pdf},

year = {2024},

date = {2024-03-16},

urldate = {2024-03-16},

booktitle = {Adjunct Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR): 2nd Annual Workshop on Multi-modal Affective and Social Behavior Analysis and Synthesis in Extended Reality (MASSXR)},

pages = {1-3},

abstract = {This position paper outlines a study on the influence of avatars displaying warmth or coldness cues on interpersonal space in virtual reality. Participants will engage in a comfort-distance task, approaching avatars exhibiting thermoregulatory behaviors. Anticipated findings include a reduction in interpersonal distance with warm cues and an increase with cold cues. The study will offer insights into the complex interplay between temperature, social perception, and interpersonal space.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Fariba Mostajeran; Sebastian Schneider; Gerd Bruder; Simone Kühn; Frank Steinicke Analyzing Cognitive Demands and Detection Thresholds for Redirected Walking in Immersive Forest and Urban Environments Proceedings Article In: Proceedings of IEEE Virtual Reality (VR), pp. 1-11, 2024. @inproceedings{Mostajeran2024ac,

title = {Analyzing Cognitive Demands and Detection Thresholds for Redirected Walking in Immersive Forest and Urban Environments},

author = {Fariba Mostajeran and Sebastian Schneider and Gerd Bruder and Simone Kühn and Frank Steinicke},

year = {2024},

date = {2024-03-16},

booktitle = {Proceedings of IEEE Virtual Reality (VR)},

pages = {1-11},

abstract = {Redirected walking is a locomotion technique that allows users to naturally walk through large immersive virtual environments (IVEs) by guiding them on paths that might vary from the paths they walk in the real world. While this technique enables the exploration of larger spaces in the IVE via natural walking, previous work has shown that it induces extra cognitive load for the users. On the other hand, previous research has shown that exposure to virtual nature environments can restore users’ diminished attentional capacities and lead to enhanced cognitive performances. Therefore, the aim of this paper is to investigate if the environment in which the user is redirected has the potential to reduce its cognitive demands. For this purpose, we conducted an experiment with 28 participants, who performed a spatial working memory task (i.e., 2-back test) while walking and being redirected with different gains in two different IVEs (i.e., (i) forest and (ii) urban). The results of frequentist and Bayesian analysis are consistent and provide evidence against an effect of the type of IVE on detection thresholds as well as cognitive and locomotion performances. Therefore, redirected walking is robust to the variation of the IVEs tested in this experiment. The results partly challenge previous research findings and, therefore, require future work in this direction.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

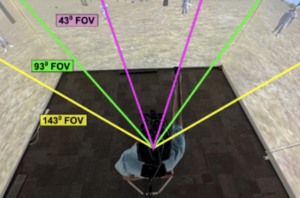

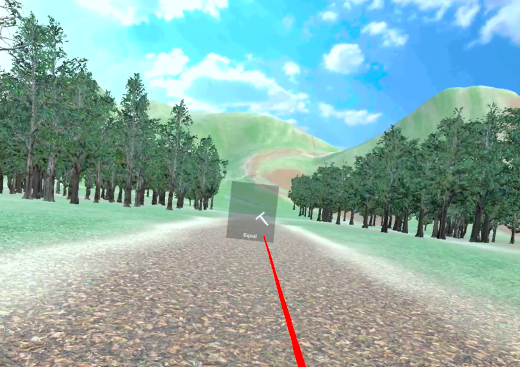

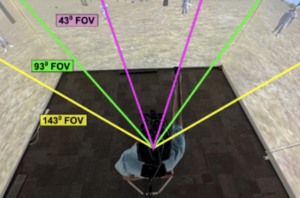

|

| Juanita Benjamin; Austin Erickson; Matt Gottsacker; Gerd Bruder; Gregory Welch Evaluating Transitive Perceptual Effects Between Virtual Entities in Outdoor Augmented Reality Proceedings Article In: Proceedings of IEEE Virtual Reality (VR), pp. 1-11, 2024. @inproceedings{Benjamin2024et,

title = {Evaluating Transitive Perceptual Effects Between Virtual Entities in Outdoor Augmented Reality},

author = {Juanita Benjamin and Austin Erickson and Matt Gottsacker and Gerd Bruder and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/02/Benjamin2024.pdf},

year = {2024},

date = {2024-03-16},

urldate = {2024-03-16},

booktitle = {Proceedings of IEEE Virtual Reality (VR)},

pages = {1-11},

abstract = {Augmented reality (AR) head-mounted displays (HMDs) provide users with a view in which digital content is blended spatially with the outside world. However, one critical issue faced with such display technologies is misperception, i.e., perceptions of computer-generated content that differs from our human perception of other real-world objects or entities. Misperception can lead to mistrust in these systems and negative impacts in a variety of application fields. Although there is a considerable amount of research investigating either size, distance, or speed misperception in AR, far less is known about the relationships between these aspects. In this paper, we present an outdoor AR experiment (N=20) using a HoloLens 2 HMD. Participants estimated size, distance, and speed of Familiar and Unfamiliar outdoor animals at three distances (30, 60, 90 meters). To investigate whether providing information about one aspect may influence another, we divided our experiment into three phases. In Phase I, participants estimated the three aspects without any provided information. In Phase II, participants were given accurate size information, then asked to estimate distance and speed. In Phase III, participants were given accurate distance and size information, then asked to estimate speed. Our results show that estimates of speed in particular of the Unfamiliar animals benefited from provided size information, while speed estimates of all animals benefited from provided distance information. We found no support for the assumption that distance estimates benefited from provided size information.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

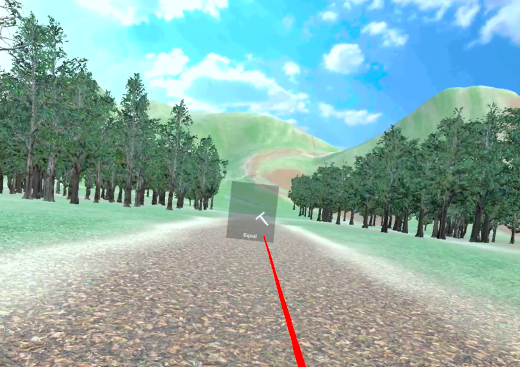

|

| Matt Gottsacker; Gerd Bruder; Gregory F. Welch rlty2rlty: Transitioning Between Realities with Generative AI Proceedings Article Forthcoming In: Proceedings of IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 1–2, Forthcoming. @inproceedings{nokey,

title = {rlty2rlty: Transitioning Between Realities with Generative AI},

author = {Matt Gottsacker and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/02/vr24d-sub1075-cam-i5.pdf

https://www.youtube.com/watch?v=u4CyvdE3Y3g},

year = {2024},

date = {2024-02-20},

booktitle = {Proceedings of IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages = {1--2},

abstract = {We present a system for visually transitioning a mixed reality (MR) user between two arbitrary realities (e.g., between two virtual worlds or between the real environment and a virtual world). The system uses artificial intelligence (AI) to generate a 360° video that transforms the user’s starting environment to another environment, passing through a liminal space that could help them relax between tasks or prepare them for the ending environment. The video can then be viewed on an MR headset.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

|

2023

|

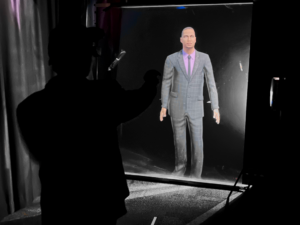

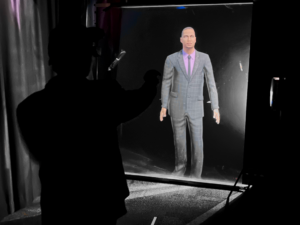

| Juanita Benjamin; Gerd Bruder; Carsten Neumann; Dirk Reiners; Carolina Cruz-Neira; Gregory F Welch Perception and Proxemics with Virtual Humans on Transparent Display Installations in Augmented Reality Proceedings Article Forthcoming In: Proceedings of the IEEE Conference on International Symposium on Mixed and Augmented Reality (ISMAR) 2023., pp. 1–10, Forthcoming. @inproceedings{benjamin2023arscreen,

title = {Perception and Proxemics with Virtual Humans on Transparent Display Installations in Augmented Reality},

author = {Juanita Benjamin and Gerd Bruder and Carsten Neumann and Dirk Reiners and Carolina Cruz-Neira and Gregory F Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/08/Perception-and-Proxemics-ISMAR-23-2.pdf},

year = {2023},

date = {2023-10-21},

urldate = {2023-10-21},

booktitle = {Proceedings of the IEEE Conference on International Symposium on Mixed and Augmented Reality (ISMAR) 2023.},

pages = {1--10},

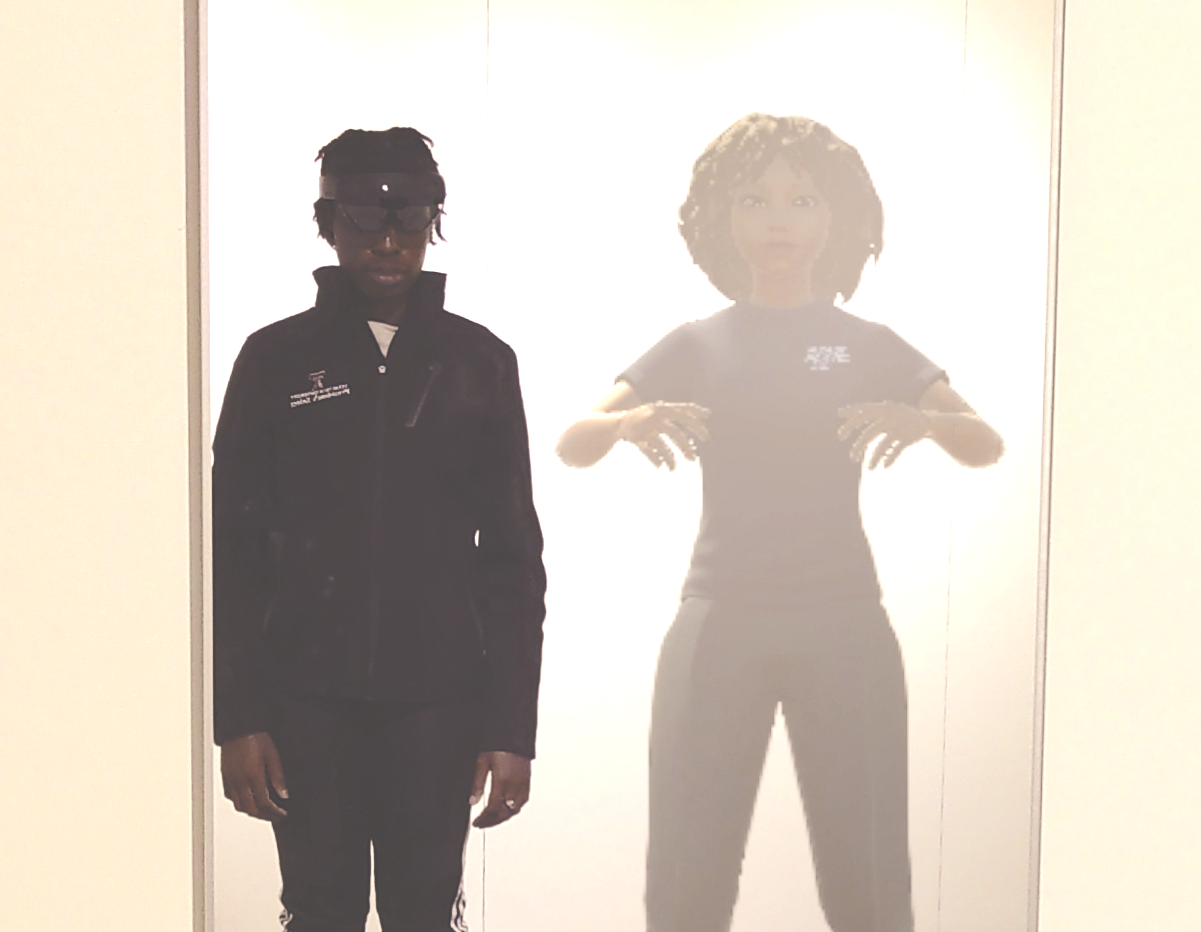

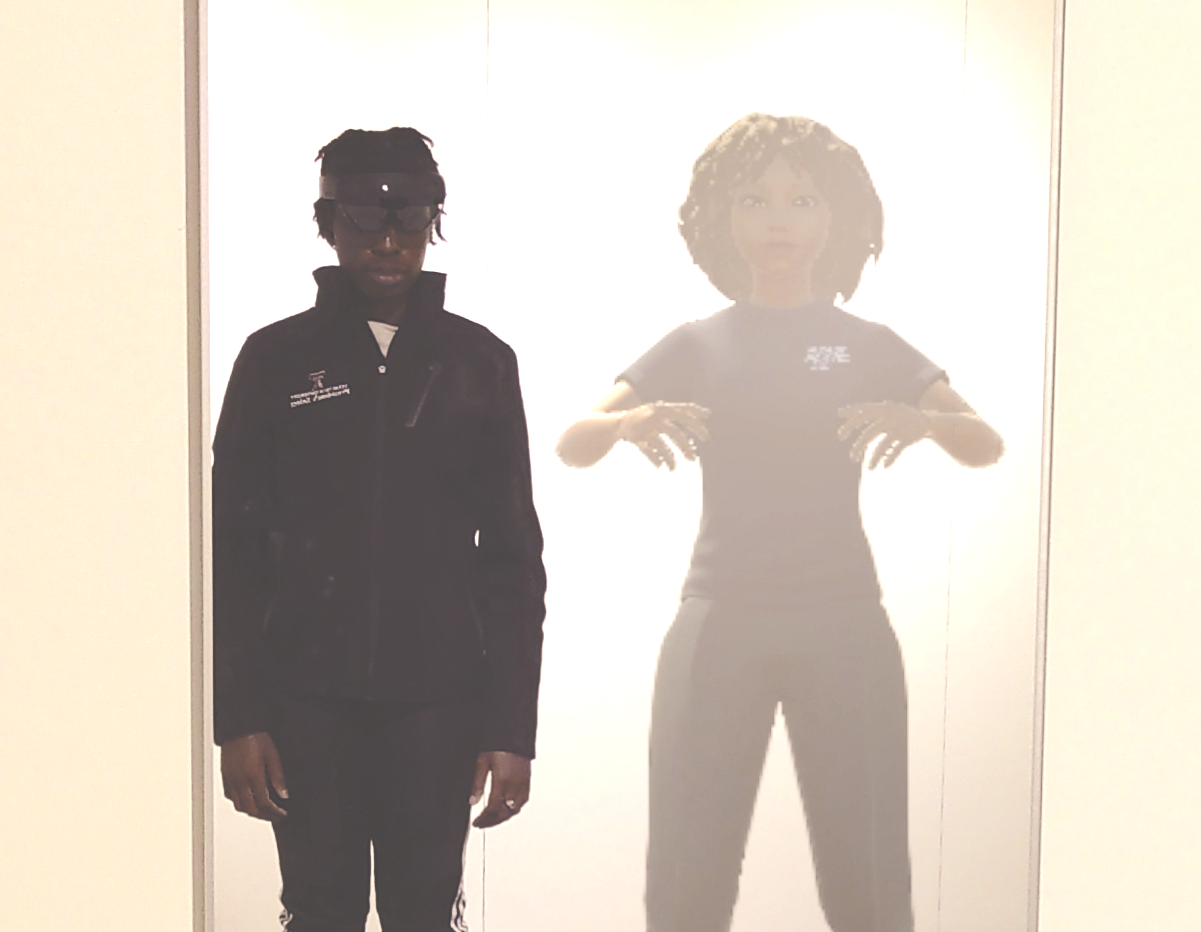

abstract = {It is not uncommon for science fiction movies to portray futuristic user interfaces that can only be realized decades later with state-of-the-art technology. In this work, we present a prototypical augmented reality (AR) installation that was inspired by the movie The Time Machine (2002). It consists of a transparent screen that acts as a window through which users can see the stereoscopic projection of a three-dimensional virtual human (VH). However, there are some key differences between the vision of this technology and the way VHs on these displays are actually perceived. In particular, the additive light model of these displays causes darker VHs to appear more transparent, while light in the physical environment further increases transparency, which may affect the way VHs are perceived, to what degree they are trusted, and the distances one maintains from them in a spatial setting. In this paper, we present a user study in which we investigate how transparency in the scope of transparent AR screens affects the perception of a VH's appearance, social presence with the VH, and the social space around users as defined by proxemics theory. Our results indicate that appearances are comparatively robust to transparency, while social presence improves in darker physical environments, and proxemic distances to the VH largely depend on one's distance from the screen but are not noticeably affected by transparency. Overall, our results suggest that such transparent AR screens can be an effective technology for facilitating social interactions between users and VHs in a shared physical space.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

|

| Zubin Choudhary; Gerd Bruder; Gregory F. Welch Visual Facial Enhancements Can Significantly Improve Speech Perception in the Presence of Noise Journal Article Forthcoming In: IEEE Transactions on Visualization and Computer Graphics, Special Issue on the IEEE International Symposium on Mixed and Augmented Reality (ISMAR) 2023., Forthcoming. @article{Choudhary2023Speech,

title = {Visual Facial Enhancements Can Significantly Improve Speech Perception in the Presence of Noise},

author = {Zubin Choudhary and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/07/final_sub1046_ISMAR23-compressed.pdf},

year = {2023},

date = {2023-10-17},

urldate = {2023-10-17},

journal = {IEEE Transactions on Visualization and Computer Graphics, Special Issue on the IEEE International Symposium on Mixed and Augmented Reality (ISMAR) 2023.},

abstract = {Human speech perception is generally optimal in quiet environments; however, it becomes more difficult and error-prone in the presence of noise, such as other humans speaking nearby or ambient noise. In such situations, human speech perception is improved by speech reading, i.e., watching the movements of a speaker’s mouth and face, either consciously as done by people with hearing loss or subconsciously by other humans. While previous work focused largely on speech perception of two-dimensional videos of faces, there is a gap in the research field focusing on facial features as seen in head-mounted displays, including the impacts of display resolution, and the effectiveness of visually enhancing a virtual human face on speech perception in the presence of noise.

In this paper, we present a comparative user study (N = 21) in which we investigated an audio-only condition compared to two levels of head-mounted display resolution (1832×1920 or 916×960 pixels per eye) and two levels of the native or visually enhanced appearance of a virtual human, the latter consisting of an up-scaled facial representation and simulated lipstick (lip coloring) added to increase contrast. To understand effects on speech perception in noise, we measured participants’ speech reception thresholds (SRTs) for each audio-visual stimulus condition. These thresholds indicate the decibel levels of the speech signal that are necessary for a listener to receive the speech correctly 50% of the time. First, we show that the display resolution significantly affected participants’ ability to perceive the speech signal in noise, which has practical implications for the field, especially in social virtual environments. Second, we show that our visual enhancement method was able to compensate for limited display resolution and was generally preferred by participants. Specifically, our participants indicated that they benefited from the head scaling more than the added facial contrast from the simulated lipstick. We discuss relationships, implications, and guidelines for applications that aim to leverage such enhancements.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {article}

}

|

| Ryan Schubert; Gerd Bruder; Gregory F. Welch Testbed for Intuitive Magnification in Augmented Reality Proceedings Article In: Proceedings IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pp. 1–2, 2023. @inproceedings{schubert2023tf,

title = {Testbed for Intuitive Magnification in Augmented Reality},

author = {Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/08/ismar23_schubert2023tf.pdf

https://sreal.ucf.edu/wp-content/uploads/2023/08/ismar23_schubert2023tf.mp4},

year = {2023},

date = {2023-10-16},

urldate = {2023-10-16},

booktitle = {Proceedings IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages = {1--2},

abstract = {Humans strive to magnify portions of our visually perceived surroundings for various reasons, e.g., because they are too far away or too small to see. Different technologies have been introduced for magnification, from monoculars to binoculars, and telescopes to microscopes. Modern high-resolution digital cameras are a promising technology: they are capable of optical or digital zoom and are very flexible, as their imagery can be presented to users in real time with mobile or head-mounted displays and intuitive 3D user interfaces that allow control over the magnification. In this demo, we present a novel design space and testbed for intuitive augmented reality (AR) magnifications, where an AR optical see-through head-mounted display is used for the presentation of real-time magnified camera imagery. The testbed includes different unimanual and bimanual AR interaction techniques for defining the scale factor and portion of the user's visual field that should be magnified.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Zubin Choudhary; Gerd Bruder; Greg Welch Visual Hearing Aids: Artificial Visual Speech Stimuli for Audiovisual Speech Perception in Noise Conference Forthcoming Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology, 2023, Forthcoming. @conference{Choudhary2023aids,

title = {Visual Hearing Aids: Artificial Visual Speech Stimuli for Audiovisual Speech Perception in Noise},

author = {Zubin Choudhary and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/09/MAIN_VRST_23_SpeechPerception_Phone.pdf},

year = {2023},

date = {2023-10-09},

urldate = {2023-10-09},

booktitle = {Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology, 2023},

abstract = {Speech perception is optimal in quiet environments, but noise can impair comprehension and increase errors. In these situations, lip reading can help, but it is not always possible, such as during an audio call or when wearing a face mask. One approach to improve speech perception in these situations is to use an artificial visual lip reading aid. In this paper, we present a user study (N = 17) in which we compared three levels of audio stimuli visualizations and two levels of modulating the appearance of the visualization based on the speech signal, and we compared them against two control conditions: an audio-only condition, and a real human speaking. We measured participants’ speech reception thresholds (SRTs) to understand the effects of these visualizations on speech perception in noise. These thresholds indicate the decibel levels of the speech signal that are necessary for a listener to receive the speech correctly 50% of the time. Additionally, we measured the usability of the approaches and the user experience. We found that the different artificial visualizations improved participants’ speech reception compared to the audio-only baseline condition, but they were significantly poorer than the real human condition. This suggests that different visualizations can improve speech perception when the speaker’s face is not available. However, we also discuss limitations of current plug-and-play lip sync software and abstract representations of the speaker in the context of speech perception.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {conference}

}

Speech perception is optimal in quiet environments, but noise can impair comprehension and increase errors. In these situations, lip reading can help, but it is not always possible, such as during an audio call or when wearing a face mask. One approach to improve speech perception in these situations is to use an artificial visual lip reading aid. In this paper, we present a user study (N = 17) in which we compared three levels of audio stimuli visualizations and two levels of modulating the appearance of the visualization based on the speech signal, and we compared them against two control conditions: an audio-only condition, and a real human speaking. We measured participants’ speech reception thresholds (SRTs) to understand the effects of these visualizations on speech perception in noise. These thresholds indicate the decibel levels of the speech signal that are necessary for a listener to receive the speech correctly 50% of the time. Additionally, we measured the usability of the approaches and the user experience. We found that the different artificial visualizations improved participants’ speech reception compared to the audio-only baseline condition, but they were significantly poorer than the real human condition. This suggests that different visualizations can improve speech perception when the speaker’s face is not available. However, we also discuss limitations of current plug-and-play lip sync software and abstract representations of the speaker in the context of speech perception. |

| Ryan Schubert; Gerd Bruder; Gregory F. Welch Intuitive User Interfaces for Real-Time Magnification in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST), pp. 1–10, 2023. @inproceedings{schubert2023iu,

title = {Intuitive User Interfaces for Real-Time Magnification in Augmented Reality},

author = {Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/08/vrst23_bruder2023iu.pdf},

year = {2023},

date = {2023-10-09},

urldate = {2023-10-09},

booktitle = {Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST)},

pages = {1--10},

abstract = {Various reasons exist why humans desire to magnify portions of our visually perceived surroundings, e.g., because they are too far away or too small to see with the naked eye. Different technologies are used to facilitate magnification, from telescopes to microscopes using monocular or binocular designs. In particular, modern digital cameras capable of optical and/or digital zoom are very flexible as their high-resolution imagery can be presented to users in real-time with displays and interfaces allowing control over the magnification. In this paper, we present a novel design space of intuitive augmented reality (AR) magnifications where an AR head-mounted display is used for the presentation of real-time magnified camera imagery. We present a user study evaluating and comparing different visual presentation methods and AR interaction techniques. Our results show different advantages for unimanual, bimanual, and situated AR magnification window interfaces, near versus far vergence distances for the image presentation, and five different user interfaces for specifying the scaling factor of the imagery.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Various reasons exist why humans desire to magnify portions of our visually perceived surroundings, e.g., because they are too far away or too small to see with the naked eye. Different technologies are used to facilitate magnification, from telescopes to microscopes using monocular or binocular designs. In particular, modern digital cameras capable of optical and/or digital zoom are very flexible as their high-resolution imagery can be presented to users in real-time with displays and interfaces allowing control over the magnification. In this paper, we present a novel design space of intuitive augmented reality (AR) magnifications where an AR head-mounted display is used for the presentation of real-time magnified camera imagery. We present a user study evaluating and comparing different visual presentation methods and AR interaction techniques. Our results show different advantages for unimanual, bimanual, and situated AR magnification window interfaces, near versus far vergence distances for the image presentation, and five different user interfaces for specifying the scaling factor of the imagery. |

| Zubin Choudhary; Nahal Norouzi; Austin Erickson; Ryan Schubert; Gerd Bruder; Gregory F. Welch Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions Conference Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023, 2023. @conference{Choudhary2023,

title = {Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions},

author = {Zubin Choudhary and Nahal Norouzi and Austin Erickson and Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/01/PostReview_ConflictingEmotions_IEEEVR23-1.pdf},

year = {2023},

date = {2023-03-29},

urldate = {2023-03-29},

booktitle = {Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023},

abstract = {The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e., the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically, we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigated three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggests that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increases, the multimodal expression becomes less predictable. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness ratings and high trust; however, if one of the two expressions changes, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e., the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically, we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigated three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggests that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increases, the multimodal expression becomes less predictable. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness ratings and high trust; however, if one of the two expressions changes, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues. |

| Kangsoo Kim; Nahal Norouzi; Dongsik Jo; Gerd Bruder; Greg Welch The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality Book Chapter In: Nee, Andrew Yeh Ching; Ong, Soh Khim (Ed.): Springer Handbook of Augmented Reality, pp. 797–829, Springer International Publishing, Cham, 2023, ISBN: 978-3-030-67822-7. @inbook{Kim2023aa,

title = {The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality},

author = {Kangsoo Kim and Nahal Norouzi and Dongsik Jo and Gerd Bruder and Greg Welch},

editor = {Andrew Yeh Ching Nee and Soh Khim Ong},

url = {https://doi.org/10.1007/978-3-030-67822-7_32},

doi = {10.1007/978-3-030-67822-7_32},

isbn = {978-3-030-67822-7},

year = {2023},

date = {2023-01-01},

urldate = {2023-01-01},

booktitle = {Springer Handbook of Augmented Reality},

pages = {797--829},

publisher = {Springer International Publishing},

address = {Cham},

abstract = {Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, augmented/mixed reality (AR/MR), which combines virtual content with the real environment, is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced artificial intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives.},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, augmented/mixed reality (AR/MR), which combines virtual content with the real environment, is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced artificial intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives. |

2022

|

| Zubin Choudhary; Austin Erickson; Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Greg Welch Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues Journal Article In: ACM Transactions on Applied Perception, 2022. @article{Choudhary2022,

title = {Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues},

author = {Zubin Choudhary and Austin Erickson and Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://drive.google.com/file/d/1jdxwLchDH0RPouVENoSx8iSOyDmJhqKb/view?usp=sharing},

year = {2022},

date = {2022-11-02},

urldate = {2022-11-02},

journal = {ACM Transactions on Applied Perception},

abstract = {Extended reality (XR) technologies, such as virtual reality (VR) and augmented reality (AR), provide users, their avatars, and embodied agents a shared platform to collaborate in a spatial context. Traditional face-to-face communication is limited by users' proximity, meaning that another human's non-verbal embodied cues become more difficult to perceive the farther one is away from that person. In this paper, we describe and evaluate the ``Big Head'' technique, in which a human's head in VR/AR is scaled up relative to their distance from the observer as a mechanism for enhancing the visibility of non-verbal facial cues, such as facial expressions or eye gaze. To better understand and explore this technique, we present two complementary human-subject experiments in this paper.

In our first experiment, we conducted a VR study with a head-mounted display (HMD) to understand the impact of increased or decreased head scales on participants' ability to perceive facial expressions as well as their sense of comfort and feeling of ``uncanniness'' over distances of up to 10 meters. We explored two different scaling methods and compared perceptual thresholds and user preferences. Our second experiment was performed in an outdoor AR environment with an optical see-through (OST) HMD. Participants were asked to estimate facial expressions and eye gaze, and identify a virtual human over large distances of 30, 60, and 90 meters. In both experiments, our results show significant differences in minimum, maximum, and ideal head scales for different distances and tasks related to perceiving faces, facial expressions, and eye gaze, while we also found that participants were more comfortable with slightly bigger heads at larger distances. We discuss our findings with respect to the technologies used, and we discuss implications and guidelines for practical applications that aim to leverage XR-enhanced facial cues.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Extended reality (XR) technologies, such as virtual reality (VR) and augmented reality (AR), provide users, their avatars, and embodied agents a shared platform to collaborate in a spatial context. Traditional face-to-face communication is limited by users' proximity, meaning that another human's non-verbal embodied cues become more difficult to perceive the farther one is away from that person. In this paper, we describe and evaluate the "Big Head" technique, in which a human's head in VR/AR is scaled up relative to their distance from the observer as a mechanism for enhancing the visibility of non-verbal facial cues, such as facial expressions or eye gaze. To better understand and explore this technique, we present two complementary human-subject experiments in this paper.

In our first experiment, we conducted a VR study with a head-mounted display (HMD) to understand the impact of increased or decreased head scales on participants' ability to perceive facial expressions as well as their sense of comfort and feeling of "uncanniness" over distances of up to 10 meters. We explored two different scaling methods and compared perceptual thresholds and user preferences. Our second experiment was performed in an outdoor AR environment with an optical see-through (OST) HMD. Participants were asked to estimate facial expressions and eye gaze, and identify a virtual human over large distances of 30, 60, and 90 meters. In both experiments, our results show significant differences in minimum, maximum, and ideal head scales for different distances and tasks related to perceiving faces, facial expressions, and eye gaze, while we also found that participants were more comfortable with slightly bigger heads at larger distances. We discuss our findings with respect to the technologies used, and we discuss implications and guidelines for practical applications that aim to leverage XR-enhanced facial cues. |

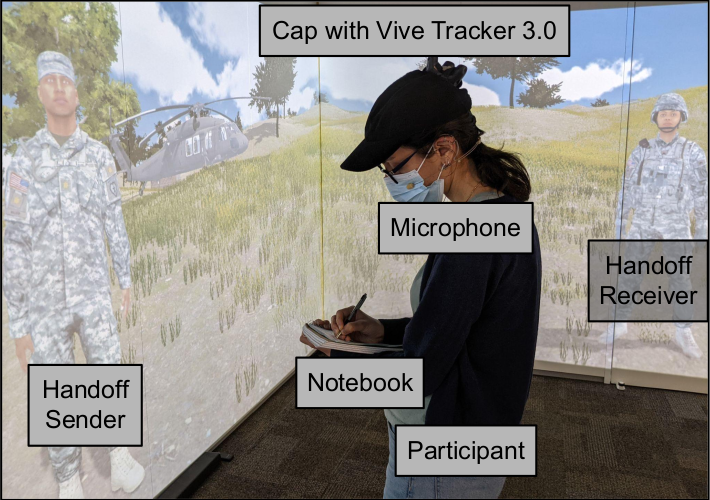

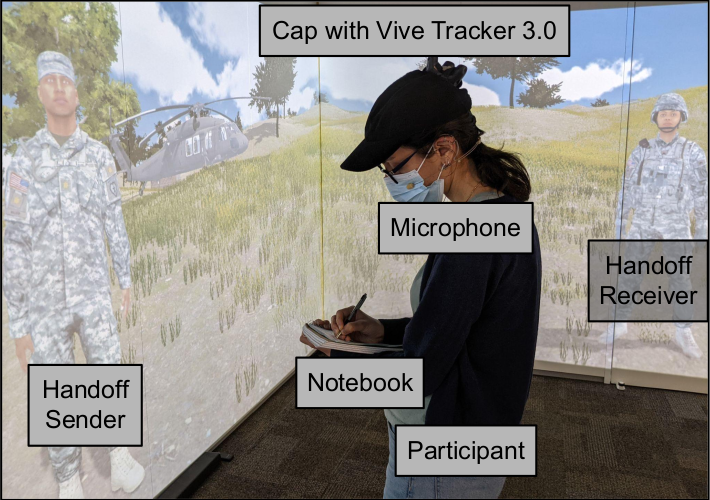

| Matt Gottsacker; Nahal Norouzi; Ryan Schubert; Frank Guido-Sanz; Gerd Bruder; Gregory F. Welch Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation Proceedings Article In: 28th ACM Symposium on Virtual Reality Software and Technology (VRST '22), pp. 1–10, 2022, ISBN: 978-1-4503-9889-3. @inproceedings{gottsacker2022noise,

title = {Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation},

author = {Matt Gottsacker and Nahal Norouzi and Ryan Schubert and Frank Guido-Sanz and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/main.pdf},

doi = {10.1145/3562939.3565627},

isbn = {978-1-4503-9889-3},

year = {2022},

date = {2022-10-27},

urldate = {2022-10-27},

booktitle = {28th ACM Symposium on Virtual Reality Software and Technology (VRST '22)},

pages = {1--10},

abstract = {When medical caregivers transfer patients to another person's care (a patient handoff), it is essential they effectively communicate the patient's condition to ensure the best possible health outcomes. Emergency situations caused by mass casualty events (e.g., natural disasters) introduce additional difficulties to handoff procedures such as environmental noise. We created a projected mixed reality simulation of a handoff scenario involving a medical evacuation by air and tested how low, medium, and high levels of helicopter noise affected participants' handoff experience, handoff performance, and behaviors. Through a human-subjects experimental design study (N = 21), we found that the addition of noise increased participants' subjective stress and task load, decreased their self-assessed and actual performance, and caused participants to speak louder. Participants also stood closer to the virtual human sending the handoff information when listening to the handoff than they stood to the receiver when relaying the handoff information. We discuss implications for the design of handoff training simulations and avenues for future handoff communication research.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

When medical caregivers transfer patients to another person's care (a patient handoff), it is essential they effectively communicate the patient's condition to ensure the best possible health outcomes. Emergency situations caused by mass casualty events (e.g., natural disasters) introduce additional difficulties to handoff procedures such as environmental noise. We created a projected mixed reality simulation of a handoff scenario involving a medical evacuation by air and tested how low, medium, and high levels of helicopter noise affected participants' handoff experience, handoff performance, and behaviors. Through a human-subjects experimental design study (N = 21), we found that the addition of noise increased participants' subjective stress and task load, decreased their self-assessed and actual performance, and caused participants to speak louder. Participants also stood closer to the virtual human sending the handoff information when listening to the handoff than they stood to the receiver when relaying the handoff information. We discuss implications for the design of handoff training simulations and avenues for future handoff communication research. |

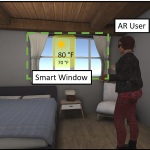

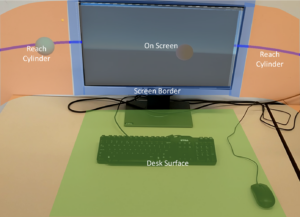

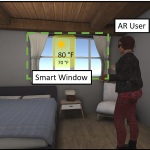

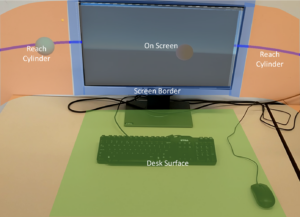

| Robbe Cools; Matt Gottsacker; Adalberto Simeone; Gerd Bruder; Gregory F. Welch; Steven Feiner Towards a Desktop–AR Prototyping Framework: Prototyping Cross-Reality Between Desktops and Augmented Reality Proceedings Article In: Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 175–182, 2022. @inproceedings{gottsacker2022desktopar,

title = {Towards a Desktop–AR Prototyping Framework: Prototyping Cross-Reality Between Desktops and Augmented Reality},

author = {Robbe Cools and Matt Gottsacker and Adalberto Simeone and Gerd Bruder and Gregory F. Welch and Steven Feiner},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/ISMAR2022_Workshop_on_Prototyping_Cross_Reality_Systems.pdf},

doi = {10.1109/ISMAR-Adjunct57072.2022.00040},

year = {2022},

date = {2022-10-22},

urldate = {2022-10-22},

booktitle = {Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages = {175--182},

abstract = {Augmented reality (AR) head-worn displays (HWDs) allow users to view and interact with virtual objects anchored in the 3D space around them. These devices extend users’ digital interaction space compared to traditional desktop computing environments by both allowing users to interact with a larger virtual display and by affording new interactions (e.g., intuitive 3D manipulations) with virtual content. Yet, 2D desktop displays still have advantages over AR HWDs for common computing tasks and will continue to be used well into the future. Because of their not entirely overlapping set of affordances, AR HWDs and 2D desktops may be useful in a hybrid configuration; that is, users may benefit from being able to work on computing tasks in either environment (or simultaneously in both environments) while transitioning virtual content between them. In support of such computing environments, we propose a prototyping framework for bidirectional Cross-Reality interactions between a desktop and an AR HWD. We further implemented a proof-of-concept seamless Desktop–AR display space, and describe two concrete use cases for our framework. In future work, we aim to further develop our proof-of-concept into the proposed framework.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Augmented reality (AR) head-worn displays (HWDs) allow users to view and interact with virtual objects anchored in the 3D space around them. These devices extend users’ digital interaction space compared to traditional desktop computing environments by both allowing users to interact with a larger virtual display and by affording new interactions (e.g., intuitive 3D manipulations) with virtual content. Yet, 2D desktop displays still have advantages over AR HWDs for common computing tasks and will continue to be used well into the future. Because of their not entirely overlapping set of affordances, AR HWDs and 2D desktops may be useful in a hybrid configuration; that is, users may benefit from being able to work on computing tasks in either environment (or simultaneously in both environments) while transitioning virtual content between them. In support of such computing environments, we propose a prototyping framework for bidirectional Cross-Reality interactions between a desktop and an AR HWD. We further implemented a proof-of-concept seamless Desktop–AR display space, and describe two concrete use cases for our framework. In future work, we aim to further develop our proof-of-concept into the proposed framework. |

![[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays](https://sreal.ucf.edu/wp-content/uploads/2022/10/phonefigure2-e1664926618216-150x150.png) | Austin Erickson; Gerd Bruder; Gregory F Welch [Poster] Adapting Michelson Contrast for use with Optical See-Through Displays Proceedings Article In: Adjunct Proceedings of the IEEE International Symposium on Mixed and Augmented Reality, pp. 1–2, IEEE, 2022. @inproceedings{Erickson2022c,

title = {[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays},

author = {Austin Erickson and Gerd Bruder and Gregory F Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/ISMARContrastModel_POSTER.pdf},

year = {2022},

date = {2022-10-17},

urldate = {2022-10-17},

booktitle = {Adjunct Proceedings of the IEEE International Symposium on Mixed and Augmented Reality},

pages = {1--2},

publisher = {IEEE},

organization = {IEEE},

abstract = {Due to the additive light model employed by current optical see-through head-mounted displays (OST-HMDs), the perceived contrast of displayed imagery is reduced with increased environment luminance, often to the point where it becomes difficult for the user to accurately distinguish the presence of visual imagery. While existing contrast models, such as Weber contrast and Michelson contrast, can be used to predict when the observer will experience difficulty distinguishing and interpreting stimuli on traditional displays, these models must be adapted for use with additive displays. In this paper, we present a simplified model of luminance contrast for optical see-through displays derived from Michelson’s contrast equation and demonstrate two applications of the model: informing design decisions involving the color of virtual imagery and optimizing environment light attenuation through the use of neutral density filters.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Due to the additive light model employed by current optical see-through head-mounted displays (OST-HMDs), the perceived contrast of displayed imagery is reduced with increased environment luminance, often to the point where it becomes difficult for the user to accurately distinguish the presence of visual imagery. While existing contrast models, such as Weber contrast and Michelson contrast, can be used to predict when the observer will experience difficulty distinguishing and interpreting stimuli on traditional displays, these models must be adapted for use with additive displays. In this paper, we present a simplified model of luminance contrast for optical see-through displays derived from Michelson’s contrast equation and demonstrate two applications of the model: informing design decisions involving the color of virtual imagery and optimizing environment light attenuation through the use of neutral density filters. |

![[POSTER] Exploring Cues and Signaling to Improve Cross-Reality Interruptions](https://sreal.ucf.edu/wp-content/uploads/2022/06/would_interrupt_red-300x300.png) | Matt Gottsacker; Raiffa Syamil; Pamela Wisniewski; Gerd Bruder; Carolina Cruz-Neira; Gregory F. Welch [POSTER] Exploring Cues and Signaling to Improve Cross-Reality Interruptions Proceedings Article In: Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 827–832, 2022. @inproceedings{nokey,

title = {[POSTER] Exploring Cues and Signaling to Improve Cross-Reality Interruptions},

author = {Matt Gottsacker and Raiffa Syamil and Pamela Wisniewski and Gerd Bruder and Carolina Cruz-Neira and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/09/ISMAR22_CrossReality_camready_3.pdf},

doi = {10.1109/ISMAR-Adjunct57072.2022.00179},

year = {2022},

date = {2022-10-15},

urldate = {2022-10-15},

booktitle = {Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages = {827--832},

abstract = {In this paper, we report on initial work exploring the potential value of technology-mediated cues and signals to improve cross-reality interruptions. We investigated the use of color-coded visual cues (LED lights) to help a person decide when to interrupt a virtual reality (VR) user, and a gesture-based mechanism (waving at the user) to signal their desire to do so. To assess the potential value of these mechanisms we conducted a preliminary 2×3 within-subjects experimental design user study (N = 10) where the participants acted in the role of the interrupter. While we found that our visual cues improved participants’ experiences, our gesture-based signaling mechanism did not, as users did not trust it nor consider it as intuitive as a speech-based mechanism might be. Our preliminary findings motivate further investigation of interruption cues and signaling mechanisms to inform future VR head-worn display system designs.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

In this paper, we report on initial work exploring the potential value of technology-mediated cues and signals to improve cross-reality interruptions. We investigated the use of color-coded visual cues (LED lights) to help a person decide when to interrupt a virtual reality (VR) user, and a gesture-based mechanism (waving at the user) to signal their desire to do so. To assess the potential value of these mechanisms we conducted a preliminary 2×3 within-subjects experimental design user study (N = 10) where the participants acted in the role of the interrupter. While we found that our visual cues improved participants’ experiences, our gesture-based signaling mechanism did not, as users did not trust it nor consider it as intuitive as a speech-based mechanism might be. Our preliminary findings motivate further investigation of interruption cues and signaling mechanisms to inform future VR head-worn display system designs. |

| Meelad Doroodchi; Priscilla Ramos; Austin Erickson; Hiroshi Furuya; Juanita Benjamin; Gerd Bruder; Gregory F. Welch Effects of Optical See-Through Displays on Self-Avatar Appearance in Augmented Reality Proceedings Article In: Proceedings of the International Symposium on Mixed and Augmented Reality (ISMAR), IEEE 2022. @inproceedings{Ramos2022,

title = {Effects of Optical See-Through Displays on Self-Avatar Appearance in Augmented Reality},

author = {Meelad Doroodchi and Priscilla Ramos and Austin Erickson and Hiroshi Furuya and Juanita Benjamin and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/IDEATExR2022_REU_Paper.pdf},

year = {2022},

date = {2022-08-17},

urldate = {2022-08-31},

booktitle = {Proceedings of the International Symposium on Mixed and Augmented Reality (ISMAR)},

organization = {IEEE},

abstract = {Display technologies in the fields of virtual and augmented reality can affect the appearance of human representations, such as avatars used in telepresence or entertainment applications. In this paper, we describe a user study (N=20) where participants saw themselves in a mirror side-by-side with their own avatar, through use of a HoloLens 2 optical see-through head-mounted display. Participants were tasked to match their avatar’s appearance to their own under two environment lighting conditions (200 lux and 2,000 lux). Our results showed that the intensity of environment lighting had a significant effect on participants’ selected skin colors for their avatars, where participants with dark skin colors tended to make their avatar’s skin color lighter, nearly to the level of participants with light skin color. Further, female participants in particular made their avatar’s hair color darker for the lighter environment lighting condition. We discuss our results with a view on technological limitations and effects on the diversity of avatar representations on optical see-through displays.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Austin Erickson; Gerd Bruder; Gregory F. Welch Analysis of the Saliency of Color-Based Dichoptic Cues in Optical See-Through Augmented Reality Journal Article In: Transactions on Visualization and Computer Graphics, pp. 1-15, 2022. @article{Erickson2022b,

title = {Analysis of the Saliency of Color-Based Dichoptic Cues in Optical See-Through Augmented Reality},

author = {Austin Erickson and Gerd Bruder and Gregory F. Welch},

editor = {Klaus Mueller},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/07/ARPreattentiveCues-1.pdf},

doi = {10.1109/TVCG.2022.3195111},

year = {2022},

date = {2022-07-26},

urldate = {2022-07-26},

journal = {Transactions on Visualization and Computer Graphics},

pages = {1-15},

abstract = {In a future of pervasive augmented reality (AR), AR systems will need to be able to efficiently draw or guide the attention of the user to visual points of interest in their physical-virtual environment. Since AR imagery is overlaid on top of the user’s view of their physical environment, these attention guidance techniques must not only compete with other virtual imagery, but also with distracting or attention-grabbing features in the user’s physical environment. Because of the wide range of physical-virtual environments that pervasive AR users will find themselves in, it is difficult to design visual cues that “pop out” to the user without performing a visual analysis of the user’s environment, and changing the appearance of the cue to stand out from its surroundings.

In this paper, we present an initial investigation into the potential uses of dichoptic visual cues for optical see-through AR displays, specifically cues that involve having a difference in hue, saturation, or value between the user’s eyes. These types of cues have been shown to be preattentively processed by the user when presented on other stereoscopic displays, and may also be an effective method of drawing user attention on optical see-through AR displays. We present two user studies: one that evaluates the saliency of dichoptic visual cues on optical see-through displays, and one that evaluates their subjective qualities. Our results suggest that hue-based dichoptic cues or “Forbidden Colors” may be particularly effective for these purposes, achieving significantly lower error rates in a pop out task compared to value-based and saturation-based cues.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|
