2024

|

| Matt Gottsacker; Hiroshi Furuya; Zubin Choudhary; Austin Erickson; Ryan Schubert; Gerd Bruder; Michael P. Browne; Gregory F. Welch Investigating the relationships between user behaviors and tracking factors on task performance and trust in augmented reality Journal Article In: Elsevier Computers & Graphics, vol. 123, pp. 1-14, 2024. @article{gottsacker2024trust,

title = {Investigating the relationships between user behaviors and tracking factors on task performance and trust in augmented reality},

author = {Matt Gottsacker and Hiroshi Furuya and Zubin Choudhary and Austin Erickson and Ryan Schubert and Gerd Bruder and Michael P. Browne and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/08/C_G____ARTrust____Accuracy___Precision.pdf},

doi = {https://doi.org/10.1016/j.cag.2024.104035},

year = {2024},

date = {2024-08-06},

urldate = {2024-08-06},

journal = {Elsevier Computers & Graphics},

volume = {123},

pages = {1-14},

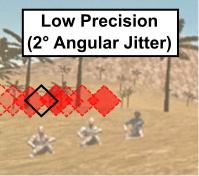

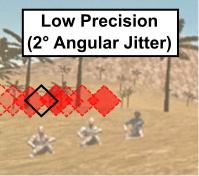

abstract = {This research paper explores the impact of augmented reality (AR) tracking characteristics, specifically an AR head-worn display's tracking registration accuracy and precision, on users' spatial abilities and subjective perceptions of trust in and reliance on the technology. Our study aims to clarify the relationships between user performance and the different behaviors users may employ based on varying degrees of trust in and reliance on AR. Our controlled experimental setup used a 360 field-of-regard search-and-selection task and combines the immersive aspects of a CAVE-like environment with AR overlays viewed with a head-worn display. We investigated three levels of simulated AR tracking errors in terms of both accuracy and precision (+0, +1, +2). We controlled for four user task behaviors that correspond to different levels of trust in and reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-Only (only relying on real world), and Real-First (prioritizing real world over AR). By controlling for these behaviors, our results showed that even small amounts of AR tracking errors had noticeable effects on users' task performance, especially if they relied completely on the AR cues (AR-Only). Our results link AR tracking characteristics with user behavior, highlighting the importance of understanding these elements to improve AR technology and user satisfaction.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

This research paper explores the impact of augmented reality (AR) tracking characteristics, specifically an AR head-worn display's tracking registration accuracy and precision, on users' spatial abilities and subjective perceptions of trust in and reliance on the technology. Our study aims to clarify the relationships between user performance and the different behaviors users may employ based on varying degrees of trust in and reliance on AR. Our controlled experimental setup used a 360 field-of-regard search-and-selection task and combines the immersive aspects of a CAVE-like environment with AR overlays viewed with a head-worn display. We investigated three levels of simulated AR tracking errors in terms of both accuracy and precision (+0, +1, +2). We controlled for four user task behaviors that correspond to different levels of trust in and reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-Only (only relying on real world), and Real-First (prioritizing real world over AR). By controlling for these behaviors, our results showed that even small amounts of AR tracking errors had noticeable effects on users' task performance, especially if they relied completely on the AR cues (AR-Only). Our results link AR tracking characteristics with user behavior, highlighting the importance of understanding these elements to improve AR technology and user satisfaction. |

| Gerd Bruder; Michael Browne; Zubin Choudhary; Austin Erickson; Hiroshi Furuya; Matt Gottsacker; Ryan Schubert; Gregory Welch Visual Factors Influencing Trust and Reliance with Augmented Reality Systems Journal Article In: Journal of Vision Abstracts—Vision Sciences Society (VSS) Annual Meeting, 2024. @article{Bruder2024,

title = {Visual Factors Influencing Trust and Reliance with Augmented Reality Systems},

author = {Gerd Bruder and Michael Browne and Zubin Choudhary and Austin Erickson and Hiroshi Furuya and Matt Gottsacker and Ryan Schubert and Gregory Welch},

year = {2024},

date = {2024-05-17},

urldate = {2024-05-17},

journal = {Journal of Vision Abstracts—Vision Sciences Society (VSS) Annual Meeting},

abstract = {Augmented Reality (AR) systems are increasingly used for simulations, training, and operations across a wide range of application fields. Unfortunately, the imagery that current AR systems create often does not match our visual perception of the real world, which can make users feel like the AR system is not believable. This lack of belief can lead to negative training or experiences, where users lose trust in the AR system and adjust their reliance on AR. The latter is characterized by users adopting different cognitive perception-action pathways by which they integrate AR visual information for spatial tasks. In this work, we present a series of six within-subjects experiments (each N=20) in which we investigated trust in AR with respect to two display factors (field of view and visual contrast), two tracking factors (accuracy and precision), and two network factors (latency and dropouts). Participants performed a 360-degree visual search-and-selection task in a hybrid setup involving an AR head-mounted display and a CAVE-like simulated real environment. Participants completed the experiments with four perception-action pathways that represent different levels of the users’ reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-First (prioritizing real world over AR), and Real-Only (only relying on real world). Our results show that participants’ perception-action pathways and objective task performance were significantly affected by all six tested AR factors. In contrast, we found that their subjective responses for trust and reliance were often more affected by slight AR system differences than would elicit objective performance differences, and participants tended to overestimate or underestimate the trustworthiness of the AR system. Participants showed significantly higher task performance gains if their sense of trust was well-calibrated to the trustworthiness of the AR system, highlighting the importance of effectively managing users’ trust in future AR systems.

Acknowledgements: This material includes work supported in part by Vision Products LLC via US Air Force Research Laboratory (AFRL) Award Number FA864922P1038, and the Office of Naval Research under Award Numbers N00014-21-1-2578 and N00014-21-1-2882 (Dr. Peter Squire, Code 34).},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Augmented Reality (AR) systems are increasingly used for simulations, training, and operations across a wide range of application fields. Unfortunately, the imagery that current AR systems create often does not match our visual perception of the real world, which can make users feel like the AR system is not believable. This lack of belief can lead to negative training or experiences, where users lose trust in the AR system and adjust their reliance on AR. The latter is characterized by users adopting different cognitive perception-action pathways by which they integrate AR visual information for spatial tasks. In this work, we present a series of six within-subjects experiments (each N=20) in which we investigated trust in AR with respect to two display factors (field of view and visual contrast), two tracking factors (accuracy and precision), and two network factors (latency and dropouts). Participants performed a 360-degree visual search-and-selection task in a hybrid setup involving an AR head-mounted display and a CAVE-like simulated real environment. Participants completed the experiments with four perception-action pathways that represent different levels of the users’ reliance on an AR system: AR-Only (only relying on AR), AR-First (prioritizing AR over real world), Real-First (prioritizing real world over AR), and Real-Only (only relying on real world). Our results show that participants’ perception-action pathways and objective task performance were significantly affected by all six tested AR factors. In contrast, we found that their subjective responses for trust and reliance were often more affected by slight AR system differences than would elicit objective performance differences, and participants tended to overestimate or underestimate the trustworthiness of the AR system. Participants showed significantly higher task performance gains if their sense of trust was well-calibrated to the trustworthiness of the AR system, highlighting the importance of effectively managing users’ trust in future AR systems.

Acknowledgements: This material includes work supported in part by Vision Products LLC via US Air Force Research Laboratory (AFRL) Award Number FA864922P1038, and the Office of Naval Research under Award Numbers N00014-21-1-2578 and N00014-21-1-2882 (Dr. Peter Squire, Code 34). |

| Juanita Benjamin; Austin Erickson; Matt Gottsacker; Gerd Bruder; Gregory Welch Evaluating Transitive Perceptual Effects Between Virtual Entities in Outdoor Augmented Reality Proceedings Article In: Proceedings of IEEE Virtual Reality (VR), pp. 1-11, 2024. @inproceedings{Benjamin2024et,

title = {Evaluating Transitive Perceptual Effects Between Virtual Entities in Outdoor Augmented Reality},

author = {Juanita Benjamin and Austin Erickson and Matt Gottsacker and Gerd Bruder and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/02/Benjamin2024.pdf},

year = {2024},

date = {2024-03-16},

urldate = {2024-03-16},

booktitle = {Proceedings of IEEE Virtual Reality (VR)},

pages = {1-11},

abstract = {Augmented reality (AR) head-mounted displays (HMDs) provide users with a view in which digital content is blended spatially with the outside world. However, one critical issue faced with such display technologies is misperception, i.e., perceptions of computer-generated content that differs from our human perception of other real-world objects or entities. Misperception can lead to mistrust in these systems and negative impacts in a variety of application fields. Although there is a considerable amount of research investigating either size, distance, or speed misperception in AR, far less is known about the relationships between these aspects. In this paper, we present an outdoor AR experiment (N=20) using a HoloLens 2 HMD. Participants estimated size, distance, and speed of Familiar and Unfamiliar outdoor animals at three distances (30, 60, 90 meters). To investigate whether providing information about one aspect may influence another, we divided our experiment into three phases. In Phase I, participants estimated the three aspects without any provided information. In Phase II, participants were given accurate size information, then asked to estimate distance and speed. In Phase III, participants were given accurate distance and size information, then asked to estimate speed. Our results show that estimates of speed in particular of the Unfamiliar animals benefited from provided size information, while speed estimates of all animals benefited from provided distance information. We found no support for the assumption that distance estimates benefited from provided size information.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Augmented reality (AR) head-mounted displays (HMDs) provide users with a view in which digital content is blended spatially with the outside world. However, one critical issue faced with such display technologies is misperception, i.e., perceptions of computer-generated content that differs from our human perception of other real-world objects or entities. Misperception can lead to mistrust in these systems and negative impacts in a variety of application fields. Although there is a considerable amount of research investigating either size, distance, or speed misperception in AR, far less is known about the relationships between these aspects. In this paper, we present an outdoor AR experiment (N=20) using a HoloLens 2 HMD. Participants estimated size, distance, and speed of Familiar and Unfamiliar outdoor animals at three distances (30, 60, 90 meters). To investigate whether providing information about one aspect may influence another, we divided our experiment into three phases. In Phase I, participants estimated the three aspects without any provided information. In Phase II, participants were given accurate size information, then asked to estimate distance and speed. In Phase III, participants were given accurate distance and size information, then asked to estimate speed. Our results show that estimates of speed in particular of the Unfamiliar animals benefited from provided size information, while speed estimates of all animals benefited from provided distance information. We found no support for the assumption that distance estimates benefited from provided size information. |

2023

|

| Zubin Choudhary; Nahal Norouzi; Austin Erickson; Ryan Schubert; Gerd Bruder; Gregory F. Welch Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions Conference Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023, 2023. @conference{Choudhary2023,

title = {Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions},

author = {Zubin Choudhary and Nahal Norouzi and Austin Erickson and Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/01/PostReview_ConflictingEmotions_IEEEVR23-1.pdf},

year = {2023},

date = {2023-03-29},

urldate = {2023-03-29},

booktitle = {Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023},

abstract = {The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e. the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the

avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22 ) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigate three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggest that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increase, the less predictable the multimodal expression becomes. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness rating and high trust, however if one of the two expressions change, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e. the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the

avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22 ) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigate three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggest that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increase, the less predictable the multimodal expression becomes. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness rating and high trust, however if one of the two expressions change, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues. |

2022

|

| Zubin Choudhary; Austin Erickson; Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Greg Welch Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues Journal Article In: ACM Transactions on Applied Perception, 2022. @article{Choudhary2022,

title = {Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues},

author = {Zubin Choudhary and Austin Erickson and Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://drive.google.com/file/d/1jdxwLchDH0RPouVENoSx8iSOyDmJhqKb/view?usp=sharing},

year = {2022},

date = {2022-11-02},

urldate = {2022-11-02},

journal = {ACM Transactions on Applied Perception},

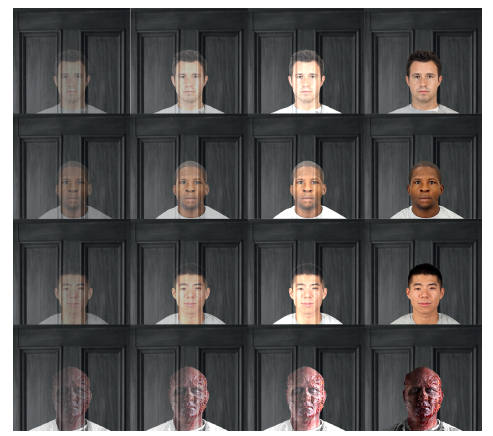

abstract = {Extended reality (XR) technologies, such as virtual reality (VR) and augmented reality (AR), provide users, their avatars, and embodied agents a shared platform to collaborate in a spatial context. While traditional face-to-face communication is limited by users' proximity, meaning that another human's non-verbal embodied cues become more difficult to perceive the farther one is away from that person, In this paper, we describe and evaluate the ``Big Head'' technique, in which a human's head in VR/AR is scaled up relative to their distance from the observer as a mechanism for enhancing the visibility of non-verbal facial cues, such as facial expressions or eye gaze. To better understand and explore this technique, we present two complimentary human-subject experiments in this paper.

In our first experiment, we conducted a VR study with a head-mounted display (HMD) to understand the impact of increased or decreased head scales on participants' ability to perceive facial expressions as well as their sense of comfort and feeling of ``uncannniness'' over distances of up to 10 meters. We explored two different scaling methods and compared perceptual thresholds and user preferences. Our second experiment was performed in an outdoor AR environment with an optical see-through (OST) HMD. Participants were asked to estimate facial expressions and eye gaze, and identify a virtual human over large distances of 30, 60, and 90 meters. In both experiments, our results show significant differences in minimum, maximum, and ideal head scales for different distances and tasks related to perceiving faces, facial expressions, and eye gaze, while we also found that participants were more comfortable with slightly bigger heads at larger distances. We discuss our findings with respect to the technologies used, and we discuss implications and guidelines for practical applications that aim to leverage XR-enhanced facial cues.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Extended reality (XR) technologies, such as virtual reality (VR) and augmented reality (AR), provide users, their avatars, and embodied agents a shared platform to collaborate in a spatial context. While traditional face-to-face communication is limited by users' proximity, meaning that another human's non-verbal embodied cues become more difficult to perceive the farther one is away from that person, In this paper, we describe and evaluate the ``Big Head'' technique, in which a human's head in VR/AR is scaled up relative to their distance from the observer as a mechanism for enhancing the visibility of non-verbal facial cues, such as facial expressions or eye gaze. To better understand and explore this technique, we present two complimentary human-subject experiments in this paper.

In our first experiment, we conducted a VR study with a head-mounted display (HMD) to understand the impact of increased or decreased head scales on participants' ability to perceive facial expressions as well as their sense of comfort and feeling of ``uncannniness'' over distances of up to 10 meters. We explored two different scaling methods and compared perceptual thresholds and user preferences. Our second experiment was performed in an outdoor AR environment with an optical see-through (OST) HMD. Participants were asked to estimate facial expressions and eye gaze, and identify a virtual human over large distances of 30, 60, and 90 meters. In both experiments, our results show significant differences in minimum, maximum, and ideal head scales for different distances and tasks related to perceiving faces, facial expressions, and eye gaze, while we also found that participants were more comfortable with slightly bigger heads at larger distances. We discuss our findings with respect to the technologies used, and we discuss implications and guidelines for practical applications that aim to leverage XR-enhanced facial cues. |

![[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays](https://sreal.ucf.edu/wp-content/uploads/2022/10/phonefigure2-e1664926618216-150x150.png) | Austin Erickson; Gerd Bruder; Gregory F Welch [Poster] Adapting Michelson Contrast for use with Optical See-Through Displays Proceedings Article In: In Adjunct Proceedings of the IEEE International Symposium on Mixed and Augmented Reality, pp. 1–2, IEEE IEEE, 2022. @inproceedings{Erickson2022c,

title = {[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays},

author = {Austin Erickson and Gerd Bruder and Gregory F Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/ISMARContrastModel_POSTER.pdf},

year = {2022},

date = {2022-10-17},

urldate = {2022-10-17},

booktitle = {In Adjunct Proceedings of the IEEE International Symposium on Mixed and Augmented Reality},

pages = {1--2},

publisher = {IEEE},

organization = {IEEE},

abstract = {Due to the additive light model employed by current optical see-through head-mounted displays (OST-HMDs), the perceived contrast of displayed imagery is reduced with increased environment luminance, often to the point where it becomes difficult for the user to accurately distinguish the presence of visual imagery. While existing contrast models, such as Weber contrast and Michelson contrast, can be used to predict when the observer will experience difficulty distinguishing and interpreting stimuli on traditional displays, these models must be adapted for use with additive displays. In this paper, we present a simplified model of luminance contrast for optical see-through displays derived from Michelson’s contrast equation and demonstrate two applications of the model: informing design decisions involving the color of virtual imagery and optimizing environment light attenuation through the use of neutral density filters.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Due to the additive light model employed by current optical see-through head-mounted displays (OST-HMDs), the perceived contrast of displayed imagery is reduced with increased environment luminance, often to the point where it becomes difficult for the user to accurately distinguish the presence of visual imagery. While existing contrast models, such as Weber contrast and Michelson contrast, can be used to predict when the observer will experience difficulty distinguishing and interpreting stimuli on traditional displays, these models must be adapted for use with additive displays. In this paper, we present a simplified model of luminance contrast for optical see-through displays derived from Michelson’s contrast equation and demonstrate two applications of the model: informing design decisions involving the color of virtual imagery and optimizing environment light attenuation through the use of neutral density filters. |

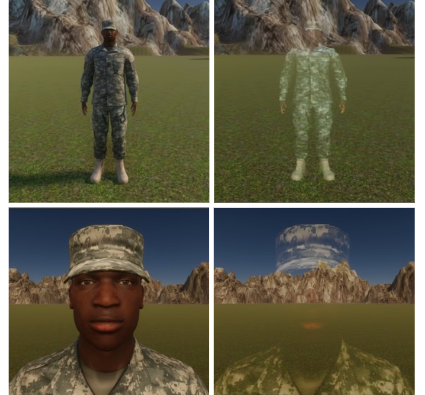

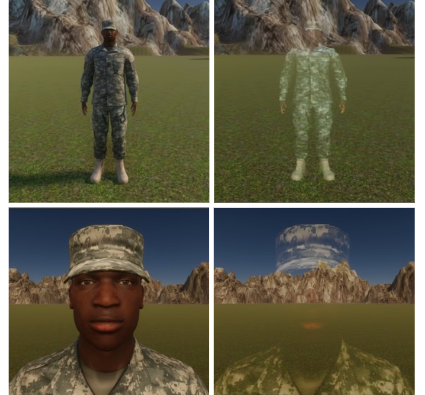

| Meelad Doroodchi; Priscilla Ramos; Austin Erickson; Hiroshi Furuya; Juanita Benjamin; Gerd Bruder; Gregory F. Welch Effects of Optical See-Through Displays on Self-Avatar Appearance in Augmented Reality Proceedings Article In: Proceedings of the International Symposium on Mixed and Augmented Reality (ISMAR), IEEE 2022. @inproceedings{Ramos2022,

title = {Effects of Optical See-Through Displays on Self-Avatar Appearance in Augmented Reality},

author = {Meelad Doroodchi and Priscilla Ramos and Austin Erickson and Hiroshi Furuya and Juanita Benjamin and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/IDEATExR2022_REU_Paper.pdf},

year = {2022},

date = {2022-08-17},

urldate = {2022-08-31},

booktitle = {Proceedings of the International Symposium on Mixed and Augmented Reality (ISMAR)},

organization = {IEEE},

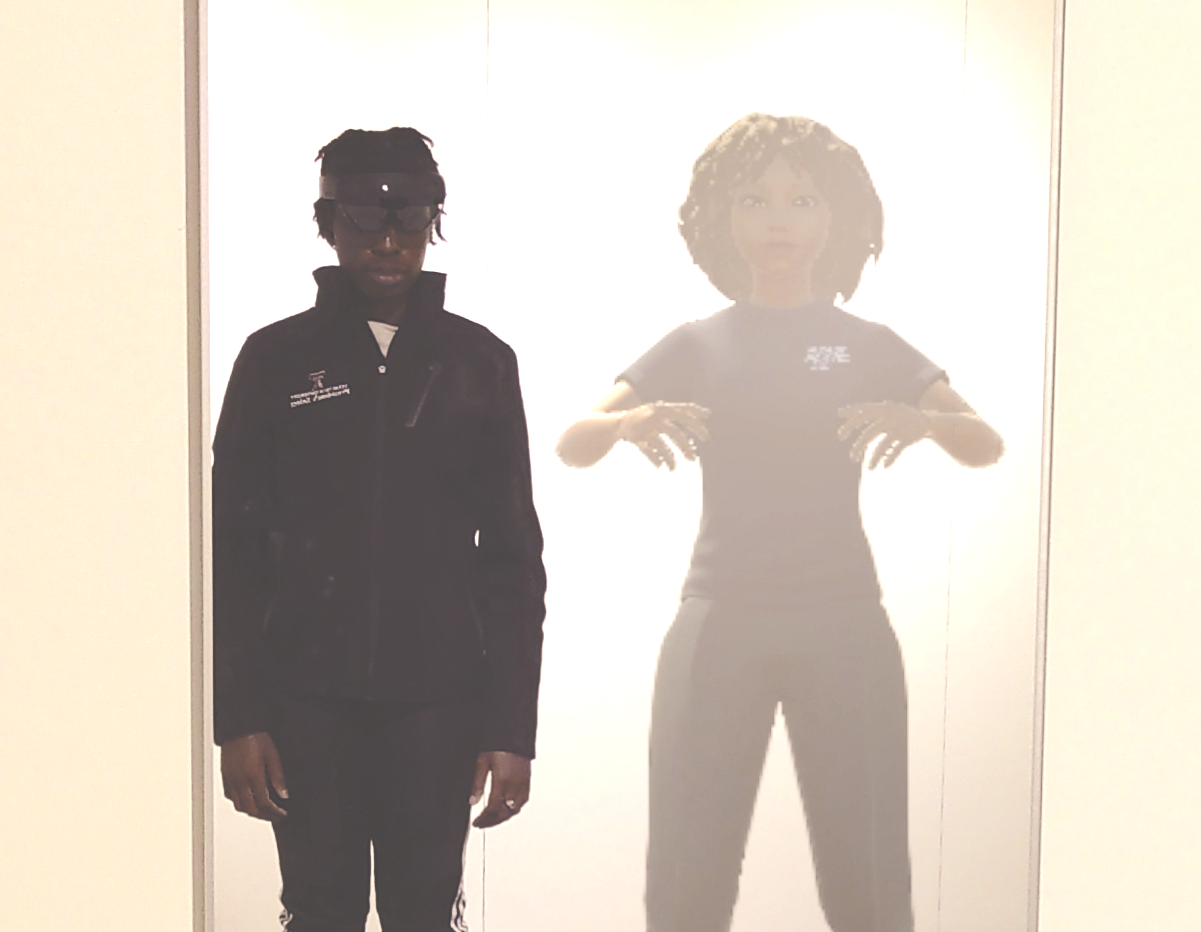

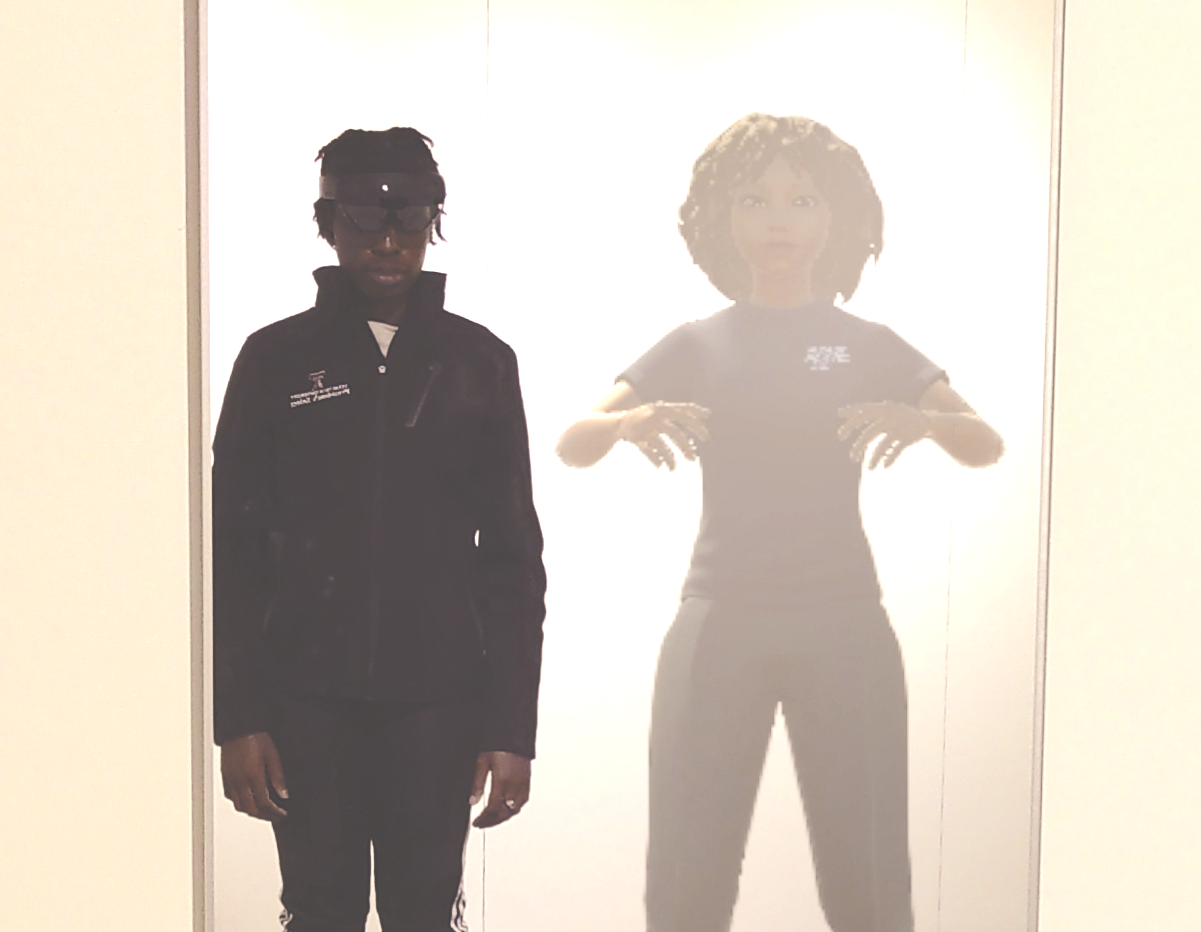

abstract = {Display technologies in the fields of virtual and augmented reality can affect the appearance of human representations, such as avatars used in telepresence or entertainment applications. In this paper, we describe a user study (N=20) where participants saw themselves in a mirror side-by-side with their own avatar, through use of a HoloLens 2 optical see-through head-mounted display. Participants were tasked to match their avatar’s appearance to their own under two environment lighting conditions (200 lux and 2,000 lux). Our results showed that the intensity of environment lighting had a significant effect on participants selected skin colors for their avatars, where participants with dark skin colors tended to make their avatar’s skin color lighter, nearly to the level of participants with light skin color. Further, in particular female participants made their avatar’s hair color darker for the lighter environment lighting condition. We discuss our results with a view on technological limitations and effects on the diversity of avatar representations on optical see-through displays.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Display technologies in the fields of virtual and augmented reality can affect the appearance of human representations, such as avatars used in telepresence or entertainment applications. In this paper, we describe a user study (N=20) where participants saw themselves in a mirror side-by-side with their own avatar, through use of a HoloLens 2 optical see-through head-mounted display. Participants were tasked to match their avatar’s appearance to their own under two environment lighting conditions (200 lux and 2,000 lux). Our results showed that the intensity of environment lighting had a significant effect on participants selected skin colors for their avatars, where participants with dark skin colors tended to make their avatar’s skin color lighter, nearly to the level of participants with light skin color. Further, in particular female participants made their avatar’s hair color darker for the lighter environment lighting condition. We discuss our results with a view on technological limitations and effects on the diversity of avatar representations on optical see-through displays. |

| Austin Erickson; Gerd Bruder; Gregory F. Welch Analysis of the Saliency of Color-Based Dichoptic Cues in Optical See-Through Augmented Reality Journal Article In: Transactions on Visualization and Computer Graphics, pp. 1-15, 2022. @article{Erickson2022b,

title = {Analysis of the Saliency of Color-Based Dichoptic Cues in Optical See-Through Augmented Reality},

author = {Austin Erickson and Gerd Bruder and Gregory F. Welch},

editor = {Klaus Mueller},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/07/ARPreattentiveCues-1.pdf},

doi = {10.1109/TVCG.2022.3195111},

year = {2022},

date = {2022-07-26},

urldate = {2022-07-26},

journal = {Transactions on Visualization and Computer Graphics},

pages = {1-15},

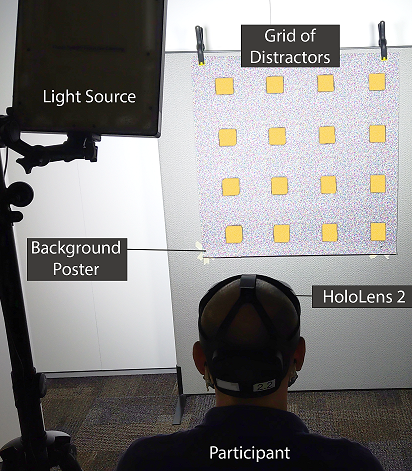

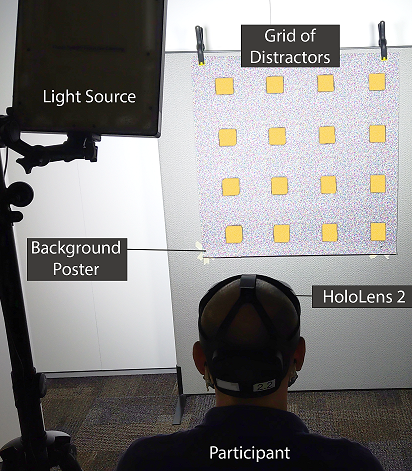

abstract = {In a future of pervasive augmented reality (AR), AR systems will need to be able to efficiently draw or guide the attention of the user to visual points of interest in their physical-virtual environment. Since AR imagery is overlaid on top of the user’s view of their physical environment, these attention guidance techniques must not only compete with other virtual imagery, but also with distracting or attention-grabbing features in the user’s physical environment. Because of the wide range of physical-virtual environments that pervasive AR users will find themselves in, it is difficult to design visual cues that “pop out” to the user without performing a visual analysis of the user’s environment, and changing the appearance of the cue to stand out from its surroundings.

In this paper, we present an initial investigation into the potential uses of dichoptic visual cues for optical see-through AR displays, specifically cues that involve having a difference in hue, saturation, or value between the user’s eyes. These types of cues have been shown to be preattentively processed by the user when presented on other stereoscopic displays, and may also be an effective method of drawing user attention on optical see-through AR displays. We present two user studies: one that evaluates the saliency of dichoptic visual cues on optical see-through displays, and one that evaluates their subjective qualities. Our results suggest that hue-based dichoptic cues or “Forbidden Colors” may be particularly effective for these purposes, achieving significantly lower error rates in a pop out task compared to value-based and saturation-based cues.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

In a future of pervasive augmented reality (AR), AR systems will need to be able to efficiently draw or guide the attention of the user to visual points of interest in their physical-virtual environment. Since AR imagery is overlaid on top of the user’s view of their physical environment, these attention guidance techniques must not only compete with other virtual imagery, but also with distracting or attention-grabbing features in the user’s physical environment. Because of the wide range of physical-virtual environments that pervasive AR users will find themselves in, it is difficult to design visual cues that “pop out” to the user without performing a visual analysis of the user’s environment, and changing the appearance of the cue to stand out from its surroundings.

In this paper, we present an initial investigation into the potential uses of dichoptic visual cues for optical see-through AR displays, specifically cues that involve having a difference in hue, saturation, or value between the user’s eyes. These types of cues have been shown to be preattentively processed by the user when presented on other stereoscopic displays, and may also be an effective method of drawing user attention on optical see-through AR displays. We present two user studies: one that evaluates the saliency of dichoptic visual cues on optical see-through displays, and one that evaluates their subjective qualities. Our results suggest that hue-based dichoptic cues or “Forbidden Colors” may be particularly effective for these purposes, achieving significantly lower error rates in a pop out task compared to value-based and saturation-based cues. |

| Austin Erickson; Gerd Bruder; Gregory Welch; Isaac Bynum; Tabitha Peck; Jessica Good Perceived Humanness Bias in Additive Light Model Displays (Poster) Journal Article In: Journal of Vision, iss. Journal of Vision, 2022. @article{Erickson2022,

title = {Perceived Humanness Bias in Additive Light Model Displays (Poster)},

author = {Austin Erickson and Gerd Bruder and Gregory Welch and Isaac Bynum and Tabitha Peck and Jessica Good},

url = {https://www.visionsciences.org/presentation/?id=4201

},

year = {2022},

date = {2022-05-17},

urldate = {2022-05-17},

journal = {Journal of Vision},

issue = {Journal of Vision},

publisher = {Association for Research in Vision and Ophthalmology (ARVO)},

abstract = {Additive light model displays, such as optical see-through augmented reality displays, create imagery by adding light over a physical scene. While these types of displays are commonly used, they are limited in their ability to display dark, low-luminance colors. As a result of this, these displays cannot render the color black and other similar colors, and instead the resulting color is rendered as completely transparent. This optical limitation introduces perceptual problems, as virtual imagery with dark colors appears semi-transparent, while lighter colored imagery is more opaque. We generated an image set of virtual humans that captures the peculiarities of imagery shown on an additive display by performing a perceptual matching task between imagery shown on a Microsoft HoloLens and imagery shown on a flat panel display. We then used this image set to run an online user study to explore whether this optical limitation introduces bias in user perception of virtual humans of different skin colors. We evaluated virtual avatars and virtual humans at different opacity levels ranging from how they currently appear on the Microsoft HoloLens, to how they would appear on a display without transparency and color blending issues. Our results indicate that, regardless of skin tone, the perceived humanness of the virtual humans and avatars decreases with respect to opacity level. As a result of this, virtual humans with darker skin tones are perceived as less human compared to those with lighter skin tones. This result suggests that there may be an unintentional racial bias when using applications involving telepresence or virtual humans on additive light model displays. While optical and hardware solutions to this problem are likely years away, we emphasize that future work should investigate how some of these perceptual issues may be overcome via software-based methods.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Additive light model displays, such as optical see-through augmented reality displays, create imagery by adding light over a physical scene. While these types of displays are commonly used, they are limited in their ability to display dark, low-luminance colors. As a result of this, these displays cannot render the color black and other similar colors, and instead the resulting color is rendered as completely transparent. This optical limitation introduces perceptual problems, as virtual imagery with dark colors appears semi-transparent, while lighter colored imagery is more opaque. We generated an image set of virtual humans that captures the peculiarities of imagery shown on an additive display by performing a perceptual matching task between imagery shown on a Microsoft HoloLens and imagery shown on a flat panel display. We then used this image set to run an online user study to explore whether this optical limitation introduces bias in user perception of virtual humans of different skin colors. We evaluated virtual avatars and virtual humans at different opacity levels ranging from how they currently appear on the Microsoft HoloLens, to how they would appear on a display without transparency and color blending issues. Our results indicate that, regardless of skin tone, the perceived humanness of the virtual humans and avatars decreases with respect to opacity level. As a result of this, virtual humans with darker skin tones are perceived as less human compared to those with lighter skin tones. This result suggests that there may be an unintentional racial bias when using applications involving telepresence or virtual humans on additive light model displays. While optical and hardware solutions to this problem are likely years away, we emphasize that future work should investigate how some of these perceptual issues may be overcome via software-based methods. |

| Yifan Li, Kangsoo Kim, Austin Erickson, Nahal Norouzi, Jonathan Jules, Gerd Bruder, Greg Welch A Scoping Review of Assistance and Therapy with Head-Mounted Displays for People Who Are Visually Impaired Journal Article In: ACM Transactions on Accessible Computing, vol. 00, iss. 00, no. 00, pp. 25, 2022, ISSN: 1936-7228. @article{Li2022,

title = {A Scoping Review of Assistance and Therapy with Head-Mounted Displays for People Who Are Visually Impaired},

author = {Yifan Li, Kangsoo Kim, Austin Erickson, Nahal Norouzi, Jonathan Jules, Gerd Bruder, Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/04/scoping.pdf},

doi = {10.1145/3522693},

issn = {1936-7228},

year = {2022},

date = {2022-04-21},

urldate = {2022-04-21},

journal = {ACM Transactions on Accessible Computing},

volume = {00},

number = {00},

issue = {00},

pages = {25},

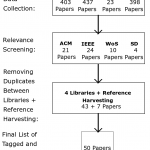

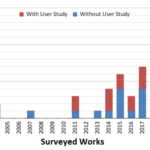

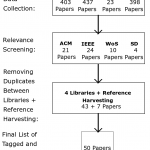

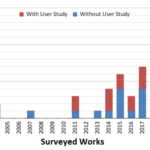

abstract = {Given the inherent visual affordances of Head-Mounted Displays (HMDs) used for Virtual and Augmented Reality (VR/AR), they have been actively used over many years as assistive and therapeutic devices for the people who are visually impaired. In this paper, we report on a scoping review of literature describing the use of HMDs in these areas. Our high-level objectives included detailed reviews and quantitative analyses of the literature, and the development of insights related to emerging trends and future research directions.

Our review began with a pool of 1251 papers collected through a variety of mechanisms. Through a structured screening process, we identified 61 English research papers employing HMDs to enhance the visual sense of people with visual impairments for more detailed analyses. Our analyses reveal that there is an increasing amount of HMD-based research on visual assistance and therapy, and there are trends in the approaches associated with the research objectives. For example, AR is most often used for visual assistive purposes, whereas VR is used for therapeutic purposes. We report on eight existing survey papers, and present detailed analyses of the 61 research papers, looking at the mitigation objectives of the researchers (assistive versus therapeutic), the approaches used, the types of HMDs, the targeted visual conditions, and the inclusion of user studies. In addition to our detailed reviews and analyses of the various characteristics, we present observations related to apparent emerging trends and future research directions.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Given the inherent visual affordances of Head-Mounted Displays (HMDs) used for Virtual and Augmented Reality (VR/AR), they have been actively used over many years as assistive and therapeutic devices for the people who are visually impaired. In this paper, we report on a scoping review of literature describing the use of HMDs in these areas. Our high-level objectives included detailed reviews and quantitative analyses of the literature, and the development of insights related to emerging trends and future research directions.

Our review began with a pool of 1251 papers collected through a variety of mechanisms. Through a structured screening process, we identified 61 English research papers employing HMDs to enhance the visual sense of people with visual impairments for more detailed analyses. Our analyses reveal that there is an increasing amount of HMD-based research on visual assistance and therapy, and there are trends in the approaches associated with the research objectives. For example, AR is most often used for visual assistive purposes, whereas VR is used for therapeutic purposes. We report on eight existing survey papers, and present detailed analyses of the 61 research papers, looking at the mitigation objectives of the researchers (assistive versus therapeutic), the approaches used, the types of HMDs, the targeted visual conditions, and the inclusion of user studies. In addition to our detailed reviews and analyses of the various characteristics, we present observations related to apparent emerging trends and future research directions. |

| Jesus Ugarte; Nahal Norouzi; Austin Erickson; Gerd Bruder; Greg Welch

Distant Hand Interaction Framework in Augmented Reality Proceedings Article In: Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 962-963, IEEE IEEE, Christchurch, New Zealand, 2022. @inproceedings{Ugarte2022,

title = {Distant Hand Interaction Framework in Augmented Reality},

author = {Jesus Ugarte and Nahal Norouzi and Austin Erickson and Gerd Bruder and Greg Welch

},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/05/Distant_Hand_Interaction_Framework_in_Augmented_Reality.pdf},

doi = {10.1109/VRW55335.2022.00332},

year = {2022},

date = {2022-03-16},

urldate = {2022-03-16},

booktitle = {Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages = {962-963},

publisher = {IEEE},

address = {Christchurch, New Zealand},

organization = {IEEE},

series = {2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

abstract = {Recent augmented reality (AR) head-mounted displays support shared experiences among multiple users in real physical spaces. While previous research looked at different embodied methods to enhance interpersonal communication cues, so far, less research looked at distant interaction in AR and, in particular, distant hand communication, which can open up new possibilities for scenarios, such as large-group collaboration. In this demonstration, we present a research framework for distant hand interaction in AR, including mapping techniques and visualizations. Our techniques are inspired by virtual reality (VR) distant hand interactions, but had to be adjusted due to the different context in AR and limited knowledge about the physical environment. We discuss different techniques for hand communication, including deictic pointing at a distance, distant drawing in AR, and distant communication through symbolic hand gestures.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Recent augmented reality (AR) head-mounted displays support shared experiences among multiple users in real physical spaces. While previous research looked at different embodied methods to enhance interpersonal communication cues, so far, less research looked at distant interaction in AR and, in particular, distant hand communication, which can open up new possibilities for scenarios, such as large-group collaboration. In this demonstration, we present a research framework for distant hand interaction in AR, including mapping techniques and visualizations. Our techniques are inspired by virtual reality (VR) distant hand interactions, but had to be adjusted due to the different context in AR and limited knowledge about the physical environment. We discuss different techniques for hand communication, including deictic pointing at a distance, distant drawing in AR, and distant communication through symbolic hand gestures. |

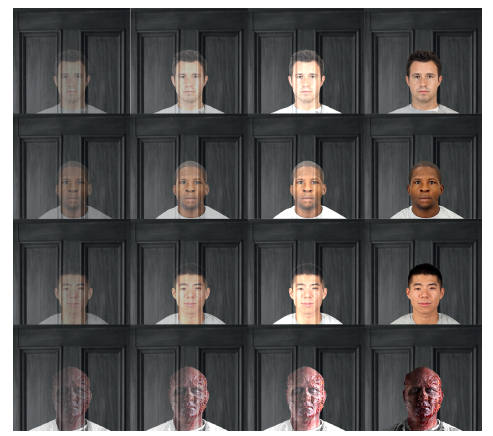

| Tabitha C. Peck; Jessica J. Good; Austin Erickson; Isaac Bynum; Gerd Bruder Effects of Transparency on Perceived Humanness: Implications for Rendering Skin Tones Using Optical See-Through Displays Journal Article In: IEEE Transactions on Visualization and Computer Graphics (TVCG), no. 01, pp. 1-11, 2022, ISSN: 1941-0506. @article{nokey,

title = {Effects of Transparency on Perceived Humanness: Implications for Rendering Skin Tones Using Optical See-Through Displays},

author = {Tabitha C. Peck; Jessica J. Good; Austin Erickson; Isaac Bynum; Gerd Bruder},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/02/AR_and_Avatar_Transparency.pdf

https://www.youtube.com/watch?v=0tUlhbxhE6U&t=59s},

doi = {10.1109/TVCG.2022.3150521},

issn = {1941-0506},

year = {2022},

date = {2022-03-15},

urldate = {2022-03-15},

journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG)},

number = {01},

pages = {1-11},

abstract = {Current optical see-through displays in the field of augmented reality are limited in their ability to display colors with low lightness in the hue, saturation, lightness (HSL) color space, causing such colors to appear transparent. This hardware limitation may add unintended bias into scenarios with virtual humans. Humans have varying skin tones including HSL colors with low lightness. When virtual humans are displayed with optical see-through devices, people with low lightness skin tones may be displayed semi-transparently while those with high lightness skin tones will be displayed more opaquely. For example, a Black avatar may appear semi-transparent in the same scene as a White avatar who will appear more opaque. We present an exploratory user study (N = 160) investigating whether differing opacity levels result in dehumanizing avatar and human faces. Results support that dehumanization occurs as opacity decreases. This suggests that in similar lighting, low lightness skin tones (e.g., Black faces) will be viewed as less human than high lightness skin tones (e.g., White faces). Additionally, the perceived emotionality of virtual human faces also predicts perceived humanness. Angry faces were seen overall as less human, and at lower opacity levels happy faces were seen as more human. Our results suggest that additional research is needed to understand the effects and interactions of emotionality and opacity on dehumanization. Further, we provide evidence that unintentional racial bias may be added when developing for optical see-through devices using virtual humans. We highlight the potential bias and discuss implications and directions for future research.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Current optical see-through displays in the field of augmented reality are limited in their ability to display colors with low lightness in the hue, saturation, lightness (HSL) color space, causing such colors to appear transparent. This hardware limitation may add unintended bias into scenarios with virtual humans. Humans have varying skin tones including HSL colors with low lightness. When virtual humans are displayed with optical see-through devices, people with low lightness skin tones may be displayed semi-transparently while those with high lightness skin tones will be displayed more opaquely. For example, a Black avatar may appear semi-transparent in the same scene as a White avatar who will appear more opaque. We present an exploratory user study (N = 160) investigating whether differing opacity levels result in dehumanizing avatar and human faces. Results support that dehumanization occurs as opacity decreases. This suggests that in similar lighting, low lightness skin tones (e.g., Black faces) will be viewed as less human than high lightness skin tones (e.g., White faces). Additionally, the perceived emotionality of virtual human faces also predicts perceived humanness. Angry faces were seen overall as less human, and at lower opacity levels happy faces were seen as more human. Our results suggest that additional research is needed to understand the effects and interactions of emotionality and opacity on dehumanization. Further, we provide evidence that unintentional racial bias may be added when developing for optical see-through devices using virtual humans. We highlight the potential bias and discuss implications and directions for future research. |

| Isaac Bynum; Jessica J. Good; Gerd Bruder; Austin Erickson; Tabitha C. Peck The Effects of Transparency on Dehumanization of Black Avatars in Augmented Reality Proceedings Article In: Proceedings of the Annual Conference of the Society for Personality and Social Psychology, Society for Personality and Social Psychology San Francisco, CA, 2022. @inproceedings{Bynum2022,

title = {The Effects of Transparency on Dehumanization of Black Avatars in Augmented Reality},

author = {Isaac Bynum and Jessica J. Good and Gerd Bruder and Austin Erickson and Tabitha C. Peck},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/07/spspPoster.pdf},

year = {2022},

date = {2022-02-16},

urldate = {2022-02-16},

booktitle = {Proceedings of the Annual Conference of the Society for Personality and Social Psychology},

address = {San Francisco, CA},

organization = {Society for Personality and Social Psychology},

series = {Annual Conference of the Society for Personality and Social Psychology},

howpublished = {Poster at Annual Conference of the Society for Personality and Social Psychology 2022},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2021

|

| Connor D. Flick; Courtney J. Harris; Nikolas T. Yonkers; Nahal Norouzi; Austin Erickson; Zubin Choudhary; Matt Gottsacker; Gerd Bruder; Gregory F. Welch Trade-offs in Augmented Reality User Interfaces for Controlling a Smart Environment Proceedings Article In: In Symposium on Spatial User Interaction (SUI '21), pp. 1-11, Association for Computing Machinery, New York, NY, USA, 2021. @inproceedings{Flick2021,

title = {Trade-offs in Augmented Reality User Interfaces for Controlling a Smart Environment},

author = {Connor D. Flick and Courtney J. Harris and Nikolas T. Yonkers and Nahal Norouzi and Austin Erickson and Zubin Choudhary and Matt Gottsacker and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/09/SUI2021_REU_Paper.pdf},

year = {2021},

date = {2021-11-09},

urldate = {2021-11-09},

booktitle = {In Symposium on Spatial User Interaction (SUI '21)},

pages = {1-11},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {Smart devices and Internet of Things (IoT) technologies are replacing or being incorporated into traditional devices at a growing pace. The use of digital interfaces to interact with these devices has become a common occurrence in homes, work spaces, and various industries around the world. The most common interfaces for these connected devices focus on mobile apps or voice control via intelligent virtual assistants. However, with augmented reality (AR) becoming more popular and accessible among consumers, there are new opportunities for spatial user interfaces to seamlessly bridge the gap between digital and physical affordances.

In this paper, we present a human-subject study evaluating and comparing four user interfaces for smart connected environments: gaze input, hand gestures, voice input, and a mobile app. We assessed participants’ user experience, usability, task load, completion time, and preferences. Our results show multiple trade-offs between these interfaces across these measures. In particular, we found that gaze input shows great potential for future use cases, while both gaze input and hand gestures suffer from limited familiarity among users, compared to voice input and mobile apps.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Smart devices and Internet of Things (IoT) technologies are replacing or being incorporated into traditional devices at a growing pace. The use of digital interfaces to interact with these devices has become a common occurrence in homes, work spaces, and various industries around the world. The most common interfaces for these connected devices focus on mobile apps or voice control via intelligent virtual assistants. However, with augmented reality (AR) becoming more popular and accessible among consumers, there are new opportunities for spatial user interfaces to seamlessly bridge the gap between digital and physical affordances.

In this paper, we present a human-subject study evaluating and comparing four user interfaces for smart connected environments: gaze input, hand gestures, voice input, and a mobile app. We assessed participants’ user experience, usability, task load, completion time, and preferences. Our results show multiple trade-offs between these interfaces across these measures. In particular, we found that gaze input shows great potential for future use cases, while both gaze input and hand gestures suffer from limited familiarity among users, compared to voice input and mobile apps. |

| Nahal Norouzi; Gerd Bruder; Austin Erickson; Kangsoo Kim; Jeremy Bailenson; Pamela J. Wisniewski; Charles E. Hughes; and Gregory F. Welch Virtual Animals as Diegetic Attention Guidance Mechanisms in 360-Degree Experiences Journal Article In: IEEE Transactions on Visualization and Computer Graphics (TVCG) Special Issue on ISMAR 2021, pp. 11, 2021. @article{Norouzi2021,

title = {Virtual Animals as Diegetic Attention Guidance Mechanisms in 360-Degree Experiences},

author = {Nahal Norouzi and Gerd Bruder and Austin Erickson and Kangsoo Kim and Jeremy Bailenson and Pamela J. Wisniewski and Charles E. Hughes and and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/08/IEEE_ISMAR_TVCG_2021.pdf},

year = {2021},

date = {2021-10-15},

urldate = {2021-10-15},

journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG) Special Issue on ISMAR 2021},

pages = {11},

abstract = {360-degree experiences such as cinematic virtual reality and 360-degree videos are becoming increasingly popular. In most examples, viewers can freely explore the content by changing their orientation. However, in some cases, this increased freedom may lead to viewers missing important events within such experiences. Thus, a recent research thrust has focused on studying mechanisms for guiding viewers’ attention while maintaining their sense of presence and fostering a positive user experience. One approach is the utilization of diegetic mechanisms, characterized by an internal consistency with respect to the narrative and the environment, for attention guidance. While such mechanisms are highly attractive, their uses and potential implementations are still not well understood. Additionally, acknowledging the user in 360-degree experiences has been linked to a higher sense of presence and connection. However, less is known when acknowledging behaviors are carried out by attention guiding mechanisms. To close these gaps, we conducted a within-subjects user study with five conditions of no guide and virtual arrows, birds, dogs, and dogs that acknowledge the user and the environment. Through our mixed-methods analysis, we found that the diegetic virtual animals resulted in a more positive user experience, all of which were at least as effective as the non-diegetic arrow in guiding users towards target events. The acknowledging dog received the most positive responses from our participants in terms of preference and user experience and significantly improved their sense of presence compared to the non-diegetic arrow. Lastly, three themes emerged from a qualitative analysis of our participants’ feedback, indicating the importance of the guide’s blending in, its acknowledging behavior, and participants’ positive associations as the main factors for our participants’ preferences.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

360-degree experiences such as cinematic virtual reality and 360-degree videos are becoming increasingly popular. In most examples, viewers can freely explore the content by changing their orientation. However, in some cases, this increased freedom may lead to viewers missing important events within such experiences. Thus, a recent research thrust has focused on studying mechanisms for guiding viewers’ attention while maintaining their sense of presence and fostering a positive user experience. One approach is the utilization of diegetic mechanisms, characterized by an internal consistency with respect to the narrative and the environment, for attention guidance. While such mechanisms are highly attractive, their uses and potential implementations are still not well understood. Additionally, acknowledging the user in 360-degree experiences has been linked to a higher sense of presence and connection. However, less is known when acknowledging behaviors are carried out by attention guiding mechanisms. To close these gaps, we conducted a within-subjects user study with five conditions of no guide and virtual arrows, birds, dogs, and dogs that acknowledge the user and the environment. Through our mixed-methods analysis, we found that the diegetic virtual animals resulted in a more positive user experience, all of which were at least as effective as the non-diegetic arrow in guiding users towards target events. The acknowledging dog received the most positive responses from our participants in terms of preference and user experience and significantly improved their sense of presence compared to the non-diegetic arrow. Lastly, three themes emerged from a qualitative analysis of our participants’ feedback, indicating the importance of the guide’s blending in, its acknowledging behavior, and participants’ positive associations as the main factors for our participants’ preferences. |

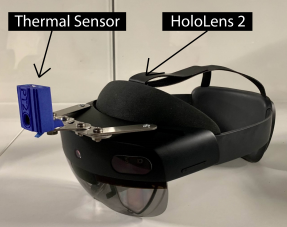

| Austin Erickson; Dirk Reiners; Gerd Bruder; Greg Welch Augmenting Human Perception: Mediation of Extrasensory Signals in Head-Worn Augmented Reality Proceedings Article In: Proceedings of the 2021 International Symposium on Mixed and Augmented Reality, pp. 373-377, IEEE IEEE, 2021. @inproceedings{Erickson2021b,

title = {Augmenting Human Perception: Mediation of Extrasensory Signals in Head-Worn Augmented Reality},

author = {Austin Erickson and Dirk Reiners and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/08/ismar21d-sub1093-i6.pdf},

doi = {10.1109/ISMAR-Adjunct54149.2021.00085},

year = {2021},

date = {2021-10-04},

urldate = {2021-10-04},

booktitle = {Proceedings of the 2021 International Symposium on Mixed and Augmented Reality},

pages = {373-377},

publisher = {IEEE},

organization = {IEEE},

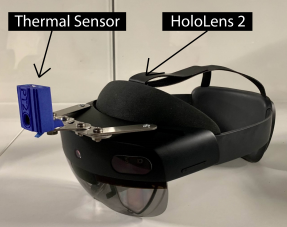

series = {ISMAR 2021},

abstract = {Mediated perception systems are systems in which sensory signals from the user's environment are mediated to the user's sensory channels. This type of system has great potential for enhancing the perception of the user via augmenting and/or diminishing incoming sensory signals according to the user's context, preferences, and perceptual capability. They also allow for extending the perception of the user to enable them to sense signals typically imperceivable to human senses, such as regions of the electromagnetic spectrum beyond visible light. However, in order to effectively mediate extrasensory data to the user, we need to understand when and how such data should be presented to them.

In this paper, we present a prototype mediated perception system that maps extrasensory spatial data into visible light displayed within an augmented reality (AR) optical see-through head-mounted display (OST-HMD). Although the system is generalized such that it could support any spatial sensor data with minor modification, we chose to test the system using thermal infrared sensors. This system improves upon previous extended perception augmented reality prototypes in that it is capable of projecting egocentric sensor data in real time onto a 3D mesh generated by the OST-HMD that is representative of the user's environment. We present the lessons learned through iterative improvements to the system, as well as a performance analysis of the system and recommendations for future work.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Mediated perception systems are systems in which sensory signals from the user's environment are mediated to the user's sensory channels. This type of system has great potential for enhancing the perception of the user via augmenting and/or diminishing incoming sensory signals according to the user's context, preferences, and perceptual capability. They also allow for extending the perception of the user to enable them to sense signals typically imperceivable to human senses, such as regions of the electromagnetic spectrum beyond visible light. However, in order to effectively mediate extrasensory data to the user, we need to understand when and how such data should be presented to them.

In this paper, we present a prototype mediated perception system that maps extrasensory spatial data into visible light displayed within an augmented reality (AR) optical see-through head-mounted display (OST-HMD). Although the system is generalized such that it could support any spatial sensor data with minor modification, we chose to test the system using thermal infrared sensors. This system improves upon previous extended perception augmented reality prototypes in that it is capable of projecting egocentric sensor data in real time onto a 3D mesh generated by the OST-HMD that is representative of the user's environment. We present the lessons learned through iterative improvements to the system, as well as a performance analysis of the system and recommendations for future work. |

| Austin Erickson; Kangsoo Kim; Gerd Bruder; Gregory F. Welch Beyond Visible Light: User and Societal Impacts of Egocentric Multispectral Vision Proceedings Article In: Chen, J. Y. C.; Fragomeni, G. (Ed.): In Proceedings of the 2021 International Conference on Virtual, Augmented, and Mixed Reality, pp. 19, Springer Nature, Washington D.C, 2021. @inproceedings{Erickson2020fb,

title = {Beyond Visible Light: User and Societal Impacts of Egocentric Multispectral Vision},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

editor = {J. Y. C. Chen and G. Fragomeni},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/03/VAMR21-MSV.pdf},

doi = {10.1007/978-3-030-77599-5_23},

year = {2021},

date = {2021-07-24},

booktitle = {In Proceedings of the 2021 International Conference on Virtual, Augmented, and Mixed Reality},

number = {23},

pages = {19},

publisher = {Springer Nature},

address = {Washington D.C},

abstract = {Multi-spectral imagery is becoming popular for a wide range of application fields from agriculture to healthcare, mainly stemming from advances in consumer sensor and display technologies. Modern augmented reality (AR) head-mounted displays already combine a multitude of sensors and are well-suited for integration with additional sensors, such as cameras capturing information from different parts of the electromagnetic spectrum. In this paper, we describe a novel multi-spectral vision prototype based on the Microsoft HoloLens 1, which we extended with two thermal infrared (IR) cameras and two ultraviolet (UV) cameras. We performed an exploratory experiment, in which participants wore the prototype for an extended period of time and assessed its potential to augment our daily activities. Our report covers a discussion of qualitative insights on personal and societal uses of such novel multi-spectral vision systems, including their applicability for use during the COVID-19 pandemic},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Multi-spectral imagery is becoming popular for a wide range of application fields from agriculture to healthcare, mainly stemming from advances in consumer sensor and display technologies. Modern augmented reality (AR) head-mounted displays already combine a multitude of sensors and are well-suited for integration with additional sensors, such as cameras capturing information from different parts of the electromagnetic spectrum. In this paper, we describe a novel multi-spectral vision prototype based on the Microsoft HoloLens 1, which we extended with two thermal infrared (IR) cameras and two ultraviolet (UV) cameras. We performed an exploratory experiment, in which participants wore the prototype for an extended period of time and assessed its potential to augment our daily activities. Our report covers a discussion of qualitative insights on personal and societal uses of such novel multi-spectral vision systems, including their applicability for use during the COVID-19 pandemic |

| Austin Erickson; Kangsoo Kim; Alexis Lambert; Gerd Bruder; Michael P. Browne; Greg Welch An Extended Analysis on the Benefits of Dark Mode User Interfaces in Optical See-Through Head-Mounted Displays Journal Article In: ACM Transactions on Applied Perception, vol. 18, no. 3, pp. 22, 2021. @article{Erickson2021,

title = {An Extended Analysis on the Benefits of Dark Mode User Interfaces in Optical See-Through Head-Mounted Displays},

author = {Austin Erickson and Kangsoo Kim and Alexis Lambert and Gerd Bruder and Michael P. Browne and Greg Welch},

editor = {Victoria Interrante and Martin Giese},

url = {https://sreal.ucf.edu/wp-content/uploads/2021/03/ACM_TAP2020_DarkMode1_5.pdf},

doi = {https://doi.org/10.1145/3456874},

year = {2021},

date = {2021-05-20},

journal = {ACM Transactions on Applied Perception},

volume = {18},

number = {3},

pages = {22},

abstract = {Light-on-dark color schemes, so-called “Dark Mode,” are becoming more and more popular over a wide range of display technologies and application fields. Many people who have to look at computer screens for hours at a time, such as computer programmers and computer graphics artists, indicate a preference for switching colors on a computer screen from dark text on a light background to light text on a dark background due to perceived advantages related to visual comfort and acuity, specifically when working in low-light environments.

In this paper, we investigate the effects of dark mode color schemes in the field of optical see-through head-mounted displays (OST-HMDs), where the characteristic “additive” light model implies that bright graphics are visible but dark graphics are transparent. We describe two human-subject studies in which we evaluated a normal and inverted color mode in front of different physical backgrounds and different lighting conditions. Our results indicate that dark mode graphics displayed on the HoloLens have significant benefits for visual acuity, and usability, while user preferences depend largely on the lighting in the physical environment. We discuss the implications of these effects on user interfaces and applications.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Light-on-dark color schemes, so-called “Dark Mode,” are becoming more and more popular over a wide range of display technologies and application fields. Many people who have to look at computer screens for hours at a time, such as computer programmers and computer graphics artists, indicate a preference for switching colors on a computer screen from dark text on a light background to light text on a dark background due to perceived advantages related to visual comfort and acuity, specifically when working in low-light environments.

In this paper, we investigate the effects of dark mode color schemes in the field of optical see-through head-mounted displays (OST-HMDs), where the characteristic “additive” light model implies that bright graphics are visible but dark graphics are transparent. We describe two human-subject studies in which we evaluated a normal and inverted color mode in front of different physical backgrounds and different lighting conditions. Our results indicate that dark mode graphics displayed on the HoloLens have significant benefits for visual acuity, and usability, while user preferences depend largely on the lighting in the physical environment. We discuss the implications of these effects on user interfaces and applications. |

2020

|

![[Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays](https://sreal.ucf.edu/wp-content/uploads/2020/12/OST-mockup-acuity-e1607360140366-150x150.png) | Austin Erickson; Kangsoo Kim; Gerd Bruder; Gregory F. Welch [Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays Proceedings Article In: Kulik, Alexander; Sra, Misha; Kim, Kangsoo; Seo, Byung-Kuk (Ed.): Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 23-24, The Eurographics Association The Eurographics Association, 2020, ISBN: 978-3-03868-112-0. @inproceedings{Erickson2020f,

title = {[Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays},

author = {Austin Erickson and Kangsoo Kim and Gerd Bruder and Gregory F. Welch},

editor = {Kulik, Alexander and Sra, Misha and Kim, Kangsoo and Seo, Byung-Kuk},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/DarkmodeDEMO_ICAT_EGVE_2020-2.pdf

https://www.youtube.com/watch?v=VJQTaYyofCw&t=61s

},

doi = {https://doi.org/10.2312/egve.20201280},

isbn = {978-3-03868-112-0},

year = {2020},

date = {2020-12-02},

booktitle = {Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {23-24},

publisher = {The Eurographics Association},

organization = {The Eurographics Association},

abstract = {In the fields of augmented reality (AR) and virtual reality (VR), many applications involve user interfaces (UIs) to display various types of information to users. Such UIs are an important component that influences user experience and human factors in AR/VR because the users are directly facing and interacting with them to absorb the visualized information and manipulate the content. While consumer’s interests in different forms of near-eye displays, such as AR/VR head-mounted displays (HMDs), are increasing, research on the design standard for AR/VR UIs and human factors becomes more and more interesting and timely important. Although UI configurations, such as dark mode and light mode, have increased in popularity on other display types over the last several years, they have yet to make their way into AR/VR devices as built in features. This demo showcases several use cases of dark mode and light mode UIs on AR/VR HMDs, and provides general guidelines for when they should be used to provide perceptual benefits to the user},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

In the fields of augmented reality (AR) and virtual reality (VR), many applications involve user interfaces (UIs) to display various types of information to users. Such UIs are an important component that influences user experience and human factors in AR/VR because the users are directly facing and interacting with them to absorb the visualized information and manipulate the content. While consumer’s interests in different forms of near-eye displays, such as AR/VR head-mounted displays (HMDs), are increasing, research on the design standard for AR/VR UIs and human factors becomes more and more interesting and timely important. Although UI configurations, such as dark mode and light mode, have increased in popularity on other display types over the last several years, they have yet to make their way into AR/VR devices as built in features. This demo showcases several use cases of dark mode and light mode UIs on AR/VR HMDs, and provides general guidelines for when they should be used to provide perceptual benefits to the user |

| Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Austin Erickson; Zubin Choudhary; Yifan Li; Greg Welch A Systematic Literature Review of Embodied Augmented Reality Agents in Head-Mounted Display Environments Proceedings Article In: In Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments, pp. 11, 2020. @inproceedings{Norouzi2020c,

title = {A Systematic Literature Review of Embodied Augmented Reality Agents in Head-Mounted Display Environments},

author = {Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Austin Erickson and Zubin Choudhary and Yifan Li and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/11/IVC_ICAT_EGVE2020.pdf

https://www.youtube.com/watch?v=IsX5q86pH4M},

year = {2020},

date = {2020-12-02},

urldate = {2020-12-02},

booktitle = {In Proceedings of the International Conference on Artificial Reality and Telexistence & Eurographics Symposium on Virtual Environments},

pages = {11},

abstract = {Embodied agents, i.e., computer-controlled characters, have proven useful for various applications across a multitude of display setups and modalities. While most traditional work focused on embodied agents presented on a screen or projector, and a growing number of works are focusing on agents in virtual reality, a comparatively small number of publications looked at such agents in augmented reality (AR). Such AR agents, specifically when using see-through head-mounted displays (HMDs)as the display medium, show multiple critical differences to other forms of agents, including their appearances, behaviors, and physical-virtual interactivity. Due to the unique challenges in this specific field, and due to the comparatively limited attention by the research community so far, we believe that it is important to map the field to understand the current trends, challenges, and future research. In this paper, we present a systematic review of the research performed on interactive, embodied AR agents using HMDs. Starting with 1261 broadly related papers, we conducted an in-depth review of 50 directly related papers from2000 to 2020, focusing on papers that reported on user studies aiming to improve our understanding of interactive agents in AR HMD environments or their utilization in specific applications. We identified common research and application areas of AR agents through a structured iterative process, present research trends, and gaps, and share insights on future directions.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Embodied agents, i.e., computer-controlled characters, have proven useful for various applications across a multitude of display setups and modalities. While most traditional work focused on embodied agents presented on a screen or projector, and a growing number of works are focusing on agents in virtual reality, a comparatively small number of publications looked at such agents in augmented reality (AR). Such AR agents, specifically when using see-through head-mounted displays (HMDs)as the display medium, show multiple critical differences to other forms of agents, including their appearances, behaviors, and physical-virtual interactivity. Due to the unique challenges in this specific field, and due to the comparatively limited attention by the research community so far, we believe that it is important to map the field to understand the current trends, challenges, and future research. In this paper, we present a systematic review of the research performed on interactive, embodied AR agents using HMDs. Starting with 1261 broadly related papers, we conducted an in-depth review of 50 directly related papers from2000 to 2020, focusing on papers that reported on user studies aiming to improve our understanding of interactive agents in AR HMD environments or their utilization in specific applications. We identified common research and application areas of AR agents through a structured iterative process, present research trends, and gaps, and share insights on future directions. |

![[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays](https://sreal.ucf.edu/wp-content/uploads/2022/10/phonefigure2-e1664926618216-150x150.png)

![[Demo] Dark/Light Mode Adaptation for Graphical User Interfaces on Near-Eye Displays](https://sreal.ucf.edu/wp-content/uploads/2020/12/OST-mockup-acuity-e1607360140366-150x150.png)