Dr. Gregory F. Welch – Patents

2024

Greg Welch; Gerd Bruder; Salam Daher; Jason Hochreiter; Mindi Anderson; Laura Gonzalez; Desiree A. Diaz

Physical-Virtual Patient System Patent

US 12,008,917, 2024.

@patent{Welch2024ab,

title = {Physical-Virtual Patient System},

author = {Greg Welch and Gerd Bruder and Salam Daher and Jason Hochreiter and Mindi Anderson and Laura Gonzalez and Desiree A. Diaz},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/06/US12008917.pdf

https://ppubs.uspto.gov/dirsearch-public/print/downloadPdf/12008917},

year = {2024},

date = {2024-06-11},

urldate = {2024-06-11},

number = {US 12,008,917},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

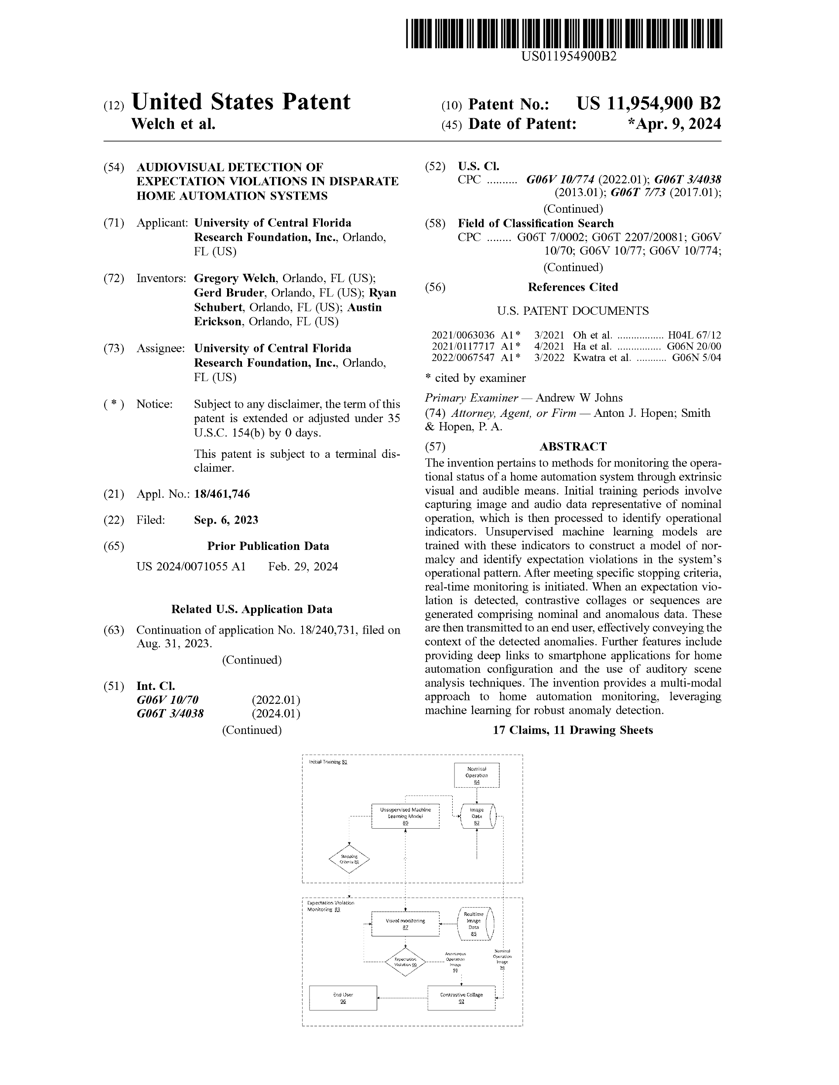

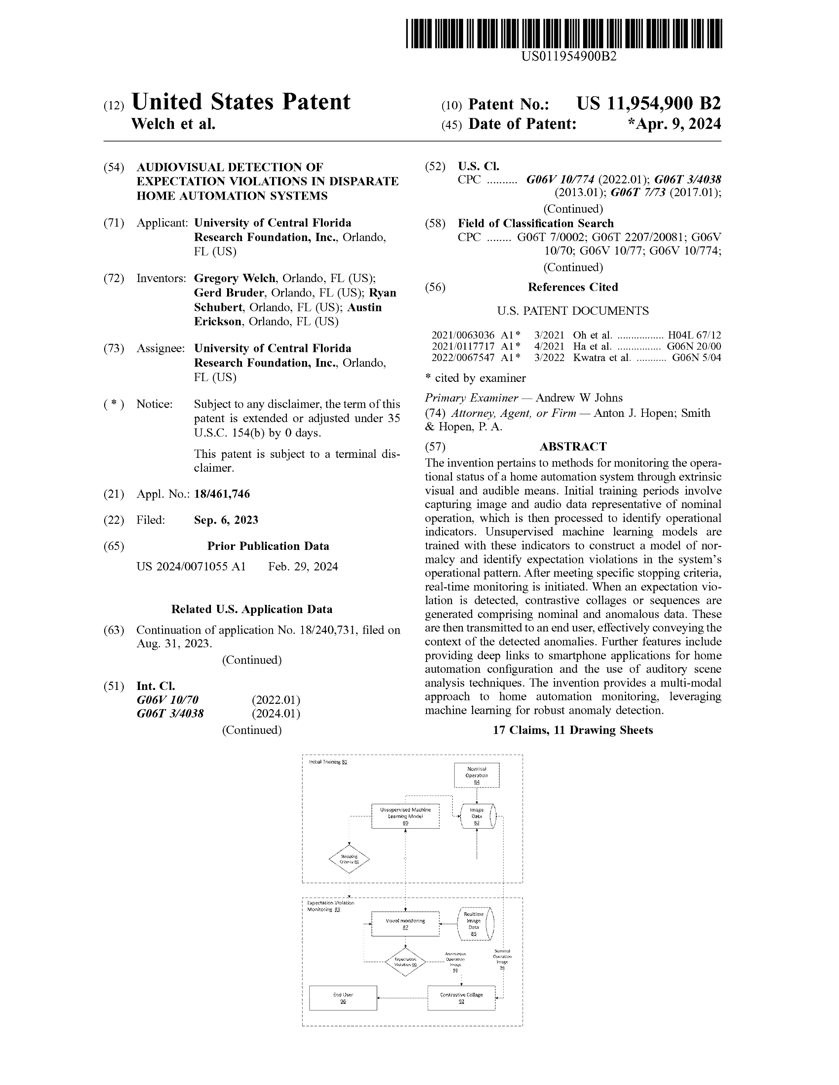

Greg Welch; Gerd Bruder; Ryan Schubert; Austin Erickson

Audiovisual Detection of Expectation Violations in Disparate Home Automation Systems Patent

US 11,954,900, 2024.

@patent{Welch2024aa,

title = {Audiovisual Detection of Expectation Violations in Disparate Home Automation Systems},

author = {Greg Welch and Gerd Bruder and Ryan Schubert and Austin Erickson},

url = {https://sreal.ucf.edu/wp-content/uploads/2024/04/US11954900.pdf

https://ppubs.uspto.gov/dirsearch-public/print/downloadPdf/11954900},

year = {2024},

date = {2024-04-09},

urldate = {2024-04-09},

number = {US 11,954,900},

abstract = {The invention pertains to methods for monitoring the operational status of a home automation system through extrinsic visual and audible means. Initial training periods involve capturing image and audio data representative of nominal operation, which is then processed to identify operational indicators. Unsupervised machine learning models are trained with these indicators to construct a model of normalcy and identify expectation violations in the system's operational pattern. After meeting specific stopping criteria, real-time monitoring is initiated. When an expectation violation is detected, contrastive collages or sequences are generated comprising nominal and anomalous data. These are then transmitted to an end user, effectively conveying the context of the detected anomalies. Further features include providing deep links to smartphone applications for home automation configuration and the use of auditory scene analysis techniques. The invention provides a multi-modal approach to home automation monitoring, leveraging machine learning for robust anomaly detection.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2023

Gerd Bruder; Greg Welch; Kangsoo Kim; Zubin Choudhary

Spatial positioning of targeted object magnification Patent

US 11,798,127, 2023.

@patent{Bruder2023aa,

title = {Spatial positioning of targeted object magnification},

author = {Gerd Bruder and Greg Welch and Kangsoo Kim and Zubin Choudhary},

url = {https://image-ppubs.uspto.gov/dirsearch-public/print/downloadPdf/11798127

https://sreal.ucf.edu/wp-content/uploads/2023/10/11798127.pdf},

year = {2023},

date = {2023-10-24},

urldate = {2023-10-24},

number = {US 11,798,127},

abstract = {One or more cameras capture objects at a higher resolution than the human eye can perceive. Objects are segmented from the background of the image and scaled to human perceptible size. The scaled-up objects are superimposed over the unscaled background. This is presented to a user via a display whereby the process selectively amplifies the size of the objects' spatially registered retinal projection while maintaining a natural (unmodified) view in the remainder of the visual field.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gregory Welch; Matthew Gottsacker; Nahal Norouzi; Gerd Bruder

Intelligent Digital Interruption Management Patent

US 11,729,448, 2023.

@patent{Welch2022ab,

title = {Intelligent Digital Interruption Management},

author = {Gregory Welch and Matthew Gottsacker and Nahal Norouzi and Gerd Bruder},

url = {https://image-ppubs.uspto.gov/dirsearch-public/print/downloadPdf/11729448

https://sreal.ucf.edu/wp-content/uploads/2023/08/US11729448.pdf},

year = {2023},

date = {2023-08-15},

urldate = {2023-08-15},

number = {US 11,729,448},

abstract = {The present invention is a system to manage interrupt notifications on an operating system based on the characteristics of content in which an end user is currently immersed or engaged. For example, relatively high bitrate video throughput is indicative of corresponding high information depth and more action occurring in the scene. For periods of high information depth, interrupt notifications are deferred until the information depth falls into a relative trough. Additional embodiments of the invention process scene transitions, technical cues, dialog and lyrics to release queued interrupt notification at optimal times. A vamping process is also provided when interrupt notification are released to keep the end user prescient to the background application in which they were engaged prior to the interrupt notification coming into focus.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

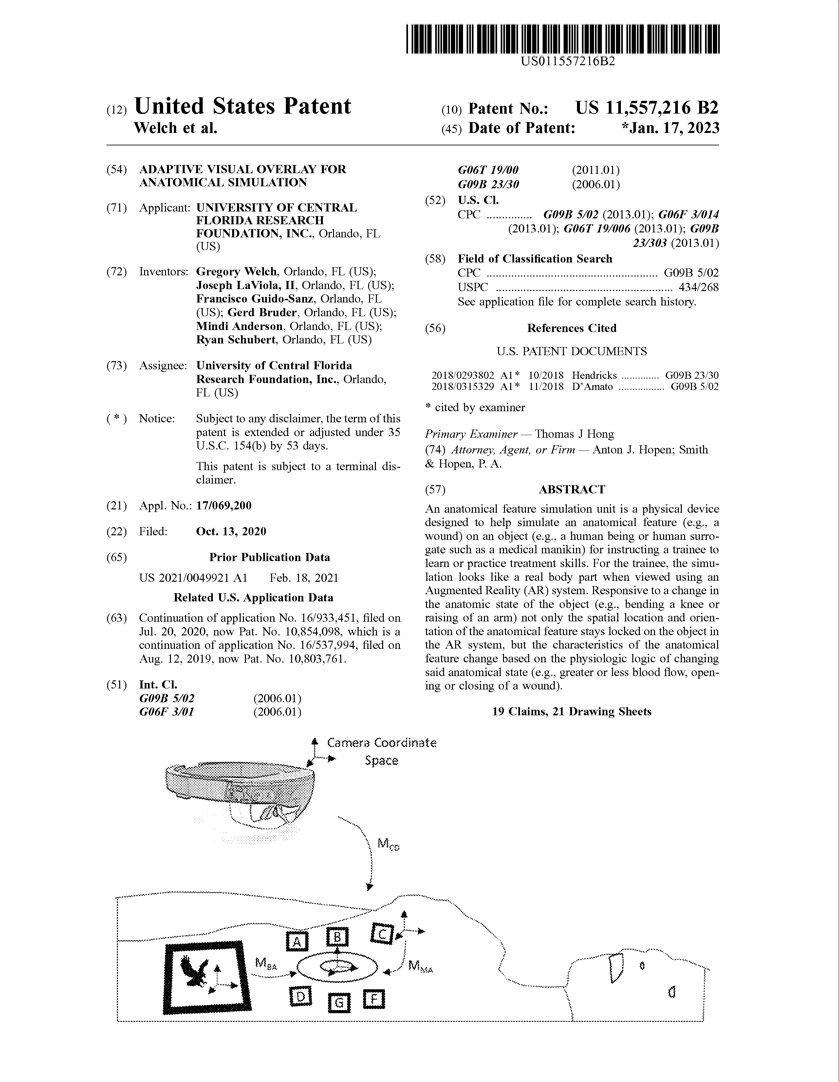

Greg Welch; Joseph LaViola; Francisco Guido-Sanz; Gerd Bruder; Mindi Anderson; Ryan Schubert

Adaptive visual overlay for anatomical simulation Patent

US 11,557,216 B2, 2023.

@patent{Welch2023av,

title = {Adaptive visual overlay for anatomical simulation},

author = {Greg Welch and Joseph LaViola and Francisco Guido-Sanz and Gerd Bruder and Mindi Anderson and Ryan Schubert},

url = {https://image-ppubs.uspto.gov/dirsearch-public/print/downloadPdf/11557216

https://sreal.ucf.edu/wp-content/uploads/2023/11/11557216.pdf},

year = {2023},

date = {2023-01-17},

urldate = {2023-01-17},

number = {US 11,557,216 B2},

abstract = {An anatomical feature simulation unit is a physical device designed to help simulate an anatomical feature (e.g., a wound) on an object (e.g., a human being or human surrogate such as a medical manikin) for instructing a trainee to learn or practice treatment skills. For the trainee, the simulation looks like a real body part when viewed using an Augmented Reality (AR) system. Responsive to a change in the anatomic state of the object (e.g., bending a knee or raising of an arm) not only the spatial location and orientation of the anatomical feature stays locked on the object in the AR system, but the characteristics of the anatomical feature change based on the physiologic logic of changing said anatomical state (e.g., greater or less blood flow, opening or closing of a wound).},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

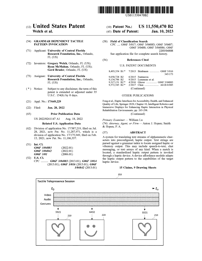

Greg Welch; Gerd Bruder; Ryan McMahan

Grammar Dependent Tactile Pattern Invocation Patent

US 11,550,470, 2023.

@patent{nokey,

title = {Grammar Dependent Tactile Pattern Invocation},

author = {Greg Welch and Gerd Bruder and Ryan McMahan},

url = {https://ppubs.uspto.gov/pubwebapp/external.html?q=11550470

https://sreal.ucf.edu/wp-content/uploads/2023/01/US11550470.pdf},

year = {2023},

date = {2023-01-10},

urldate = {2023-01-10},

number = {US 11,550,470},

abstract = {A system for translating text streams of alphanumeric characters into preconfigured, haptic output. Text strings are parsed against a grammar index to locate assigned haptic or vibratory output. This may include speech-to-text, chat messaging, or text arrays of any kind. When a match is located, a standardized haptic output pattern is invoked through a haptic device. A device affordance module adapts the haptic output pattern to the capabilities of the target haptic device.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2022

Greg Welch; Gerd Bruder

Medical Monitoring Virtual Human with Situational Awareness Patent

US 11,535,261, 2022.

@patent{Welch2022ac,

title = {Medical Monitoring Virtual Human with Situational Awareness},

author = {Greg Welch and Gerd Bruder},

url = {https://image-ppubs.uspto.gov/dirsearch-public/print/downloadPdf/11535261},

year = {2022},

date = {2022-12-17},

urldate = {2022-12-17},

number = {US 11,535,261},

abstract = {Virtual humans exhibit behaviors associated with inputs and outputs of an autonomous control system for medical monitoring of patients. To foster the awareness and trust, the virtual humans exhibit situational awareness via apparent (e.g., rendered) behaviors based on inputs such as physiological vital signs. The virtual humans also exhibit situational control via apparent behaviors associated with outputs such as direct control of devices, functions of control, actions based on high-level goals, and the optional use of virtual versions of conventional physical controls. A dynamic virtual human who continually exhibits awareness of the system state and relevant contextual circumstances, along with the ability to directly control the system, is used to reduce negative feelings associated with the system such as uncertainty, concern, stress, or anxiety on the part of real human patients.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gregory Welch; Gerd Bruder

Augmentation of Relative Pose In Co-located Devices Patent

US 11,467,399, 2022.

@patent{Welch2022aa,

title = {Augmentation of Relative Pose In Co-located Devices},

author = {Gregory Welch and Gerd Bruder},

url = {https://ppubs.uspto.gov/pubwebapp/external.html?q=11467399

https://sreal.ucf.edu/wp-content/uploads/2022/10/US11467399.pdf},

year = {2022},

date = {2022-10-11},

urldate = {2022-10-11},

number = {US 11,467,399},

abstract = {This invention relates to tracking of hand-held devices and vehicles with respect to each other, in circumstances where there are two or more users or existent objects interacting in the same share space (co-location). It extends conventional global and body-relative approaches to “cooperatively” estimate the relative poses between all useful combinations of user-worn tracked devices such as HMDs and hand-held controllers worn (or held) by multiple users. Additionally, the invention provides for tracking of vehicles such as cars and unmanned aerial vehicles.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gerd Bruder; Gregory Welch; Kangsoo Kim; Zubin Choudhary

Intelligent Object Magnification for Augmented Reality Displays Patent

US 11,410,270, 2022.

@patent{Bruder2022ph,

title = {Intelligent Object Magnification for Augmented Reality Displays},

author = {Gerd Bruder and Gregory Welch and Kangsoo Kim and Zubin Choudhary},

url = {https://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=11410270.PN.&OS=PN/11410270&RS=PN/11410270

https://sreal.ucf.edu/wp-content/uploads/2022/08/Bruder2022ph.pdf},

year = {2022},

date = {2022-08-09},

urldate = {2022-08-09},

number = {US 11,410,270},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gregory Welch; Gerd Bruder; Ryan McMahan

Visual-Tactile Virtual Telepresence Patent

US 11,287,971, 2022.

@patent{US11287971,

title = {Visual-Tactile Virtual Telepresence},

author = {Gregory Welch and Gerd Bruder and Ryan McMahan},

url = {https://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=11287971.PN.&OS=PN/11287971&RS=PN/11287971

https://sreal.ucf.edu/wp-content/uploads/2022/08/US11287971.pdf},

year = {2022},

date = {2022-03-29},

urldate = {2022-03-29},

number = {US 11,287,971},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2021

Gregory Welch; Gerd Bruder

Autonomous systems human controller simulation Patent

US 11,148,671, 2021.

@patent{Welch2021oj,

title = {Autonomous systems human controller simulation},

author = {Gregory Welch and Gerd Bruder},

url = {https://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=11148671.PN.&OS=PN/11148671&RS=PN/11148671

https://sreal.ucf.edu/wp-content/uploads/2021/10/US11148671.pdf},

year = {2021},

date = {2021-10-19},

urldate = {2021-10-19},

number = {US 11,148,671},

location = {US},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gregory Welch; Ryan P. McMahan; Gerd Bruder

Low Latency Tactile Telepresence Patent

US 11,106,357, 2021.

@patent{Welch2021bb,

title = {Low Latency Tactile Telepresence},

author = {Gregory Welch and Ryan P. McMahan and Gerd Bruder},

url = {https://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&p=1&u=/netahtml/PTO/search-bool.html&r=1&f=G&l=50&co1=AND&d=PTXT&s1=11106357&OS=11106357&RS=11106357

https://sreal.ucf.edu/wp-content/uploads/2021/09/US11106357.pdf},

year = {2021},

date = {2021-08-31},

urldate = {2021-08-31},

number = {US 11,106,357},

abstract = {A system for remote tactile telepresence wherein an array of predefined touch gestures are abstracted into cataloged values and invoked either by pattern matching, by assigned name or visual indicia. A local and remote cache of the catalog reduces latency even for complicated gestures as only a gesture identifier needs to be transmitted to a haptic output destination. Additional embodiments translate gestures to different haptic device affordances. Tactile telepresence sessions are time-coded along with audiovisual content wherein playback is heard, seen, and felt. Another embodiment associates motion capture associated with the tactile profile so that remote, haptic recipients may see renderings of objects (e.g., hands) imparting vibrotactile sensations.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gregory Welch; Gerd Bruder

Relative Pose Data Augmentation of Tracked Devices in Virtual Environments Patent

US 11,042,028 B1, 2021.

@patent{Welch2021,

title = {Relative Pose Data Augmentation of Tracked Devices in Virtual Environments},

author = {Gregory Welch and Gerd Bruder},

url = {https://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&p=1&u=%2Fnetahtml%2FPTO%2Fsearch-bool.html&r=1&f=G&l=50&co1=AND&d=PTXT&s1=11042028&OS=11042028&RS=11042028

https://sreal.ucf.edu/wp-content/uploads/2021/09/Welch2021wa-002.pdf},

year = {2021},

date = {2021-06-22},

urldate = {2021-06-22},

number = {US 11,042,028 B1},

abstract = {This invention relates to tracking of user-worn and hand-held devices with respect to each other, in circumstances where there are two or more users interacting in the same share space. It extends conventional global and body-relative approaches to "cooperatively" estimate the relative poses between all useful combinations of user-worn tracked devices such as HMDs and hand-held controllers worn (or held) by multiple users. For example, a first user's HMD estimates its absolute global pose in the coordinate frame associated with the externally-mounted devices, as well as its relative pose with respect to all other HMDs, hand-held controllers, and other user held/worn tracked devices in the environment. In this way, all HMDs (or as many as appropriate) are tracked with respect to each other, all HMDs are tracked with respect to all hand-held controllers, and all hand-held controllers are tracked with respect to all other hand-held controllers.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2020

Gregory Welch; Joseph LaViola Jr.; Francisco Guido-Sanz; Gerd Bruder; Mindi Anderson; Ryan Schubert

Adaptive Visual Overlay Wound Simulation Patent

US 10,854,098 B1, 2020.

@patent{Welch2020c,

title = {Adaptive Visual Overlay Wound Simulation},

author = {Gregory Welch and Joseph LaViola Jr. and Francisco Guido-Sanz and Gerd Bruder and Mindi Anderson and Ryan Schubert},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/12/US10854098.pdf

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=/netahtml/PTO/srchnum.htm&r=1&f=G&l=50&s1=10,854,098},

year = {2020},

date = {2020-12-01},

number = {US 10,854,098 B1},

abstract = {A wound simulation unit is a physical device designed to help simulate a wound on an object (e.g., a human being or human surrogate such as a medical manikin) for instructing a trainee to learn or practice wound-related treatment skills. For the trainee, the simulation looks like a real wound when viewed using an Augmented Reality (AR) system. Responsive to a change in the anatomic state of the object (e.g., bending a knee or raising of an arm) not only the spatial location and orientation of the wound stays locked on the object in the AR system, but the characteristics of the wound change based on the physiologic logic of changing said anatomical state (e.g., greater or less blood flow, opening or closing of the wound).},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gregory Welch; Joseph LaViola Jr.; Francisco Guido-Sanz; Gerd Bruder; Mindi Anderson; Ryan Schubert

Multisensory Wound Simulation Patent

US 10,803,761 B2, 2020.

@patent{Welch2020b,

title = {Multisensory Wound Simulation},

author = {Gregory Welch and Joseph LaViola Jr. and Francisco Guido-Sanz and Gerd Bruder and Mindi Anderson and Ryan Schubert},

url = {http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=/netahtml/PTO/srchnum.htm&r=1&f=G&l=50&s1=10803761.PN.&OS=PN/10803761&RS=PN/10803761

https://sreal.ucf.edu/wp-content/uploads/2020/10/welch2020b.pdf},

year = {2020},

date = {2020-10-13},

number = {US 10,803,761 B2},

abstract = {A Tactile-Visual Wound (TVW) simulation unit is a physical device designed to help simulate a wound on a human being or human surrogate (e.g., a medical manikin) for instructing a trainee to learn or practice wound-related treatment skills. For the trainee, the TVW would feel (to the touch) like a real wound, look like a real wound when viewed using an Augmented Reality (AR) system, and appear to behave like a real wound when manipulated.},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2019

Gregory Welch; Karen Aroian; Steven Talbert; Kelly Allred; Patricia Weinstein; Arjun Nagendran; Remo Pillat

Physical-Virtual Patient Bed System Patent

US10410541B2, 2019, (Filed: 2017-06-02).

@patent{US10410541,

title = {Physical-Virtual Patient Bed System},

author = {Gregory Welch and Karen Aroian and Steven Talbert and Kelly Allred and Patricia Weinstein and Arjun Nagendran and Remo Pillat},

url = {https://patents.google.com/patent/US10410541B2/en?oq=10%2c410%2c541

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&p=1&u=%2Fnetahtml%2FPTO%2Fsearch-bool.html&r=1&f=G&l=50&co1=AND&d=PTXT&s1=10410541&OS=10410541&RS=10410541

https://sreal.ucf.edu/wp-content/uploads/2022/08/US10410541.pdf},

year = {2019},

date = {2019-09-10},

urldate = {2019-09-10},

number = {US10410541B2},

location = {US},

note = {Filed: 2017-06-02},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Gregory Welch; Arjun Nagendran; Mary Lou Sole; Laura Gonzalez

Physical-Virtual Patient Bed System Patent

US10380921B2, 2019, (Filed: 2015-07-01).

@patent{Welch2019c,

title = {Physical-Virtual Patient Bed System},

author = {Gregory Welch and Arjun Nagendran and Mary Lou Sole and Laura Gonzalez},

url = {https://patents.google.com/patent/US10380921B2/en?q=Physical-Virtual&q=Patient&q=Bed&q=System&oq=Physical-Virtual+Patient+Bed+System

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=10380921.PN.&OS=PN/10380921&RS=PN/10380921

https://sreal.ucf.edu/wp-content/uploads/2022/09/US10380921.pdf},

year = {2019},

date = {2019-08-13},

urldate = {2019-08-13},

number = {US10380921B2},

location = {3100 Technology Parkway},

abstract = {A patient simulation system for healthcare training is provided. The system includes one or more interchangeable shells comprising a physical anatomical model of at least a portion of a patient's body, the shell adapted to be illuminated from behind to provide one or more dynamic images viewable on the outer surface of the shells; a support system adapted to receive the shells via a mounting system, wherein the system comprises one or more image units adapted to render the one or more dynamic images viewable on the outer surface of the shells; one or more interface devices located about the patient shells to receive input and provide output; and one or more computing units in communication with the image units and interface devices, the computing units adapted to provide an interactive simulation for healthcare training.},

note = {Filed: 2015-07-01},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Henry Fuchs; Mingsong Dou; Gregory Welch; Jan-Michael Frahm

Methods, systems, and computer readable media for unified scene acquisition and pose tracking in a wearable display Patent

US10365711B2, 2019, (Filed: 2013-05-17).

@patent{Fuchs2019,

title = {Methods, systems, and computer readable media for unified scene acquisition and pose tracking in a wearable display},

author = {Henry Fuchs and Mingsong Dou and Gregory Welch and Jan-Michael Frahm},

url = {https://patents.google.com/patent/US10365711B2/

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=10,365,711.PN.&OS=PN/10,365,711&RS=PN/10,365,711},

year = {2019},

date = {2019-07-30},

number = {US10365711B2},

abstract = {Methods, systems, and computer readable media for unified scene acquisition and pose tracking in a wearable display are disclosed. According to one aspect, a system for unified scene acquisition and pose tracking in a wearable display includes a wearable frame configured to be worn by a user. Mounted on the frame are: at least one sensor for acquiring scene information for a real scene proximate to the user, the scene information including images and depth information; a pose tracker for estimating the user's head pose based on the acquired scene information; a rendering unit for generating a virtual reality (VR) image based on the acquired scene information and estimated head pose; and at least one display for displaying to the user a combination of the generated VR image and the scene proximate to the user.},

note = {Filed: 2013-05-17},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Henry Fuchs; Gregory Welch

Methods, systems, and computer readable media for improved illumination of spatial augmented reality objects Patent

US10321107B2, 2019, (Filed: 2014-11-12).

@patent{Fuchs2019b,

title = {Methods, systems, and computer readable media for improved illumination of spatial augmented reality objects},

author = {Henry Fuchs and Gregory Welch},

url = {https://patents.google.com/patent/US10321107B2/

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=10321107.PN.&OS=PN/10321107&RS=PN/10321107},

year = {2019},

date = {2019-06-11},

number = {US10321107B2},

abstract = {A system for illuminating a spatial augmented reality object includes an augmented reality object including a projection surface having a plurality of apertures formed through the projection surface. The system further includes a lenslets layer including a plurality of lenslets and conforming to curved regions of the projection surface for directing light through the apertures. The system further includes a camera for measuring ambient illumination in an environment of the projection surface. The system further includes a projected image illumination adjustment module for adjusting illumination of a captured video image. The system further includes a projector for projecting the illumination adjusted captured video image onto the projection surface via the lenslets layer and the apertures.},

note = {Filed: 2014-11-12},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2017

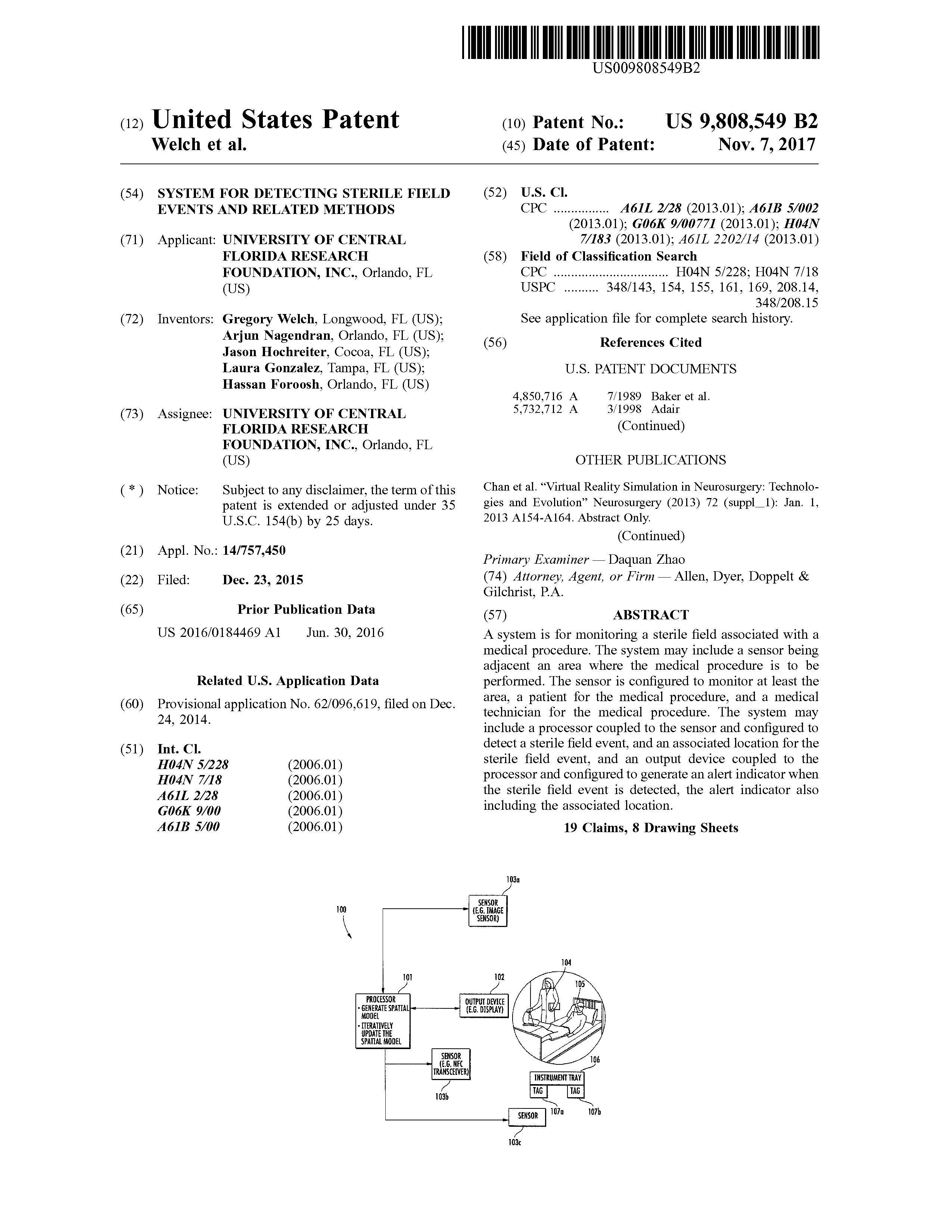

Gregory Welch; Arjun Nagendran; Jason Hochreiter; Laura Gonzalez; Hassan Foroosh

System for Detecting Sterile Field Events and Related Methods Patent

US 9808549B2, 2017, (Filed: 2015-12-23).

@patent{Welch2017ad,

title = {System for Detecting Sterile Field Events and Related Methods},

author = {Gregory Welch and Arjun Nagendran and Jason Hochreiter and Laura Gonzalez and Hassan Foroosh},

url = {https://patents.google.com/patent/US9808549B2/en?oq=US+9808549

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=/netahtml/PTO/srchnum.htm&r=1&f=G&l=50&s1=9,808,549.PN.&OS=PN/9,808,549&RS=PN/9,808,549},

year = {2017},

date = {2017-11-07},

number = {US 9808549B2},

location = {US},

abstract = {A system is for monitoring a sterile field associated with a medical procedure. The system may include a sensor being adjacent an area where the medical procedure is to be performed. The sensor is configured to monitor at least the area, a patient for the medical procedure, and a medical technician for the medical procedure. The system may include a processor coupled to the sensor and configured to detect a sterile field event, and an associated location for the sterile field event, and an output device coupled to the processor and configured to generate an alert indicator when the sterile field event is detected, the alert indicator also including the associated location.},

note = {Filed: 2015-12-23},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

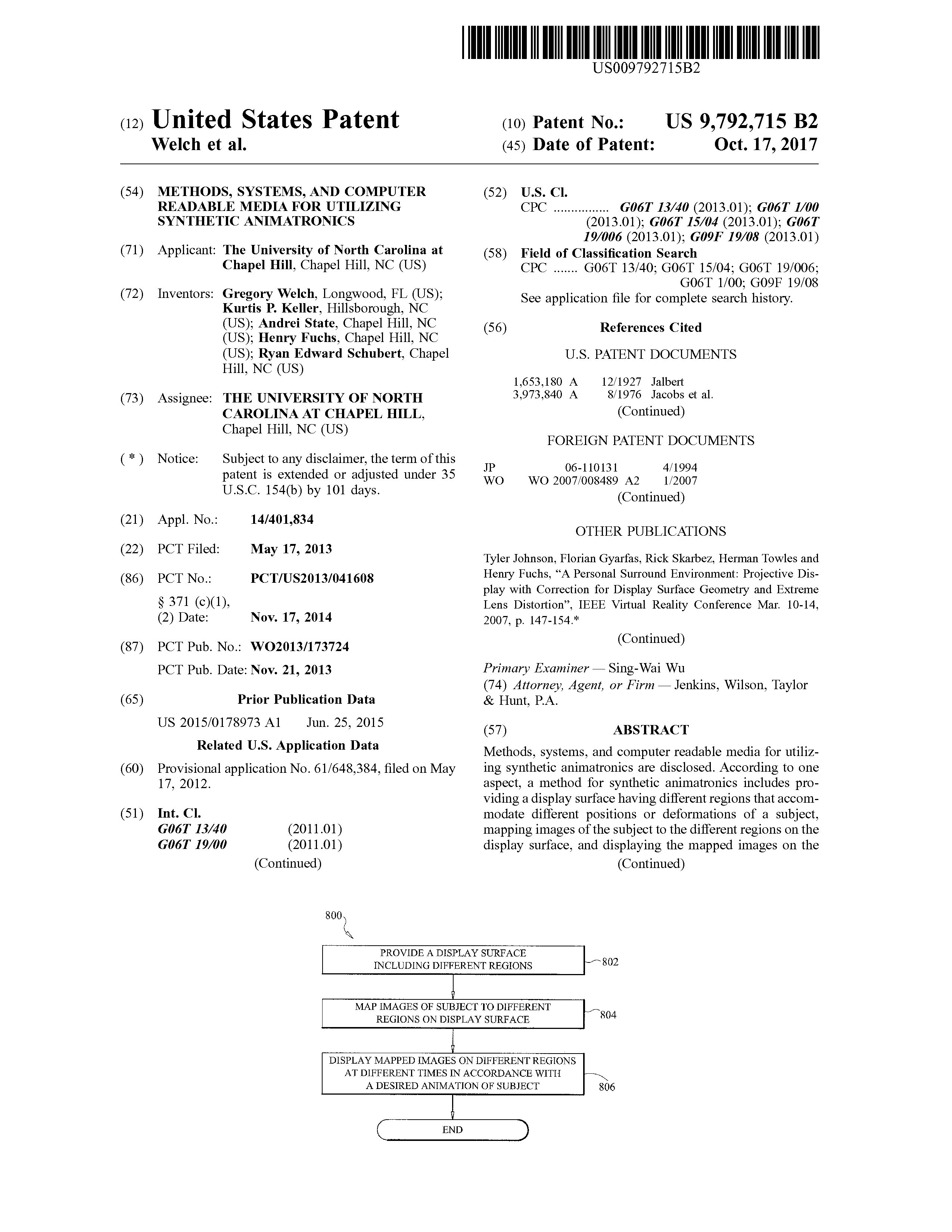

Gregory Welch; Kurtis Keller; Andrei State; Henry Fuchs; Ryan Schubert

Methods, systems, and computer readable media for utilizing synthetic animatronics Patent

US 9792715B2, 2017, (Filed: 2013-05-17).

@patent{Welch2017ac,

title = {Methods, systems, and computer readable media for utilizing synthetic animatronics},

author = {Gregory Welch and Kurtis Keller and Andrei State and Henry Fuchs and Ryan Schubert},

url = {https://patents.google.com/patent/US9792715B2/en?oq=US+9792715

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=9,792,715.PN.&OS=PN/9,792,715&RS=PN/9,792,715},

year = {2017},

date = {2017-10-17},

number = {US 9792715B2},

location = {US},

abstract = {Methods, systems, and computer readable media for utilizing synthetic animatronics are disclosed. According to one aspect, a method for synthetic animatronics includes providing a display surface having different regions that accommodate different positions or deformations of a subject, mapping images of the subject to the different regions on the display surface, and displaying the mapped images on the different regions of the display surface at different times in accordance with a desired animation of the subject.},

note = {Filed: 2013-05-17},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

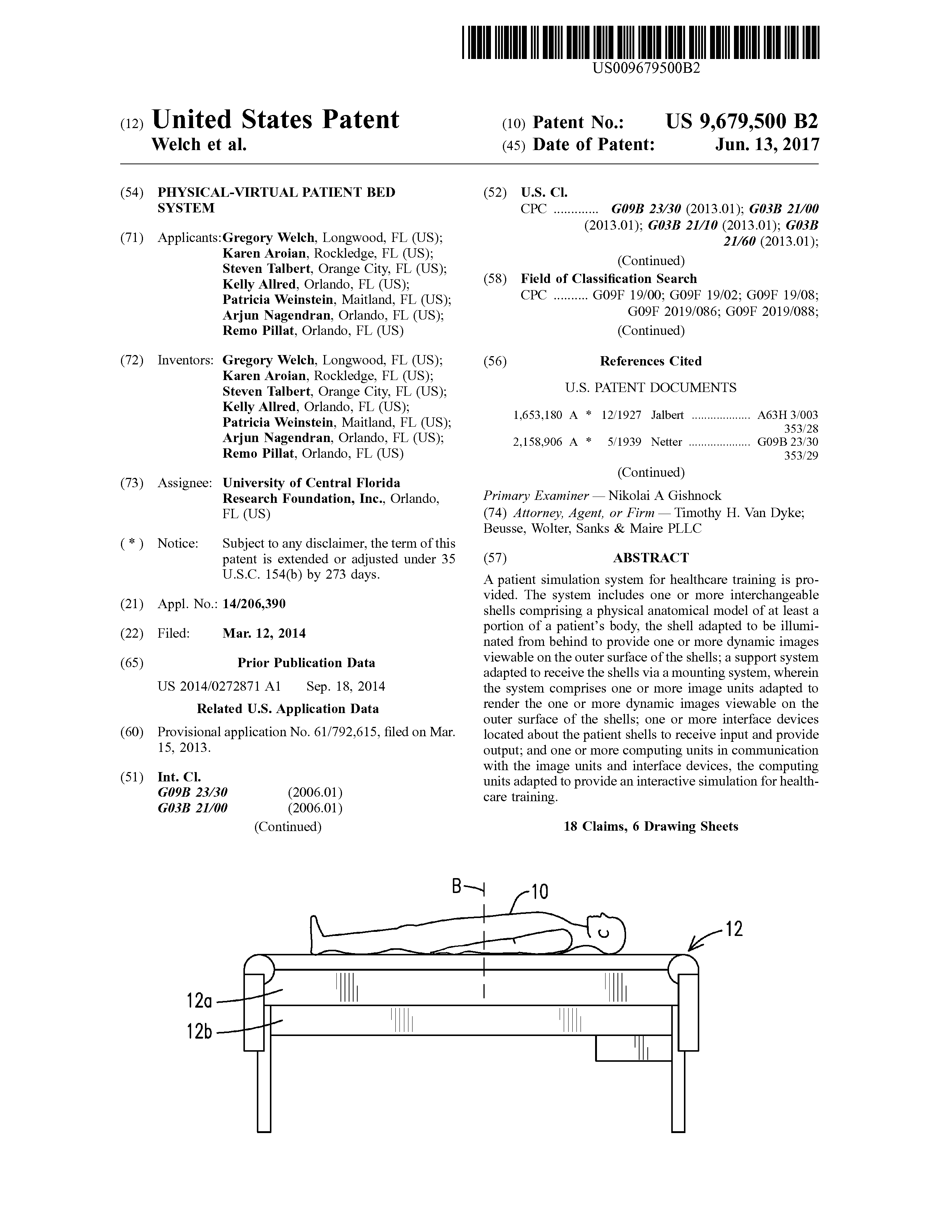

Gregory Welch; Karen Aroian; Steven Talbert; Kelly Allred; Patricia Weinstein; Arjun Nagendran; Remo Pillat

Physical-virtual patient bed system Patent

US 9679500B2, 2017, (Filed: 2014-03-12).

@patent{Welch2017ab,

title = {Physical-virtual patient bed system},

author = {Gregory Welch and Karen Aroian and Steven Talbert and Kelly Allred and Patricia Weinstein and Arjun Nagendran and Remo Pillat},

url = {https://patents.google.com/patent/US9679500B2/en?oq=US+9679500

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=9,679,500.PN.&OS=PN/9,679,500&RS=PN/9,679,500},

year = {2017},

date = {2017-06-13},

number = {US 9679500B2},

location = {US},

abstract = {A patient simulation system for healthcare training is provided. The system includes one or more interchangeable shells comprising a physical anatomical model of at least a portion of a patient's body, the shell adapted to be illuminated from behind to provide one or more dynamic images viewable on the outer surface of the shells; a support system adapted to receive the shells via a mounting system, wherein the system comprises one or more image units adapted to render the one or more dynamic images viewable on the outer surface of the shells; one or more interface devices located about the patient shells to receive input and provide output; and one or more computing units in communication with the image units and interface devices, the computing units adapted to provide an interactive simulation for healthcare training.},

note = {Filed: 2014-03-12},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

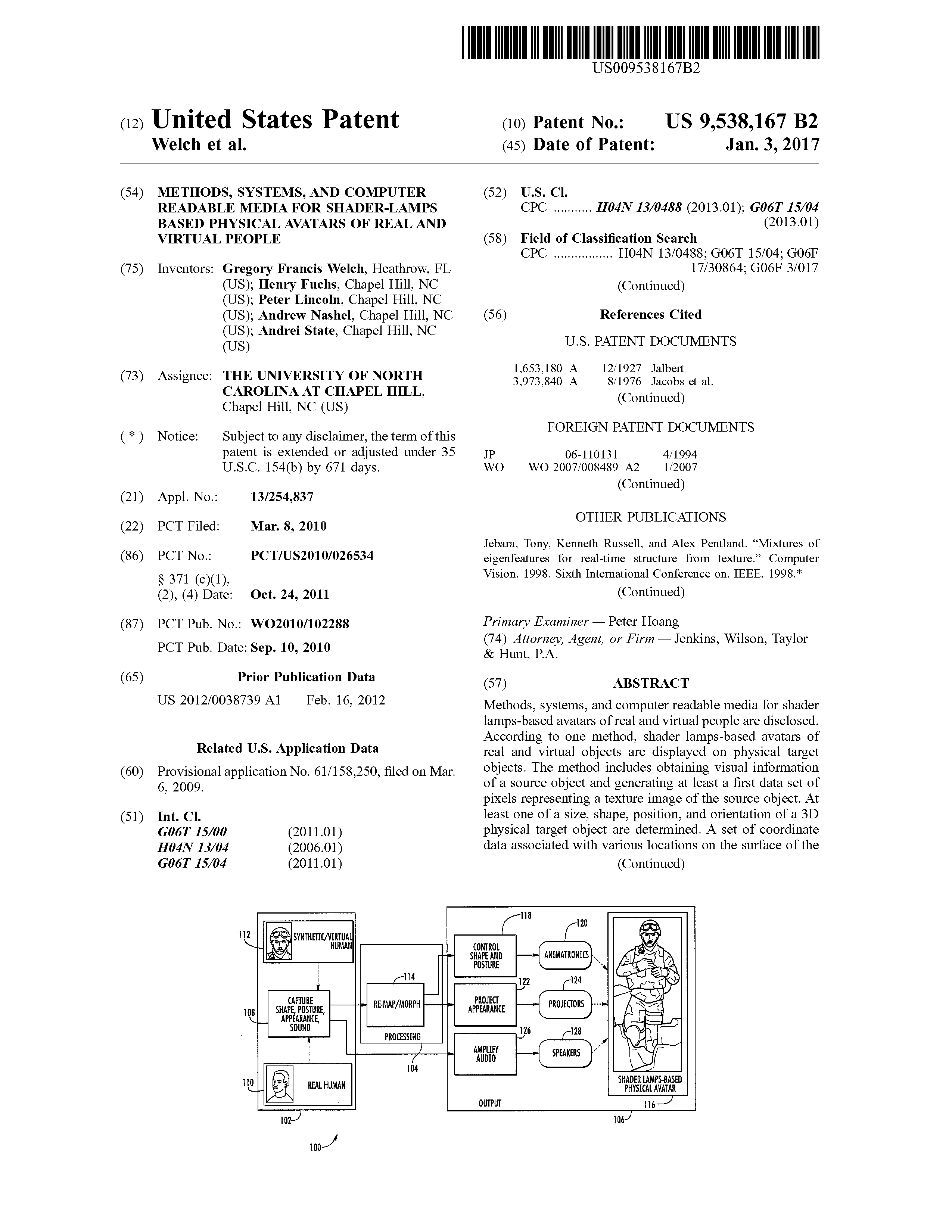

Gregory Welch; Henry Fuchs; Peter Lincoln; Andrew Nashel; Andrei State

Methods, systems, and computer readable media for shader-lamps based physical avatars of real and virtual people Patent

US 9538167B2, 2017, (Filed: 2010-03-08).

@patent{Welch2017aa,

title = {Methods, systems, and computer readable media for shader-lamps based physical avatars of real and virtual people},

author = {Gregory Welch and Henry Fuchs and Peter Lincoln and Andrew Nashel and Andrei State},

url = {https://patents.google.com/patent/US9538167B2/en?oq=US+9538167

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=9538167.PN.&OS=PN/9538167&RS=PN/9538167},

year = {2017},

date = {2017-01-03},

number = {US 9538167B2},

location = {US},

abstract = {Methods, systems, and computer readable media for shader lamps-based avatars of real and virtual people are disclosed. According to one method, shader lamps-based avatars of real and virtual objects are displayed on physical target objects. The method includes obtaining visual information of a source object and generating at least a first data set of pixels representing a texture image of the source object. At least one of a size, shape, position, and orientation of a 3D physical target object are determined. A set of coordinate data associated with various locations on the surface of the target object are also determined. The visual information is mapped to the physical target object. Mapping includes defining a relationship between the first and second sets of data, wherein each element of the first set is related to each element of the second set. The mapped visual information is displayed on the physical target object using a display module, such as one or more projectors located at various positions around the target object.},

note = {Filed: 2010-03-08},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2014

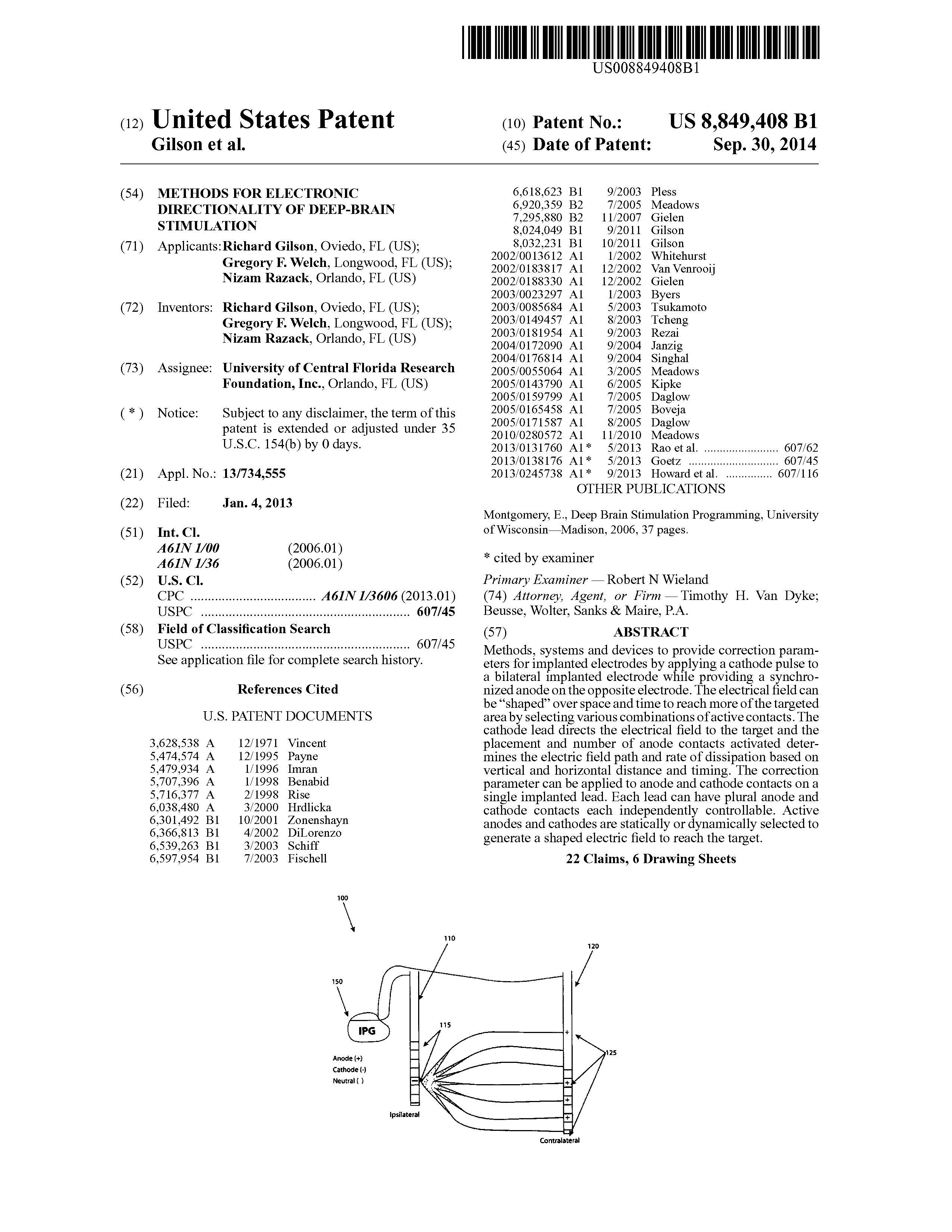

Richard Gilson; Greg Welch; Nizam Razack

Methods for Electronic Directionality of Deep-Brain Stimulation Patent

US 8849408B1, 2014, (Filed: 2013-01-04).

@patent{Gilson2014,

title = {Methods for Electronic Directionality of Deep-Brain Stimulation},

author = {Richard Gilson and Greg Welch and Nizam Razack},

url = {https://patents.google.com/patent/US8849408B1/en?oq=US+%238%2c849%2c408

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=8849408.PN.&OS=PN/8849408&RS=PN/8849408},

year = {2014},

date = {2014-09-30},

number = {US 8849408B1},

location = {US},

abstract = {Methods, systems and devices to provide correction parameters for implanted electrodes by applying a cathode pulse to a bilateral implanted electrode while providing a synchronized anode on the opposite electrode. The electrical field can be “shaped” over space and time to reach more of the targeted area by selecting various combinations of active contacts. The cathode lead directs the electrical field to the target and the placement and number of anode contacts activated determines the electric field path and rate of dissipation based on vertical and horizontal distance and timing. The correction parameter can be applied to anode and cathode contacts on a single implanted lead. Each lead can have plural anode and cathode contacts each independently controllable. Active anodes and cathodes are statically or dynamically selected to generate a shaped electric field to reach the target.},

note = {Filed: 2013-01-04},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2006

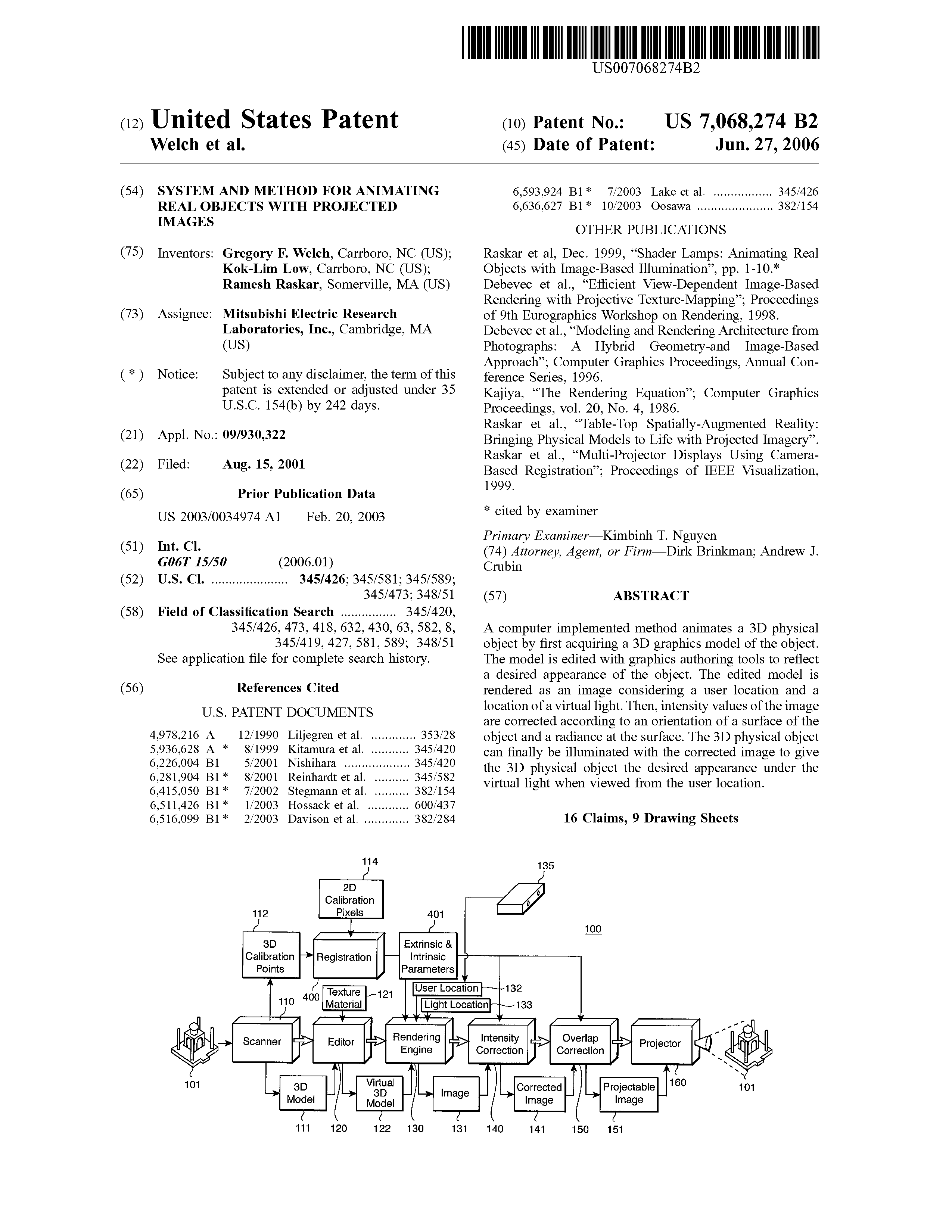

Greg Welch; Kok-Lim Low; Ramesh Raskar

System and method for animating real objects with projected images Patent

US 7068274B2, 2006, (Filed: 2001-08-15).

@patent{Welch2006c,

title = {System and method for animating real objects with projected images},

author = {Greg Welch and Kok-Lim Low and Ramesh Raskar},

url = {https://patents.google.com/patent/US7068274B2/en?oq=US+7%2c068%2c274

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&p=1&u=%2Fnetahtml%2FPTO%2Fsearch-bool.html&r=1&f=G&l=50&co1=AND&d=PTXT&s1=7068274.PN.&OS=PN/7068274&RS=PN/7068274},

year = {2006},

date = {2006-06-27},

number = {US 7068274B2},

location = {US},

abstract = {A computer implemented method animates a 3D physical object by first acquiring a 3D graphics model of the object. The model is edited with graphics authoring tools to reflect a desired appearance of the object. The edited model is rendered as an image considering a user location and a location of a virtual light. Then, intensity values of the image are corrected according to an orientation of a surface of the object and a radiance at the surface. The 3D physical object can finally be illuminated with the corrected image to give the 3D physical object the desired appearance under the virtual light when viewed from the user location.},

note = {Filed: 2001-08-15},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2005

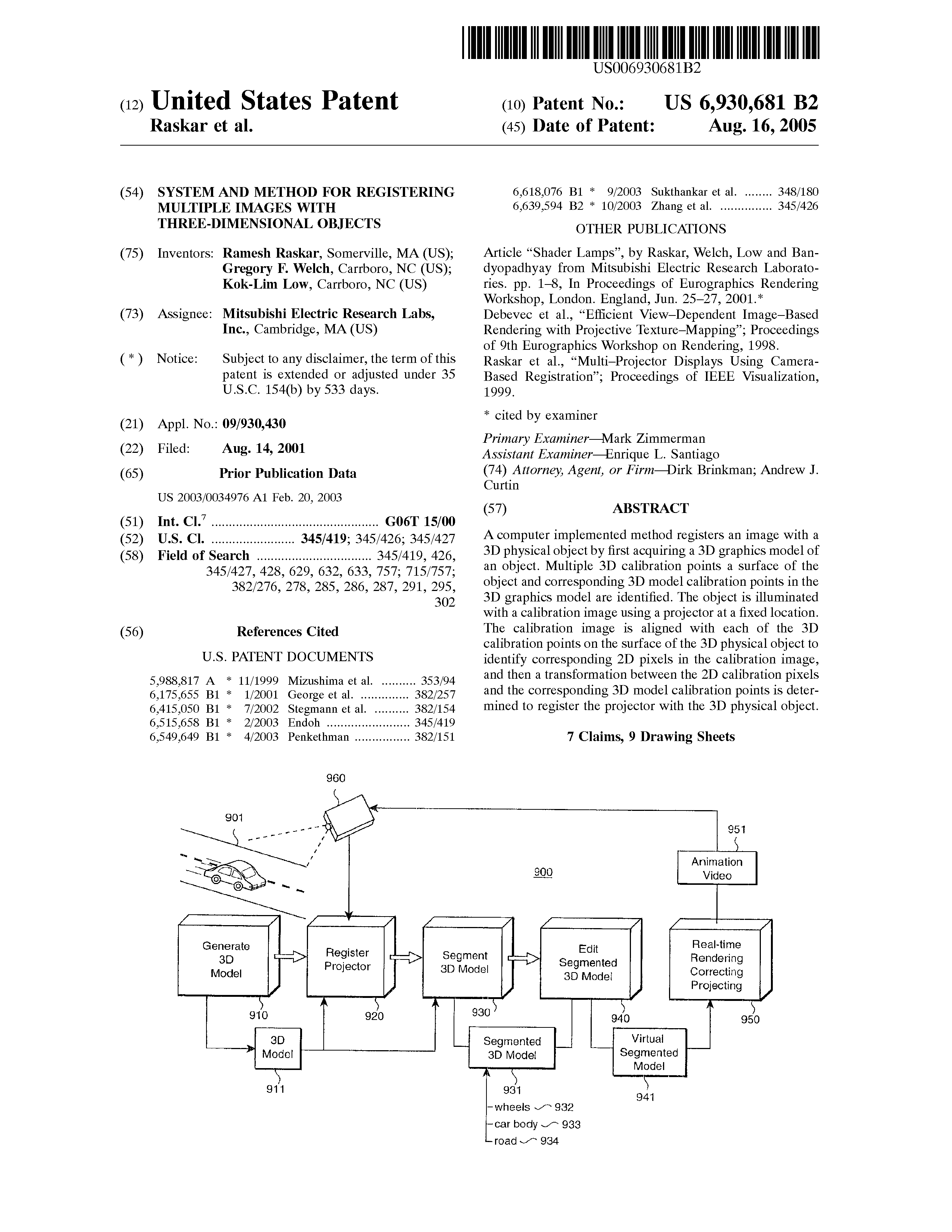

Ramesh Raskar; Gregory F. Welch; Kok-Lim Low

System and method for registering multiple images with three-dimensional objects Patent

US 6930681B2, 2005, (Filed: 2001-08-14).

@patent{Raskar2005,

title = {System and method for registering multiple images with three-dimensional objects},

author = {Ramesh Raskar and Gregory F. Welch and Kok-Lim Low},

url = {https://patents.google.com/patent/US6930681B2/en?oq=US+6%2c930%2c681

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&p=1&u=%2Fnetahtml%2FPTO%2Fsearch-bool.html&r=1&f=G&l=50&co1=AND&d=PTXT&s1=6,930,681.PN.&OS=PN/6,930,681&RS=PN/6930681},

year = {2005},

date = {2005-08-16},

number = {US 6930681B2},

location = {US},

abstract = {A computer implemented method registers an image with a 3D physical object by first acquiring a 3D graphics model of an object. Multiple 3D calibration points on a surface of the object and corresponding 3D model calibration points in the 3D graphics model are identified. The object is illuminated with a calibration image using a projector at a fixed location. The calibration image is aligned with each of the 3D calibration points on the surface of the 3D physical object to identify corresponding 2D pixels in the calibration image, and then a transformation between the 2D calibration pixels and the corresponding 3D model calibration points is determined to register the projector with the 3D physical object.},

note = {Filed: 2001-08-14},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

2004

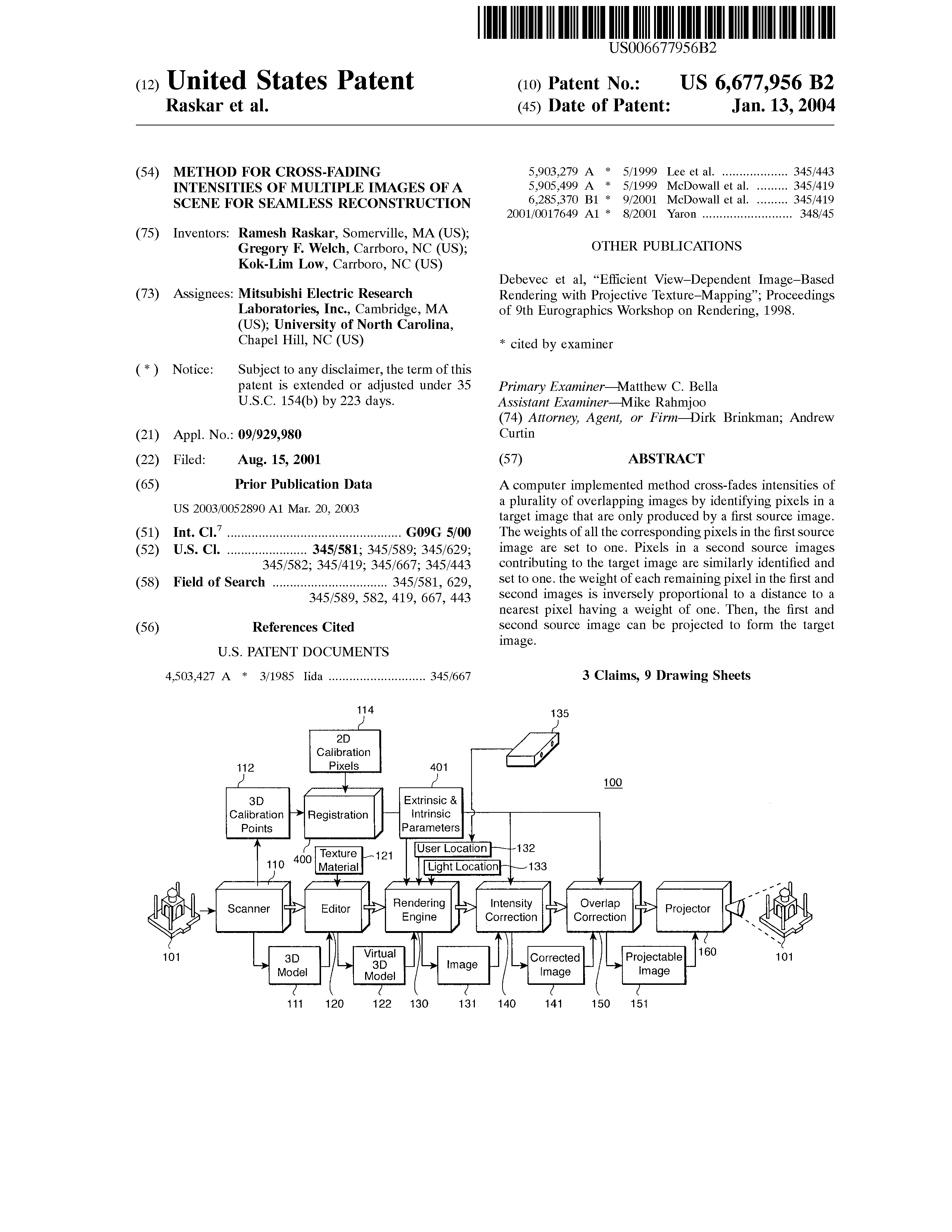

Ramesh Raskar; Gregory F. Welch; Kok-Lim Low

Method for cross-fading intensities of multiple images of a scene for seamless reconstruction Patent

US 6677956B2, 2004, (Filed: 2001-08-15).

@patent{Raskar2004,

title = {Method for cross-fading intensities of multiple images of a scene for seamless reconstruction},

author = {Ramesh Raskar and Gregory F. Welch and Kok-Lim Low},

url = {https://patents.google.com/patent/US6677956B2/en?oq=US+6%2c677%2c956

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=6,677,956.PN.&OS=PN/6,677,956&RS=PN/6,677,956},

year = {2004},

date = {2004-01-13},

number = {US 6677956B2},

location = {US},

abstract = {A computer implemented method cross-fades intensities of a plurality of overlapping images by identifying pixels in a target image that are only produced by a first source image. The weights of all the corresponding pixels in the first source image are set to one. Pixels in a second source image contributing to the target image are similarly identified and set to one. The weight of each remaining pixel in the first and second images is inversely proportional to a distance to a nearest pixel having a weight of one. Then, the first and second source images can be projected to form the target image.},

note = {Filed: 2001-08-15},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

1999

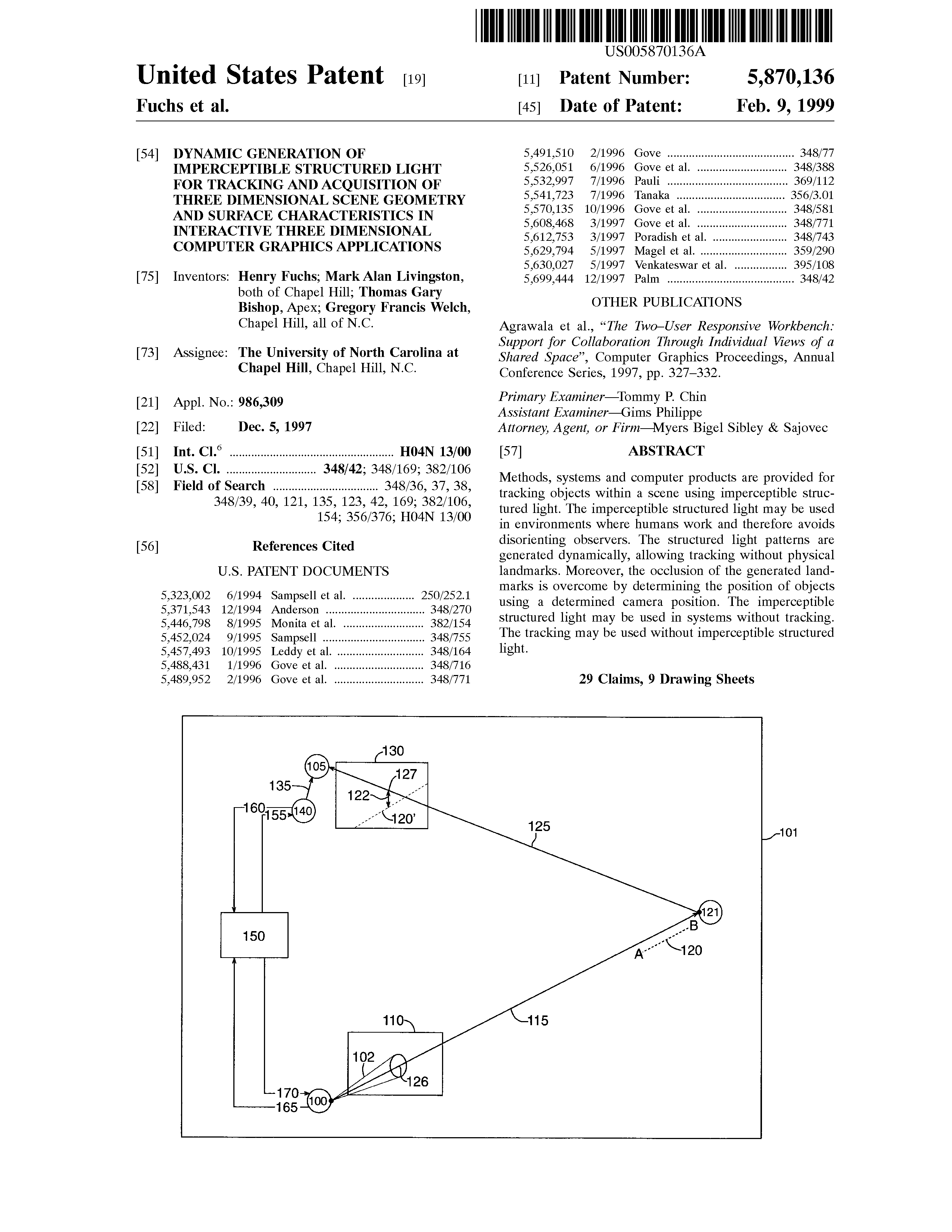

Henry Fuchs; Mark Livingston; Gary Bishop; Gregory Francis Welch

Dynamic generation of imperceptible structured light for tracking and acquisition of three dimensional scene geometry and surface characteristics in interactive three dimensional computer graphics applications Patent

US 5870136A, 1999, (Filed: 1997-12-05).

@patent{Fuchs1999b,

title = {Dynamic generation of imperceptible structured light for tracking and acquisition of three dimensional scene geometry and surface characteristics in interactive three dimensional computer graphics applications},

author = {Henry Fuchs and Mark Livingston and Gary Bishop and Gregory Francis Welch},

url = {https://patents.google.com/patent/US5870136A/en?oq=US+5%2c870%2c136

http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=5,870,136.PN.&OS=PN/5,870,136&RS=PN/5,870,136},

year = {1999},

date = {1999-02-09},

number = {US 5870136A},

location = {US},

abstract = {Methods, systems and computer products are provided for tracking objects within a scene using imperceptible structured light. The imperceptible structured light may be used in environments where humans work and therefore avoids disorienting observers. The structured light patterns are generated dynamically, allowing tracking without physical landmarks. Moreover, the occlusion of the generated landmarks is overcome by determining the position of objects using a determined camera position. The imperceptible structured light may be used in systems without tracking. The tracking may be used without imperceptible structured light.},

note = {Filed: 1997-12-05},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}