Dr. Gerd Bruder – Publications

NOTICE: This material is presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author’s copyright. In most cases, these works may not be reposted without the explicit permission of the copyright holder.

2006


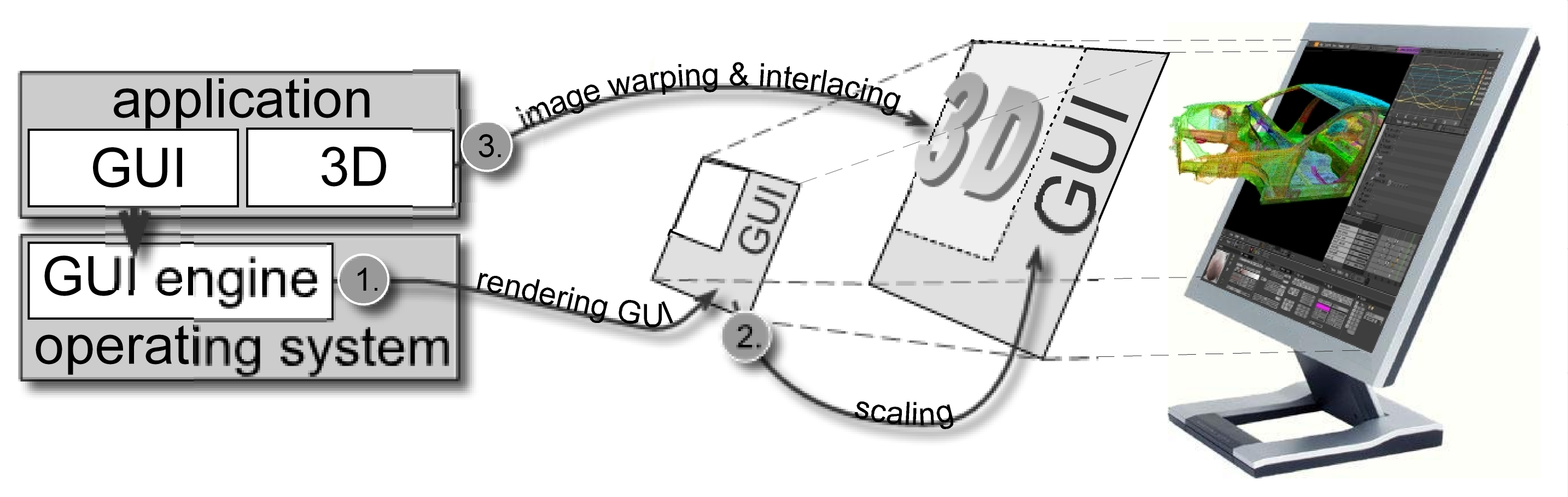

Simultaneously Viewing Monoscopic and Stereoscopic Content on Vertical-Interlaced Autostereoscopic Displays. Proceedings Article. In: Proceedings of the ACM International Conference and Exhibition on Computer Graphics and Interactive Techniques (SIGGRAPH) (Conference DVD), ACM Press, 2006.

A Multiple View System for Modeling Building Entities. Proceedings Article. In: Proceedings of the International Conference on Coordinated & Multiple Views in Exploratory Visualization, pp. 69–78, IEEE Press, 2006.