2023

|

| Zubin Choudhary; Gerd Bruder; Greg Welch Visual Hearing Aids: Artificial Visual Speech Stimuli for Audiovisual Speech Perception in Noise Conference Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology, 2023, 2023. @conference{Choudhary2023aids,

title = {Visual Hearing Aids: Artificial Visual Speech Stimuli for Audiovisual Speech Perception in Noise},

author = {Zubin Choudhary and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/09/MAIN_VRST_23_SpeechPerception_Phone.pdf},

year = {2023},

date = {2023-10-09},

urldate = {2023-10-09},

booktitle = {Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology, 2023},

abstract = {Speech perception is optimal in quiet environments, but noise can impair comprehension and increase errors. In these situations, lip reading can help, but it is not always possible, such as during an audio call or when wearing a face mask. One approach to improve speech perception in these situations is to use an artificial visual lip reading aid. In this paper, we present a user study (𝑁 = 17) in which we compared three levels of audio stimuli visualizations and two levels of modulating the appearance of the visualization based on the speech signal, and we compared them against two control conditions: an audio-only condition, and a real human speaking. We measured participants’ speech reception thresholds (SRTs) to understand the effects of these visualizations on speech perception in noise. These thresholds indicate the decibel levels of the speech signal that are necessary for a listener to receive the speech correctly 50% of the time. Additionally, we measured the usability of the approaches and the user experience. We found that the different artificial visualizations improved participants’ speech reception compared to the audio-only baseline condition, but they were significantly poorer than the real human condition. This suggests that different visualizations can improve speech perception when the speaker’s face is not available. However, we also discuss limitations of current plug-and-play lip sync software and abstract representations of the speaker in the context of speech perception.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

Speech perception is optimal in quiet environments, but noise can impair comprehension and increase errors. In these situations, lip reading can help, but it is not always possible, such as during an audio call or when wearing a face mask. One approach to improve speech perception in these situations is to use an artificial visual lip reading aid. In this paper, we present a user study (𝑁 = 17) in which we compared three levels of audio stimuli visualizations and two levels of modulating the appearance of the visualization based on the speech signal, and we compared them against two control conditions: an audio-only condition, and a real human speaking. We measured participants’ speech reception thresholds (SRTs) to understand the effects of these visualizations on speech perception in noise. These thresholds indicate the decibel levels of the speech signal that are necessary for a listener to receive the speech correctly 50% of the time. Additionally, we measured the usability of the approaches and the user experience. We found that the different artificial visualizations improved participants’ speech reception compared to the audio-only baseline condition, but they were significantly poorer than the real human condition. This suggests that different visualizations can improve speech perception when the speaker’s face is not available. However, we also discuss limitations of current plug-and-play lip sync software and abstract representations of the speaker in the context of speech perception. |

| Ryan Schubert; Gerd Bruder; Gregory F. Welch Intuitive User Interfaces for Real-Time Magnification in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST), pp. 1–10, 2023, (Best Paper Award). @inproceedings{schubert2023iu,

title = {Intuitive User Interfaces for Real-Time Magnification in Augmented Reality },

author = {Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/08/vrst23_bruder2023iu.pdf},

year = {2023},

date = {2023-10-09},

urldate = {2023-10-09},

booktitle = {Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST)},

pages = {1--10},

abstract = {Various reasons exist why humans desire to magnify portions of our visually perceived surroundings, e.g., because they are too far away or too small to see with the naked eye. Different technologies are used to facilitate magnification, from telescopes to microscopes using monocular or binocular designs. In particular, modern digital cameras capable of optical and/or digital zoom are very flexible as their high-resolution imagery can be presented to users in real-time with displays and interfaces allowing control over the magnification. In this paper, we present a novel design space of intuitive augmented reality (AR) magnifications where an AR head-mounted display is used for the presentation of real-time magnified camera imagery. We present a user study evaluating and comparing different visual presentation methods and AR interaction techniques. Our results show different advantages for unimanual, bimanual, and situated AR magnification window interfaces, near versus far vergence distances for the image presentation, and five different user interfaces for specifying the scaling factor of the imagery.},

note = {Best Paper Award},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Various reasons exist why humans desire to magnify portions of our visually perceived surroundings, e.g., because they are too far away or too small to see with the naked eye. Different technologies are used to facilitate magnification, from telescopes to microscopes using monocular or binocular designs. In particular, modern digital cameras capable of optical and/or digital zoom are very flexible as their high-resolution imagery can be presented to users in real-time with displays and interfaces allowing control over the magnification. In this paper, we present a novel design space of intuitive augmented reality (AR) magnifications where an AR head-mounted display is used for the presentation of real-time magnified camera imagery. We present a user study evaluating and comparing different visual presentation methods and AR interaction techniques. Our results show different advantages for unimanual, bimanual, and situated AR magnification window interfaces, near versus far vergence distances for the image presentation, and five different user interfaces for specifying the scaling factor of the imagery. |

| Zubin Choudhary; Nahal Norouzi; Austin Erickson; Ryan Schubert; Gerd Bruder; Gregory F. Welch Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions Conference Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023, 2023. @conference{Choudhary2023,

title = {Exploring the Social Influence of Virtual Humans Unintentionally Conveying Conflicting Emotions},

author = {Zubin Choudhary and Nahal Norouzi and Austin Erickson and Ryan Schubert and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2023/01/PostReview_ConflictingEmotions_IEEEVR23-1.pdf},

year = {2023},

date = {2023-03-29},

urldate = {2023-03-29},

booktitle = {Proceedings of the 30th IEEE Conference on Virtual Reality and 3D User Interfaces, IEEE VR 2023},

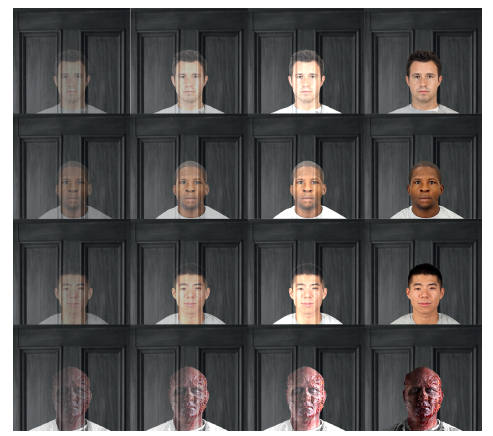

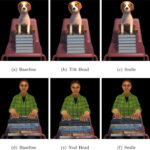

abstract = {The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e. the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the

avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22 ) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigate three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggest that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increase, the less predictable the multimodal expression becomes. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness rating and high trust, however if one of the two expressions change, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

The expression of human emotion is integral to social interaction, and in virtual reality it is increasingly common to develop virtual avatars that attempt to convey emotions by mimicking these visual and aural cues, i.e. the facial and vocal expressions. However, errors in (or the absence of) facial tracking can result in the rendering of incorrect facial expressions on these virtual avatars. For example, a virtual avatar may speak with a happy or unhappy vocal inflection while their facial expression remains otherwise neutral. In circumstances where there is conflict between the avatar’s facial and vocal expressions, it is possible that users will incorrectly interpret the

avatar’s emotion, which may have unintended consequences in terms of social influence or in terms of the outcome of the interaction.

In this paper, we present a human-subjects study (N = 22 ) aimed at understanding the impact of conflicting facial and vocal emotional expressions. Specifically we explored three levels of emotional valence (unhappy, neutral, and happy) expressed in both visual (facial) and aural (vocal) forms. We also investigate three levels of head scales (down-scaled, accurate, and up-scaled) to evaluate whether head scale affects user interpretation of the conveyed emotion. We find significant effects of different multimodal expressions on happiness and trust perception, while no significant effect was observed for head scales. Evidence from our results suggest that facial expressions have a stronger impact than vocal expressions. Additionally, as the difference between the two expressions increase, the less predictable the multimodal expression becomes. For example, for the happy-looking and happy-sounding multimodal expression, we expect and see high happiness rating and high trust, however if one of the two expressions change, this mismatch makes the expression less predictable. We discuss the relationships, implications, and guidelines for social applications that aim to leverage multimodal social cues. |

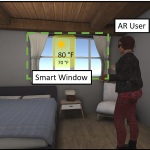

| Kangsoo Kim; Nahal Norouzi; Dongsik Jo; Gerd Bruder; Greg Welch The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality Book Chapter In: Nee, Andrew Yeh Ching; Ong, Soh Khim (Ed.): Springer Handbook of Augmented Reality, pp. 797–829, Springer International Publishing, Cham, 2023, ISBN: 978-3-030-67822-7. @inbook{Kim2023aa,

title = {The Augmented Reality Internet of Things: Opportunities of Embodied Interactions in Transreality},

author = {Kangsoo Kim and Nahal Norouzi and Dongsik Jo and Gerd Bruder and Greg Welch},

editor = {Andrew Yeh Ching Nee and Soh Khim Ong},

url = {https://doi.org/10.1007/978-3-030-67822-7_32},

doi = {10.1007/978-3-030-67822-7_32},

isbn = {978-3-030-67822-7},

year = {2023},

date = {2023-01-01},

urldate = {2023-01-01},

booktitle = {Springer Handbook of Augmented Reality},

pages = {797--829},

publisher = {Springer International Publishing},

address = {Cham},

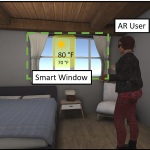

abstract = {Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, augmented/mixed reality (AR/MR), which combines virtual content with the real environment, is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced artificial intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives.},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

Human society is encountering a new wave of advancements related to smart connected technologies with the convergence of different traditionally separate fields, which can be characterized by a fusion of technologies that merge and tightly integrate the physical, digital, and biological spheres. In this new paradigm of convergence, all the physical and digital things will become more and more intelligent and connected to each other through the Internet, and the boundary between them will blur and become seamless. In particular, augmented/mixed reality (AR/MR), which combines virtual content with the real environment, is experiencing an unprecedented golden era along with dramatic technological achievements and increasing public interest. Together with advanced artificial intelligence (AI) and ubiquitous computing empowered by the Internet of Things/Everything (IoT/IoE) systems, AR can be our ultimate interface to interact with both digital (virtual) and physical (real) worlds while pervasively mediating and enriching our lives. |

2022

|

| Zubin Choudhary; Austin Erickson; Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Greg Welch Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues Journal Article In: ACM Transactions on Applied Perception, 2022. @article{Choudhary2022,

title = {Virtual Big Heads in Extended Reality: Estimation of Ideal Head Scales and Perceptual Thresholds for Comfort and Facial Cues},

author = {Zubin Choudhary and Austin Erickson and Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Greg Welch},

url = {https://drive.google.com/file/d/1jdxwLchDH0RPouVENoSx8iSOyDmJhqKb/view?usp=sharing},

year = {2022},

date = {2022-11-02},

urldate = {2022-11-02},

journal = {ACM Transactions on Applied Perception},

abstract = {Extended reality (XR) technologies, such as virtual reality (VR) and augmented reality (AR), provide users, their avatars, and embodied agents a shared platform to collaborate in a spatial context. While traditional face-to-face communication is limited by users' proximity, meaning that another human's non-verbal embodied cues become more difficult to perceive the farther one is away from that person, In this paper, we describe and evaluate the ``Big Head'' technique, in which a human's head in VR/AR is scaled up relative to their distance from the observer as a mechanism for enhancing the visibility of non-verbal facial cues, such as facial expressions or eye gaze. To better understand and explore this technique, we present two complimentary human-subject experiments in this paper.

In our first experiment, we conducted a VR study with a head-mounted display (HMD) to understand the impact of increased or decreased head scales on participants' ability to perceive facial expressions as well as their sense of comfort and feeling of ``uncannniness'' over distances of up to 10 meters. We explored two different scaling methods and compared perceptual thresholds and user preferences. Our second experiment was performed in an outdoor AR environment with an optical see-through (OST) HMD. Participants were asked to estimate facial expressions and eye gaze, and identify a virtual human over large distances of 30, 60, and 90 meters. In both experiments, our results show significant differences in minimum, maximum, and ideal head scales for different distances and tasks related to perceiving faces, facial expressions, and eye gaze, while we also found that participants were more comfortable with slightly bigger heads at larger distances. We discuss our findings with respect to the technologies used, and we discuss implications and guidelines for practical applications that aim to leverage XR-enhanced facial cues.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Extended reality (XR) technologies, such as virtual reality (VR) and augmented reality (AR), provide users, their avatars, and embodied agents a shared platform to collaborate in a spatial context. While traditional face-to-face communication is limited by users' proximity, meaning that another human's non-verbal embodied cues become more difficult to perceive the farther one is away from that person, In this paper, we describe and evaluate the ``Big Head'' technique, in which a human's head in VR/AR is scaled up relative to their distance from the observer as a mechanism for enhancing the visibility of non-verbal facial cues, such as facial expressions or eye gaze. To better understand and explore this technique, we present two complimentary human-subject experiments in this paper.

In our first experiment, we conducted a VR study with a head-mounted display (HMD) to understand the impact of increased or decreased head scales on participants' ability to perceive facial expressions as well as their sense of comfort and feeling of ``uncannniness'' over distances of up to 10 meters. We explored two different scaling methods and compared perceptual thresholds and user preferences. Our second experiment was performed in an outdoor AR environment with an optical see-through (OST) HMD. Participants were asked to estimate facial expressions and eye gaze, and identify a virtual human over large distances of 30, 60, and 90 meters. In both experiments, our results show significant differences in minimum, maximum, and ideal head scales for different distances and tasks related to perceiving faces, facial expressions, and eye gaze, while we also found that participants were more comfortable with slightly bigger heads at larger distances. We discuss our findings with respect to the technologies used, and we discuss implications and guidelines for practical applications that aim to leverage XR-enhanced facial cues. |

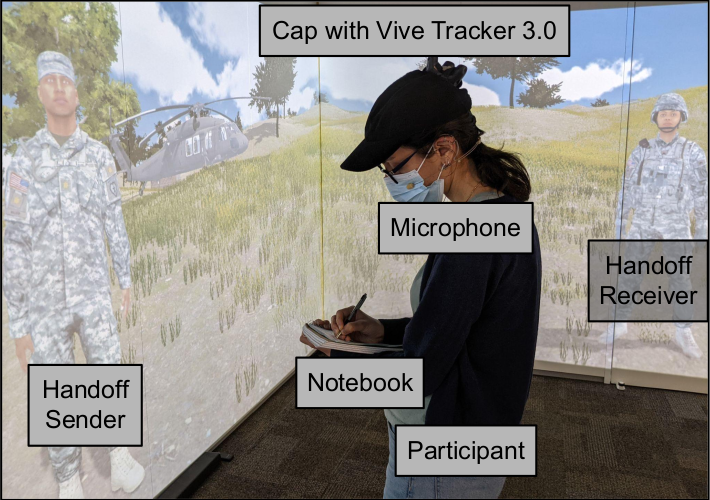

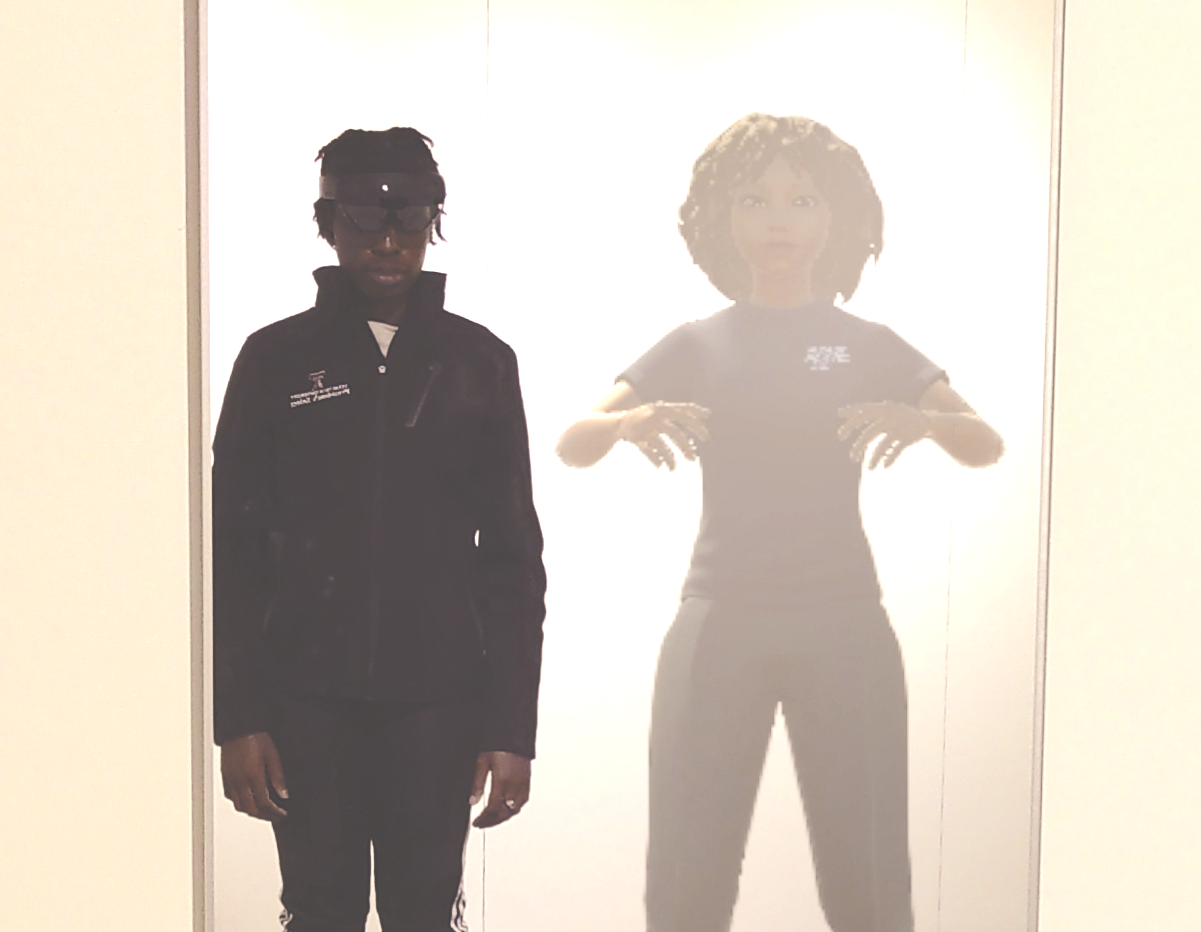

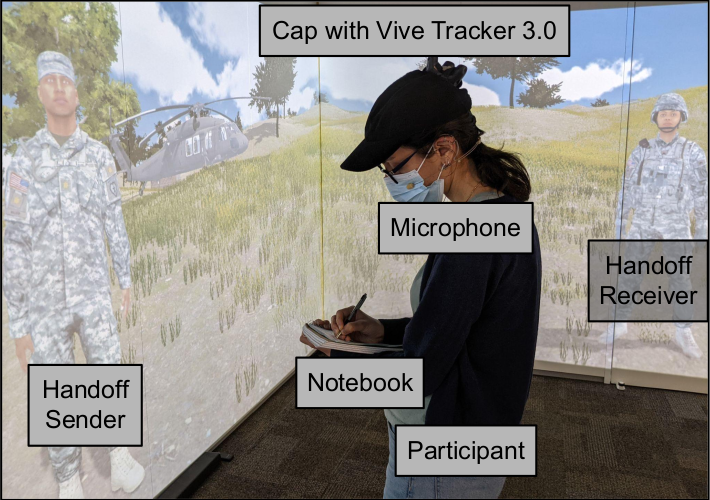

| Matt Gottsacker; Nahal Norouzi; Ryan Schubert; Frank Guido-Sanz; Gerd Bruder; Gregory F. Welch Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation Proceedings Article In: 28th ACM Symposium on Virtual Reality Software and Technology (VRST '22), pp. 1-10, 2022, ISBN: 978-1-4503-9889-3/22/11. @inproceedings{gottsacker2022noise,

title = {Effects of Environmental Noise Levels on Patient Handoff Communication in a Mixed Reality Simulation},

author = {Matt Gottsacker and Nahal Norouzi and Ryan Schubert and Frank Guido-Sanz and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/main.pdf},

doi = {10.1145/3562939.3565627},

isbn = {978-1-4503-9889-3/22/11},

year = {2022},

date = {2022-10-27},

urldate = {2022-10-27},

booktitle = {28th ACM Symposium on Virtual Reality Software and Technology (VRST '22)},

pages = {1-10},

abstract = {When medical caregivers transfer patients to another person's care (a patient handoff), it is essential they effectively communicate the patient's condition to ensure the best possible health outcomes. Emergency situations caused by mass casualty events (e.g., natural disasters) introduce additional difficulties to handoff procedures such as environmental noise. We created a projected mixed reality simulation of a handoff scenario involving a medical evacuation by air and tested how low, medium, and high levels of helicopter noise affected participants' handoff experience, handoff performance, and behaviors. Through a human-subjects experimental design study (N = 21), we found that the addition of noise increased participants' subjective stress and task load, decreased their self-assessed and actual performance, and caused participants to speak louder. Participants also stood closer to the virtual human sending the handoff information when listening to the handoff than they stood to the receiver when relaying the handoff information. We discuss implications for the design of handoff training simulations and avenues for future handoff communication research.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

When medical caregivers transfer patients to another person's care (a patient handoff), it is essential they effectively communicate the patient's condition to ensure the best possible health outcomes. Emergency situations caused by mass casualty events (e.g., natural disasters) introduce additional difficulties to handoff procedures such as environmental noise. We created a projected mixed reality simulation of a handoff scenario involving a medical evacuation by air and tested how low, medium, and high levels of helicopter noise affected participants' handoff experience, handoff performance, and behaviors. Through a human-subjects experimental design study (N = 21), we found that the addition of noise increased participants' subjective stress and task load, decreased their self-assessed and actual performance, and caused participants to speak louder. Participants also stood closer to the virtual human sending the handoff information when listening to the handoff than they stood to the receiver when relaying the handoff information. We discuss implications for the design of handoff training simulations and avenues for future handoff communication research. |

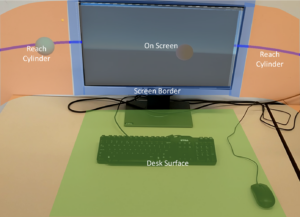

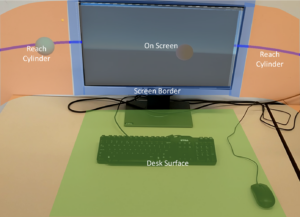

| Robbe Cools; Matt Gottsacker; Adalberto Simeone; Gerd Bruder; Gregory F. Welch; Steven Feiner Towards a Desktop–AR Prototyping Framework: Prototyping Cross-Reality Between Desktops and Augmented Reality Proceedings Article In: Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 175-182, 2022. @inproceedings{gottsacker2022desktopar,

title = {Towards a Desktop–AR Prototyping Framework: Prototyping Cross-Reality Between Desktops and Augmented Reality},

author = {Robbe Cools and Matt Gottsacker and Adalberto Simeone and Gerd Bruder and Gregory F. Welch and Steven Feiner},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/ISMAR2022_Workshop_on_Prototyping_Cross_Reality_Systems.pdf},

doi = {10.1109/ISMAR-Adjunct57072.2022.00040},

year = {2022},

date = {2022-10-22},

urldate = {2022-10-22},

booktitle = {Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages = {175-182},

abstract = {Augmented reality (AR) head-worn displays (HWDs) allow users to view and interact with virtual objects anchored in the 3D space around them. These devices extend users’ digital interaction space compared to traditional desktop computing environments by both allowing users to interact with a larger virtual display and by affording new interactions (e.g., intuitive 3D manipulations) with virtual content. Yet, 2D desktop displays still have advantages over AR HWDs for common computing tasks and will continue to be used well into the future. Because of their not entirely overlapping set of affordances, AR HWDs and 2D desktops may be useful in a hybrid configuration; that is, users may benefit from being able to

work on computing tasks in either environment (or simultaneously in both environments) while transitioning virtual content between them. In support of such computing environments, we propose a prototyping framework for bidirectional Cross-Reality interactions between a desktop and an AR HWD. We further implemented a proof-of-concept seamless Desktop–AR display space, and describe two concrete use cases for our framework. In future work we aim to further develop our proof-of-concept into the proposed framework.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Augmented reality (AR) head-worn displays (HWDs) allow users to view and interact with virtual objects anchored in the 3D space around them. These devices extend users’ digital interaction space compared to traditional desktop computing environments by both allowing users to interact with a larger virtual display and by affording new interactions (e.g., intuitive 3D manipulations) with virtual content. Yet, 2D desktop displays still have advantages over AR HWDs for common computing tasks and will continue to be used well into the future. Because of their not entirely overlapping set of affordances, AR HWDs and 2D desktops may be useful in a hybrid configuration; that is, users may benefit from being able to

work on computing tasks in either environment (or simultaneously in both environments) while transitioning virtual content between them. In support of such computing environments, we propose a prototyping framework for bidirectional Cross-Reality interactions between a desktop and an AR HWD. We further implemented a proof-of-concept seamless Desktop–AR display space, and describe two concrete use cases for our framework. In future work we aim to further develop our proof-of-concept into the proposed framework. |

![[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays](https://sreal.ucf.edu/wp-content/uploads/2022/10/phonefigure2-e1664926618216-150x150.png) | Austin Erickson; Gerd Bruder; Gregory F Welch [Poster] Adapting Michelson Contrast for use with Optical See-Through Displays Proceedings Article In: In Adjunct Proceedings of the IEEE International Symposium on Mixed and Augmented Reality, pp. 1–2, IEEE IEEE, 2022. @inproceedings{Erickson2022c,

title = {[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays},

author = {Austin Erickson and Gerd Bruder and Gregory F Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/ISMARContrastModel_POSTER.pdf},

year = {2022},

date = {2022-10-17},

urldate = {2022-10-17},

booktitle = {In Adjunct Proceedings of the IEEE International Symposium on Mixed and Augmented Reality},

pages = {1--2},

publisher = {IEEE},

organization = {IEEE},

abstract = {Due to the additive light model employed by current optical see-through head-mounted displays (OST-HMDs), the perceived contrast of displayed imagery is reduced with increased environment luminance, often to the point where it becomes difficult for the user to accurately distinguish the presence of visual imagery. While existing contrast models, such as Weber contrast and Michelson contrast, can be used to predict when the observer will experience difficulty distinguishing and interpreting stimuli on traditional displays, these models must be adapted for use with additive displays. In this paper, we present a simplified model of luminance contrast for optical see-through displays derived from Michelson’s contrast equation and demonstrate two applications of the model: informing design decisions involving the color of virtual imagery and optimizing environment light attenuation through the use of neutral density filters.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

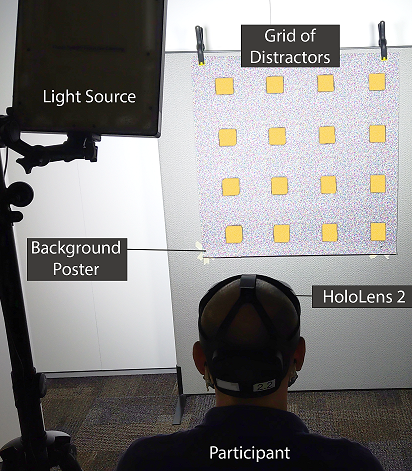

Due to the additive light model employed by current optical see-through head-mounted displays (OST-HMDs), the perceived contrast of displayed imagery is reduced with increased environment luminance, often to the point where it becomes difficult for the user to accurately distinguish the presence of visual imagery. While existing contrast models, such as Weber contrast and Michelson contrast, can be used to predict when the observer will experience difficulty distinguishing and interpreting stimuli on traditional displays, these models must be adapted for use with additive displays. In this paper, we present a simplified model of luminance contrast for optical see-through displays derived from Michelson’s contrast equation and demonstrate two applications of the model: informing design decisions involving the color of virtual imagery and optimizing environment light attenuation through the use of neutral density filters. |

![[POSTER] Exploring Cues and Signaling to Improve Cross-Reality Interruptions](https://sreal.ucf.edu/wp-content/uploads/2022/06/would_interrupt_red-300x300.png) | Matt Gottsacker; Raiffa Syamil; Pamela Wisniewski; Gerd Bruder; Carolina Cruz-Neira; Gregory F. Welch [POSTER] Exploring Cues and Signaling to Improve Cross-Reality Interruptions Proceedings Article In: Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 827-832, 2022. @inproceedings{nokey,

title = {[POSTER] Exploring Cues and Signaling to Improve Cross-Reality Interruptions},

author = {Matt Gottsacker and Raiffa Syamil and Pamela Wisniewski and Gerd Bruder and Carolina Cruz-Neira and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/09/ISMAR22_CrossReality_camready_3.pdf},

doi = {10.1109/ISMAR-Adjunct57072.2022.00179},

year = {2022},

date = {2022-10-15},

urldate = {2022-10-15},

booktitle = {Adjunct Proceedings of IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages = {827-832},

abstract = {In this paper, we report on initial work exploring the potential value of technology-mediated cues and signals to improve cross-reality interruptions. We investigated the use of color-coded visual cues (LED lights) to help a person decide when to interrupt a virtual reality (VR) user, and a gesture-based mechanism (waving at the user) to signal their desire to do so. To assess the potential value of these mechanisms we conducted a preliminary 2×3 within-subjects experimental design user study (N = 10) where the participants acted in the role of the interrupter. While we found that our visual cues improved participants’ experiences, our gesture-based signaling mechanism did not, as users did not trust it nor consider it as intuitive as a speech-based mechanism might be. Our preliminary findings motivate further investigation of interruption cues and signaling mechanisms to inform future VR head-worn display system designs.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

In this paper, we report on initial work exploring the potential value of technology-mediated cues and signals to improve cross-reality interruptions. We investigated the use of color-coded visual cues (LED lights) to help a person decide when to interrupt a virtual reality (VR) user, and a gesture-based mechanism (waving at the user) to signal their desire to do so. To assess the potential value of these mechanisms we conducted a preliminary 2×3 within-subjects experimental design user study (N = 10) where the participants acted in the role of the interrupter. While we found that our visual cues improved participants’ experiences, our gesture-based signaling mechanism did not, as users did not trust it nor consider it as intuitive as a speech-based mechanism might be. Our preliminary findings motivate further investigation of interruption cues and signaling mechanisms to inform future VR head-worn display system designs. |

| Meelad Doroodchi; Priscilla Ramos; Austin Erickson; Hiroshi Furuya; Juanita Benjamin; Gerd Bruder; Gregory F. Welch Effects of Optical See-Through Displays on Self-Avatar Appearance in Augmented Reality Proceedings Article In: Proceedings of the International Symposium on Mixed and Augmented Reality (ISMAR), IEEE 2022. @inproceedings{Ramos2022,

title = {Effects of Optical See-Through Displays on Self-Avatar Appearance in Augmented Reality},

author = {Meelad Doroodchi and Priscilla Ramos and Austin Erickson and Hiroshi Furuya and Juanita Benjamin and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/10/IDEATExR2022_REU_Paper.pdf},

year = {2022},

date = {2022-08-17},

urldate = {2022-08-31},

booktitle = {Proceedings of the International Symposium on Mixed and Augmented Reality (ISMAR)},

organization = {IEEE},

abstract = {Display technologies in the fields of virtual and augmented reality can affect the appearance of human representations, such as avatars used in telepresence or entertainment applications. In this paper, we describe a user study (N=20) where participants saw themselves in a mirror side-by-side with their own avatar, through use of a HoloLens 2 optical see-through head-mounted display. Participants were tasked to match their avatar’s appearance to their own under two environment lighting conditions (200 lux and 2,000 lux). Our results showed that the intensity of environment lighting had a significant effect on participants selected skin colors for their avatars, where participants with dark skin colors tended to make their avatar’s skin color lighter, nearly to the level of participants with light skin color. Further, in particular female participants made their avatar’s hair color darker for the lighter environment lighting condition. We discuss our results with a view on technological limitations and effects on the diversity of avatar representations on optical see-through displays.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Display technologies in the fields of virtual and augmented reality can affect the appearance of human representations, such as avatars used in telepresence or entertainment applications. In this paper, we describe a user study (N=20) where participants saw themselves in a mirror side-by-side with their own avatar, through use of a HoloLens 2 optical see-through head-mounted display. Participants were tasked to match their avatar’s appearance to their own under two environment lighting conditions (200 lux and 2,000 lux). Our results showed that the intensity of environment lighting had a significant effect on participants selected skin colors for their avatars, where participants with dark skin colors tended to make their avatar’s skin color lighter, nearly to the level of participants with light skin color. Further, in particular female participants made their avatar’s hair color darker for the lighter environment lighting condition. We discuss our results with a view on technological limitations and effects on the diversity of avatar representations on optical see-through displays. |

| Austin Erickson; Gerd Bruder; Gregory F. Welch Analysis of the Saliency of Color-Based Dichoptic Cues in Optical See-Through Augmented Reality Journal Article In: Transactions on Visualization and Computer Graphics, pp. 1-15, 2022. @article{Erickson2022b,

title = {Analysis of the Saliency of Color-Based Dichoptic Cues in Optical See-Through Augmented Reality},

author = {Austin Erickson and Gerd Bruder and Gregory F. Welch},

editor = {Klaus Mueller},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/07/ARPreattentiveCues-1.pdf},

doi = {10.1109/TVCG.2022.3195111},

year = {2022},

date = {2022-07-26},

urldate = {2022-07-26},

journal = {Transactions on Visualization and Computer Graphics},

pages = {1-15},

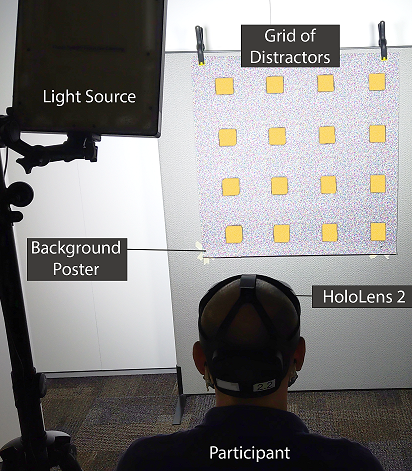

abstract = {In a future of pervasive augmented reality (AR), AR systems will need to be able to efficiently draw or guide the attention of the user to visual points of interest in their physical-virtual environment. Since AR imagery is overlaid on top of the user’s view of their physical environment, these attention guidance techniques must not only compete with other virtual imagery, but also with distracting or attention-grabbing features in the user’s physical environment. Because of the wide range of physical-virtual environments that pervasive AR users will find themselves in, it is difficult to design visual cues that “pop out” to the user without performing a visual analysis of the user’s environment, and changing the appearance of the cue to stand out from its surroundings.

In this paper, we present an initial investigation into the potential uses of dichoptic visual cues for optical see-through AR displays, specifically cues that involve having a difference in hue, saturation, or value between the user’s eyes. These types of cues have been shown to be preattentively processed by the user when presented on other stereoscopic displays, and may also be an effective method of drawing user attention on optical see-through AR displays. We present two user studies: one that evaluates the saliency of dichoptic visual cues on optical see-through displays, and one that evaluates their subjective qualities. Our results suggest that hue-based dichoptic cues or “Forbidden Colors” may be particularly effective for these purposes, achieving significantly lower error rates in a pop out task compared to value-based and saturation-based cues.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

In a future of pervasive augmented reality (AR), AR systems will need to be able to efficiently draw or guide the attention of the user to visual points of interest in their physical-virtual environment. Since AR imagery is overlaid on top of the user’s view of their physical environment, these attention guidance techniques must not only compete with other virtual imagery, but also with distracting or attention-grabbing features in the user’s physical environment. Because of the wide range of physical-virtual environments that pervasive AR users will find themselves in, it is difficult to design visual cues that “pop out” to the user without performing a visual analysis of the user’s environment, and changing the appearance of the cue to stand out from its surroundings.

In this paper, we present an initial investigation into the potential uses of dichoptic visual cues for optical see-through AR displays, specifically cues that involve having a difference in hue, saturation, or value between the user’s eyes. These types of cues have been shown to be preattentively processed by the user when presented on other stereoscopic displays, and may also be an effective method of drawing user attention on optical see-through AR displays. We present two user studies: one that evaluates the saliency of dichoptic visual cues on optical see-through displays, and one that evaluates their subjective qualities. Our results suggest that hue-based dichoptic cues or “Forbidden Colors” may be particularly effective for these purposes, achieving significantly lower error rates in a pop out task compared to value-based and saturation-based cues. |

| Pearly Chen; Mark Griswold; Hao Li; Sandra Lopez; Nahal Norouzi; Greg Welch Immersive Media Technologies: The Acceleration of Augmented and Virtual Reality in the Wake of COVID-19 Journal Article In: World Economic Forum, 2022. @article{Chen2022ky,

title = {Immersive Media Technologies: The Acceleration of Augmented and Virtual Reality in the Wake of COVID-19},

author = {Pearly Chen and Mark Griswold and Hao Li and Sandra Lopez and Nahal Norouzi and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/03/WEF_Immersive_Media_Technologies_2022.pdf

https://www.weforum.org/reports/immersive-media-technologies-the-acceleration-of-augmented-and-virtual-reality-in-the-wake-of-covid-19},

year = {2022},

date = {2022-06-20},

urldate = {2022-06-20},

journal = {World Economic Forum},

abstract = {The COVID-19 pandemic disrupted whole economies. Immersive media businesses, which focus on technologies that create or imitate the physical world through digital simulation, have been no exception. The Global Future Council on Augmented Reality and Virtual Reality, which is comprised of interdisciplinary thought leaders in immersive technology and media, has examined the transformative impact of the pandemic and the speed of adoption of these technologies across industries.},

howpublished = {url{https://www.weforum.org/reports/immersive-media-technologies-the-acceleration-of-augmented-and-virtual-reality-in-the-wake-of-covid-19}},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

The COVID-19 pandemic disrupted whole economies. Immersive media businesses, which focus on technologies that create or imitate the physical world through digital simulation, have been no exception. The Global Future Council on Augmented Reality and Virtual Reality, which is comprised of interdisciplinary thought leaders in immersive technology and media, has examined the transformative impact of the pandemic and the speed of adoption of these technologies across industries. |

| Austin Erickson; Gerd Bruder; Gregory Welch; Isaac Bynum; Tabitha Peck; Jessica Good Perceived Humanness Bias in Additive Light Model Displays (Poster) Journal Article In: Journal of Vision, iss. Journal of Vision, 2022. @article{Erickson2022,

title = {Perceived Humanness Bias in Additive Light Model Displays (Poster)},

author = {Austin Erickson and Gerd Bruder and Gregory Welch and Isaac Bynum and Tabitha Peck and Jessica Good},

url = {https://www.visionsciences.org/presentation/?id=4201

},

year = {2022},

date = {2022-05-17},

urldate = {2022-05-17},

journal = {Journal of Vision},

issue = {Journal of Vision},

publisher = {Association for Research in Vision and Ophthalmology (ARVO)},

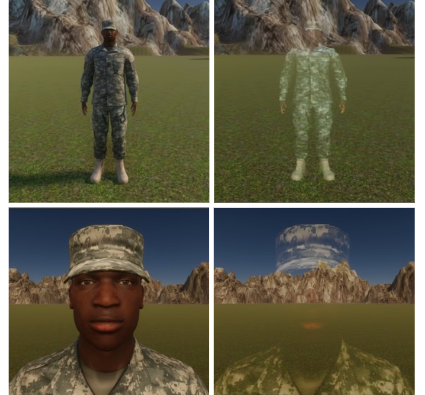

abstract = {Additive light model displays, such as optical see-through augmented reality displays, create imagery by adding light over a physical scene. While these types of displays are commonly used, they are limited in their ability to display dark, low-luminance colors. As a result of this, these displays cannot render the color black and other similar colors, and instead the resulting color is rendered as completely transparent. This optical limitation introduces perceptual problems, as virtual imagery with dark colors appears semi-transparent, while lighter colored imagery is more opaque. We generated an image set of virtual humans that captures the peculiarities of imagery shown on an additive display by performing a perceptual matching task between imagery shown on a Microsoft HoloLens and imagery shown on a flat panel display. We then used this image set to run an online user study to explore whether this optical limitation introduces bias in user perception of virtual humans of different skin colors. We evaluated virtual avatars and virtual humans at different opacity levels ranging from how they currently appear on the Microsoft HoloLens, to how they would appear on a display without transparency and color blending issues. Our results indicate that, regardless of skin tone, the perceived humanness of the virtual humans and avatars decreases with respect to opacity level. As a result of this, virtual humans with darker skin tones are perceived as less human compared to those with lighter skin tones. This result suggests that there may be an unintentional racial bias when using applications involving telepresence or virtual humans on additive light model displays. While optical and hardware solutions to this problem are likely years away, we emphasize that future work should investigate how some of these perceptual issues may be overcome via software-based methods.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Additive light model displays, such as optical see-through augmented reality displays, create imagery by adding light over a physical scene. While these types of displays are commonly used, they are limited in their ability to display dark, low-luminance colors. As a result of this, these displays cannot render the color black and other similar colors, and instead the resulting color is rendered as completely transparent. This optical limitation introduces perceptual problems, as virtual imagery with dark colors appears semi-transparent, while lighter colored imagery is more opaque. We generated an image set of virtual humans that captures the peculiarities of imagery shown on an additive display by performing a perceptual matching task between imagery shown on a Microsoft HoloLens and imagery shown on a flat panel display. We then used this image set to run an online user study to explore whether this optical limitation introduces bias in user perception of virtual humans of different skin colors. We evaluated virtual avatars and virtual humans at different opacity levels ranging from how they currently appear on the Microsoft HoloLens, to how they would appear on a display without transparency and color blending issues. Our results indicate that, regardless of skin tone, the perceived humanness of the virtual humans and avatars decreases with respect to opacity level. As a result of this, virtual humans with darker skin tones are perceived as less human compared to those with lighter skin tones. This result suggests that there may be an unintentional racial bias when using applications involving telepresence or virtual humans on additive light model displays. While optical and hardware solutions to this problem are likely years away, we emphasize that future work should investigate how some of these perceptual issues may be overcome via software-based methods. |

| Frank Guido-Sanz; Mindi Anderson; Steven Talbert; Desiree A. Diaz; Gregory Welch; Alyssa Tanaka Using Simulation to Test Validity and Reliability of I-BIDS: A New Handoff Tool Journal Article In: Simulation & Gaming, vol. 53, no. 4, pp. 353-368, 2022. @article{Guido-Sanz2022ch,

title = {Using Simulation to Test Validity and Reliability of I-BIDS: A New Handoff Tool},

author = {Frank Guido-Sanz and Mindi Anderson and Steven Talbert and Desiree A. Diaz and Gregory Welch and Alyssa Tanaka},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/07/Guido-Sanz2022ch.pdf},

year = {2022},

date = {2022-05-16},

urldate = {2022-05-16},

journal = {Simulation & Gaming},

volume = {53},

number = {4},

pages = {353-368},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Nahal Norouzi; Kangsoo Kim; Gerd Bruder; Jeremy Bailenson; Pamela J. Wisniewski; Greg Welch The Advantages of Virtual Dogs Over Virtual People: Using Augmented Reality to Provide Social Support in Stressful Situations Journal Article In: International Journal of Human Computer Studies, 2022. @article{Norouzi2022b,

title = {The Advantages of Virtual Dogs Over Virtual People: Using Augmented Reality to Provide Social Support in Stressful Situations},

author = {Nahal Norouzi and Kangsoo Kim and Gerd Bruder and Jeremy Bailenson and Pamela J. Wisniewski and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/05/1-s2.0-S1071581922000659-main.pdf},

year = {2022},

date = {2022-05-01},

urldate = {2022-05-01},

journal = {International Journal of Human Computer Studies},

abstract = {Past research highlights the potential for leveraging both humans and animals as social support figures in one’s real life to enhance performance and reduce physiological and psychological stress. Some studies have shown that typically dogs are more effective than people. Various situational and interpersonal circumstances limit the opportunities for receiving support from actual animals in the real world introducing the need for alternative approaches. To that end, advances in augmented reality (AR) technology introduce new opportunities for realizing and investigating virtual dogs as social support figures. In this paper, we report on a within-subjects 3x1 (i.e., no support, virtual human, or virtual dog) experimental design study with 33 participants. We examined the effect on performance, attitude towards the task and the support figure, and stress and anxiety measured through both subjective questionnaires and heart rate data. Our mixed-methods analysis revealed that participants significantly preferred, and more positively evaluated, the virtual dog support figure than the other conditions. Emerged themes from a qualitative analysis of our participants’ post-study interview responses are aligned with these findings as some of our participants mentioned feeling more comfortable with the virtual dog compared to the virtual human although the virtual human was deemed more interactive. We did not find significant differences between our conditions in terms of change in average heart rate; however, average heart rate significantly increased during all conditions. Our research contributes to understanding how AR virtual support dogs can potentially be used to provide social support to people in stressful situations, especially when real support figures cannot be present. We discuss the implications of our findings and share insights for future research.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Past research highlights the potential for leveraging both humans and animals as social support figures in one’s real life to enhance performance and reduce physiological and psychological stress. Some studies have shown that typically dogs are more effective than people. Various situational and interpersonal circumstances limit the opportunities for receiving support from actual animals in the real world introducing the need for alternative approaches. To that end, advances in augmented reality (AR) technology introduce new opportunities for realizing and investigating virtual dogs as social support figures. In this paper, we report on a within-subjects 3x1 (i.e., no support, virtual human, or virtual dog) experimental design study with 33 participants. We examined the effect on performance, attitude towards the task and the support figure, and stress and anxiety measured through both subjective questionnaires and heart rate data. Our mixed-methods analysis revealed that participants significantly preferred, and more positively evaluated, the virtual dog support figure than the other conditions. Emerged themes from a qualitative analysis of our participants’ post-study interview responses are aligned with these findings as some of our participants mentioned feeling more comfortable with the virtual dog compared to the virtual human although the virtual human was deemed more interactive. We did not find significant differences between our conditions in terms of change in average heart rate; however, average heart rate significantly increased during all conditions. Our research contributes to understanding how AR virtual support dogs can potentially be used to provide social support to people in stressful situations, especially when real support figures cannot be present. We discuss the implications of our findings and share insights for future research. |

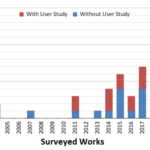

| Yifan Li, Kangsoo Kim, Austin Erickson, Nahal Norouzi, Jonathan Jules, Gerd Bruder, Greg Welch A Scoping Review of Assistance and Therapy with Head-Mounted Displays for People Who Are Visually Impaired Journal Article In: ACM Transactions on Accessible Computing, vol. 00, iss. 00, no. 00, pp. 25, 2022, ISSN: 1936-7228. @article{Li2022,

title = {A Scoping Review of Assistance and Therapy with Head-Mounted Displays for People Who Are Visually Impaired},

author = {Yifan Li, Kangsoo Kim, Austin Erickson, Nahal Norouzi, Jonathan Jules, Gerd Bruder, Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/04/scoping.pdf},

doi = {10.1145/3522693},

issn = {1936-7228},

year = {2022},

date = {2022-04-21},

urldate = {2022-04-21},

journal = {ACM Transactions on Accessible Computing},

volume = {00},

number = {00},

issue = {00},

pages = {25},

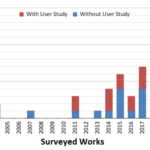

abstract = {Given the inherent visual affordances of Head-Mounted Displays (HMDs) used for Virtual and Augmented Reality (VR/AR), they have been actively used over many years as assistive and therapeutic devices for the people who are visually impaired. In this paper, we report on a scoping review of literature describing the use of HMDs in these areas. Our high-level objectives included detailed reviews and quantitative analyses of the literature, and the development of insights related to emerging trends and future research directions.

Our review began with a pool of 1251 papers collected through a variety of mechanisms. Through a structured screening process, we identified 61 English research papers employing HMDs to enhance the visual sense of people with visual impairments for more detailed analyses. Our analyses reveal that there is an increasing amount of HMD-based research on visual assistance and therapy, and there are trends in the approaches associated with the research objectives. For example, AR is most often used for visual assistive purposes, whereas VR is used for therapeutic purposes. We report on eight existing survey papers, and present detailed analyses of the 61 research papers, looking at the mitigation objectives of the researchers (assistive versus therapeutic), the approaches used, the types of HMDs, the targeted visual conditions, and the inclusion of user studies. In addition to our detailed reviews and analyses of the various characteristics, we present observations related to apparent emerging trends and future research directions.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Given the inherent visual affordances of Head-Mounted Displays (HMDs) used for Virtual and Augmented Reality (VR/AR), they have been actively used over many years as assistive and therapeutic devices for the people who are visually impaired. In this paper, we report on a scoping review of literature describing the use of HMDs in these areas. Our high-level objectives included detailed reviews and quantitative analyses of the literature, and the development of insights related to emerging trends and future research directions.

Our review began with a pool of 1251 papers collected through a variety of mechanisms. Through a structured screening process, we identified 61 English research papers employing HMDs to enhance the visual sense of people with visual impairments for more detailed analyses. Our analyses reveal that there is an increasing amount of HMD-based research on visual assistance and therapy, and there are trends in the approaches associated with the research objectives. For example, AR is most often used for visual assistive purposes, whereas VR is used for therapeutic purposes. We report on eight existing survey papers, and present detailed analyses of the 61 research papers, looking at the mitigation objectives of the researchers (assistive versus therapeutic), the approaches used, the types of HMDs, the targeted visual conditions, and the inclusion of user studies. In addition to our detailed reviews and analyses of the various characteristics, we present observations related to apparent emerging trends and future research directions. |

![[DC] Balancing Realities by Improving Cross-Reality Interactions](https://sreal.ucf.edu/wp-content/uploads/2022/06/would_interrupt_red-300x300.png) | Matt Gottsacker [DC] Balancing Realities by Improving Cross-Reality Interactions Proceedings Article In: 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 944-945, Christchurch, New Zealand, 2022. @inproceedings{gottsacker2022balancing,

title = {[DC] Balancing Realities by Improving Cross-Reality Interactions},

author = {Matt Gottsacker},

year = {2022},

date = {2022-04-20},

urldate = {2022-04-20},

booktitle = {2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages = {944-945},

address = {Christchurch, New Zealand},

abstract = {Virtual reality (VR) devices have a demonstrated capability to make users feel present in a virtual world. Research has shown that, at times, users desire a less immersive system that provides them aware-ness of and the ability to interact with elements from the real world and with a variety of devices. Understanding such cross-reality interactions is an under-explored research area that will become increasingly important as immersive devices become more ubiquitous. In this extended abstract, I provide an overview of my previous PhD research on facilitating cross-reality interactions between VR users and nearby non-VR interrupters. I discuss planned future research to investigate the social norms that are complicated by these interactions and design solutions that lead to meaningful interactions. These topics and questions will be discussed at the IEEE VR 2022 Doctoral Consortium.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Virtual reality (VR) devices have a demonstrated capability to make users feel present in a virtual world. Research has shown that, at times, users desire a less immersive system that provides them aware-ness of and the ability to interact with elements from the real world and with a variety of devices. Understanding such cross-reality interactions is an under-explored research area that will become increasingly important as immersive devices become more ubiquitous. In this extended abstract, I provide an overview of my previous PhD research on facilitating cross-reality interactions between VR users and nearby non-VR interrupters. I discuss planned future research to investigate the social norms that are complicated by these interactions and design solutions that lead to meaningful interactions. These topics and questions will be discussed at the IEEE VR 2022 Doctoral Consortium. |

| Jesus Ugarte; Nahal Norouzi; Austin Erickson; Gerd Bruder; Greg Welch

Distant Hand Interaction Framework in Augmented Reality Proceedings Article In: Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 962-963, IEEE IEEE, Christchurch, New Zealand, 2022. @inproceedings{Ugarte2022,

title = {Distant Hand Interaction Framework in Augmented Reality},

author = {Jesus Ugarte and Nahal Norouzi and Austin Erickson and Gerd Bruder and Greg Welch

},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/05/Distant_Hand_Interaction_Framework_in_Augmented_Reality.pdf},

doi = {10.1109/VRW55335.2022.00332},

year = {2022},

date = {2022-03-16},

urldate = {2022-03-16},

booktitle = {Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages = {962-963},

publisher = {IEEE},

address = {Christchurch, New Zealand},

organization = {IEEE},

series = {2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

abstract = {Recent augmented reality (AR) head-mounted displays support shared experiences among multiple users in real physical spaces. While previous research looked at different embodied methods to enhance interpersonal communication cues, so far, less research looked at distant interaction in AR and, in particular, distant hand communication, which can open up new possibilities for scenarios, such as large-group collaboration. In this demonstration, we present a research framework for distant hand interaction in AR, including mapping techniques and visualizations. Our techniques are inspired by virtual reality (VR) distant hand interactions, but had to be adjusted due to the different context in AR and limited knowledge about the physical environment. We discuss different techniques for hand communication, including deictic pointing at a distance, distant drawing in AR, and distant communication through symbolic hand gestures.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Recent augmented reality (AR) head-mounted displays support shared experiences among multiple users in real physical spaces. While previous research looked at different embodied methods to enhance interpersonal communication cues, so far, less research looked at distant interaction in AR and, in particular, distant hand communication, which can open up new possibilities for scenarios, such as large-group collaboration. In this demonstration, we present a research framework for distant hand interaction in AR, including mapping techniques and visualizations. Our techniques are inspired by virtual reality (VR) distant hand interactions, but had to be adjusted due to the different context in AR and limited knowledge about the physical environment. We discuss different techniques for hand communication, including deictic pointing at a distance, distant drawing in AR, and distant communication through symbolic hand gestures. |

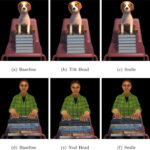

| Nahal Norouzi; Matthew Gottsacker; Gerd Bruder; Pamela Wisniewski; Jeremy Bailenson; Greg Welch Virtual Humans with Pets and Robots: Exploring the Influence of Social Priming on One’s Perception of a Virtual Human Proceedings Article In: Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), Christchurch, New Zealand, 2022., pp. 10, IEEE, 2022. @inproceedings{Norouzi2022,

title = {Virtual Humans with Pets and Robots: Exploring the Influence of Social Priming on One’s Perception of a Virtual Human},

author = {Nahal Norouzi and Matthew Gottsacker and Gerd Bruder and Pamela Wisniewski and Jeremy Bailenson and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/01/2022007720.pdf},

year = {2022},

date = {2022-03-16},

urldate = {2022-03-16},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), Christchurch, New Zealand, 2022.},

pages = {10},

publisher = {IEEE},

abstract = {Social priming is the idea that observations of a virtual human (VH)engaged in short social interactions with a real or virtual human bystander can positively influence users’ subsequent interactions with that VH. In this paper we investigate the question of whether the positive effects of social priming are limited to interactions with humanoid entities. For instance, virtual dogs offer an attractive candidate for non-humanoid entities, as previous research suggests multiple positive effects. In particular, real human dog owners receive more positive attention from strangers than non-dog owners. To examine the influence of such social priming we carried out a human-subjects experiment with four conditions: three social priming conditions where a participant initially observed a VH interacting with one of three virtual entities (another VH, a virtual pet dog, or a virtual personal robot), and a non-social priming condition where a VH (alone) was intently looking at her phone as if reading something. We recruited 24 participants and conducted a mixed-methods analysis. We found that a VH’s prior social interactions with another VH and a virtual dog significantly increased participants’ perceptions of the VHs’ affective attraction. Also, participants felt more inclined to interact with the VH in the future in all of the social priming conditions. Qualitatively, we found that the social priming conditions resulted in a more positive user experience than the non-social priming condition. Also, the virtual dog and the virtual robot were perceived as a source of positive surprise, with participants appreciating the non-humanoid interactions for various reasons, such as the avoidance of social anxieties sometimes associated with humans.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Social priming is the idea that observations of a virtual human (VH)engaged in short social interactions with a real or virtual human bystander can positively influence users’ subsequent interactions with that VH. In this paper we investigate the question of whether the positive effects of social priming are limited to interactions with humanoid entities. For instance, virtual dogs offer an attractive candidate for non-humanoid entities, as previous research suggests multiple positive effects. In particular, real human dog owners receive more positive attention from strangers than non-dog owners. To examine the influence of such social priming we carried out a human-subjects experiment with four conditions: three social priming conditions where a participant initially observed a VH interacting with one of three virtual entities (another VH, a virtual pet dog, or a virtual personal robot), and a non-social priming condition where a VH (alone) was intently looking at her phone as if reading something. We recruited 24 participants and conducted a mixed-methods analysis. We found that a VH’s prior social interactions with another VH and a virtual dog significantly increased participants’ perceptions of the VHs’ affective attraction. Also, participants felt more inclined to interact with the VH in the future in all of the social priming conditions. Qualitatively, we found that the social priming conditions resulted in a more positive user experience than the non-social priming condition. Also, the virtual dog and the virtual robot were perceived as a source of positive surprise, with participants appreciating the non-humanoid interactions for various reasons, such as the avoidance of social anxieties sometimes associated with humans. |

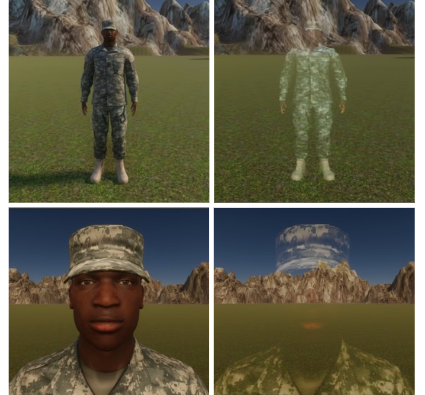

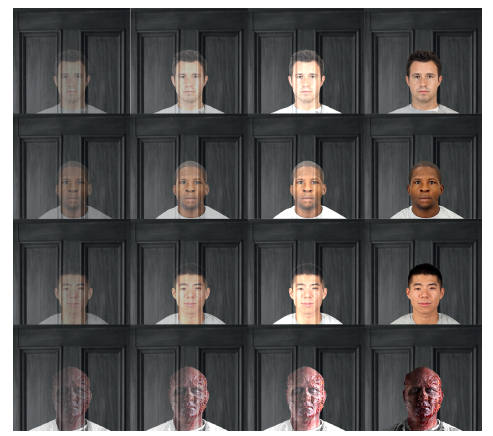

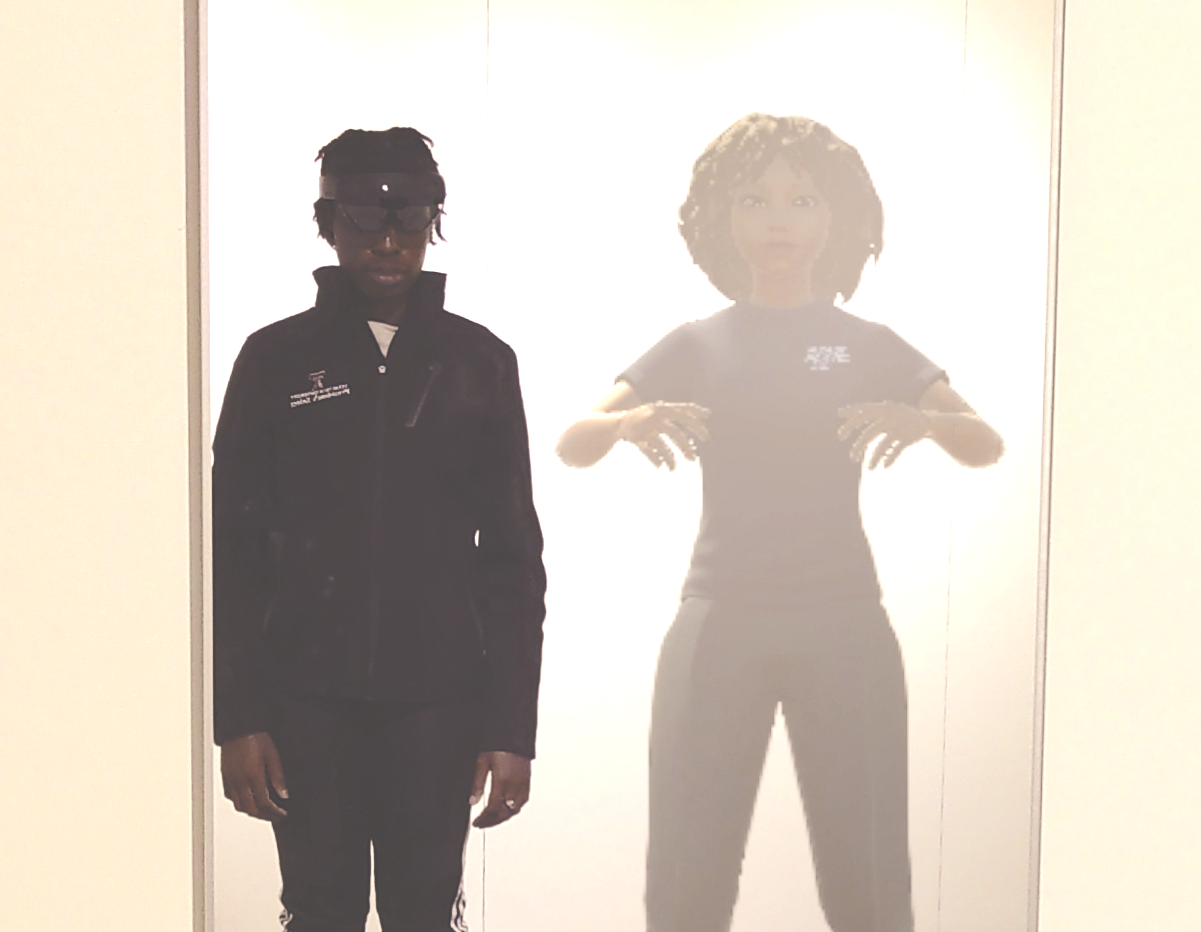

| Tabitha C. Peck; Jessica J. Good; Austin Erickson; Isaac Bynum; Gerd Bruder Effects of Transparency on Perceived Humanness: Implications for Rendering Skin Tones Using Optical See-Through Displays Journal Article In: IEEE Transactions on Visualization and Computer Graphics (TVCG), no. 01, pp. 1-11, 2022, ISSN: 1941-0506. @article{nokey,

title = {Effects of Transparency on Perceived Humanness: Implications for Rendering Skin Tones Using Optical See-Through Displays},

author = {Tabitha C. Peck; Jessica J. Good; Austin Erickson; Isaac Bynum; Gerd Bruder},

url = {https://sreal.ucf.edu/wp-content/uploads/2022/02/AR_and_Avatar_Transparency.pdf

https://www.youtube.com/watch?v=0tUlhbxhE6U&t=59s},

doi = {10.1109/TVCG.2022.3150521},

issn = {1941-0506},

year = {2022},

date = {2022-03-15},

urldate = {2022-03-15},

journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG)},

number = {01},

pages = {1-11},

abstract = {Current optical see-through displays in the field of augmented reality are limited in their ability to display colors with low lightness in the hue, saturation, lightness (HSL) color space, causing such colors to appear transparent. This hardware limitation may add unintended bias into scenarios with virtual humans. Humans have varying skin tones including HSL colors with low lightness. When virtual humans are displayed with optical see-through devices, people with low lightness skin tones may be displayed semi-transparently while those with high lightness skin tones will be displayed more opaquely. For example, a Black avatar may appear semi-transparent in the same scene as a White avatar who will appear more opaque. We present an exploratory user study (N = 160) investigating whether differing opacity levels result in dehumanizing avatar and human faces. Results support that dehumanization occurs as opacity decreases. This suggests that in similar lighting, low lightness skin tones (e.g., Black faces) will be viewed as less human than high lightness skin tones (e.g., White faces). Additionally, the perceived emotionality of virtual human faces also predicts perceived humanness. Angry faces were seen overall as less human, and at lower opacity levels happy faces were seen as more human. Our results suggest that additional research is needed to understand the effects and interactions of emotionality and opacity on dehumanization. Further, we provide evidence that unintentional racial bias may be added when developing for optical see-through devices using virtual humans. We highlight the potential bias and discuss implications and directions for future research.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Current optical see-through displays in the field of augmented reality are limited in their ability to display colors with low lightness in the hue, saturation, lightness (HSL) color space, causing such colors to appear transparent. This hardware limitation may add unintended bias into scenarios with virtual humans. Humans have varying skin tones including HSL colors with low lightness. When virtual humans are displayed with optical see-through devices, people with low lightness skin tones may be displayed semi-transparently while those with high lightness skin tones will be displayed more opaquely. For example, a Black avatar may appear semi-transparent in the same scene as a White avatar who will appear more opaque. We present an exploratory user study (N = 160) investigating whether differing opacity levels result in dehumanizing avatar and human faces. Results support that dehumanization occurs as opacity decreases. This suggests that in similar lighting, low lightness skin tones (e.g., Black faces) will be viewed as less human than high lightness skin tones (e.g., White faces). Additionally, the perceived emotionality of virtual human faces also predicts perceived humanness. Angry faces were seen overall as less human, and at lower opacity levels happy faces were seen as more human. Our results suggest that additional research is needed to understand the effects and interactions of emotionality and opacity on dehumanization. Further, we provide evidence that unintentional racial bias may be added when developing for optical see-through devices using virtual humans. We highlight the potential bias and discuss implications and directions for future research. |

![[Poster] Adapting Michelson Contrast for use with Optical See-Through Displays](https://sreal.ucf.edu/wp-content/uploads/2022/10/phonefigure2-e1664926618216-150x150.png)

![[POSTER] Exploring Cues and Signaling to Improve Cross-Reality Interruptions](https://sreal.ucf.edu/wp-content/uploads/2022/06/would_interrupt_red-300x300.png)

![[DC] Balancing Realities by Improving Cross-Reality Interactions](https://sreal.ucf.edu/wp-content/uploads/2022/06/would_interrupt_red-300x300.png)