2020

|

| Laura Gonzalez; Salam Daher; Greg Welch Neurological Assessment Using a Physical-Virtual Patient (PVP) Journal Article In: Simulation & Gaming, pp. 1–17, 2020. @article{Gonzalez2020aa,

title = {Neurological Assessment Using a Physical-Virtual Patient (PVP)},

author = {Laura Gonzalez and Salam Daher and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/08/Gonzalez2020aa.pdf},

year = {2020},

date = {2020-08-12},

journal = {Simulation & Gaming},

pages = {1--17},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

| Salam Daher; Jason Hochreiter; Ryan Schubert; Laura Gonzalez; Juan Cendan; Mindi Anderson; Desiree A Diaz; Gregory F. Welch The Physical-Virtual Patient Simulator: A Physical Human Form with Virtual Appearance and Behavior Journal Article In: Simulation in Healthcare, vol. 15, no. 2, pp. 115–121, 2020, (see erratum at DOI: 10.1097/SIH.0000000000000481). @article{Daher2020aa,

title = {The Physical-Virtual Patient Simulator: A Physical Human Form with Virtual Appearance and Behavior},

author = {Salam Daher and Jason Hochreiter and Ryan Schubert and Laura Gonzalez and Juan Cendan and Mindi Anderson and Desiree A Diaz and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2020/06/Daher2020aa1.pdf

https://journals.lww.com/simulationinhealthcare/Fulltext/2020/04000/The_Physical_Virtual_Patient_Simulator__A_Physical.9.aspx

https://journals.lww.com/simulationinhealthcare/Fulltext/2020/06000/Erratum_to_the_Physical_Virtual_Patient_Simulator_.12.aspx},

doi = {10.1097/SIH.0000000000000409},

year = {2020},

date = {2020-04-01},

journal = {Simulation in Healthcare},

volume = {15},

number = {2},

pages = {115--121},

note = {see erratum at DOI: 10.1097/SIH.0000000000000481},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

2019

|

| Kendra Richards; Nikhil Mahalanobis; Kangsoo Kim; Ryan Schubert; Myungho Lee; Salam Daher; Nahal Norouzi; Jason Hochreiter; Gerd Bruder; Gregory F. Welch Analysis of Peripheral Vision and Vibrotactile Feedback During Proximal Search Tasks with Dynamic Virtual Entities in Augmented Reality Proceedings Article In: Proceedings of the ACM Symposium on Spatial User Interaction (SUI), pp. 3:1-3:9, ACM, 2019, ISBN: 978-1-4503-6975-6/19/10. @inproceedings{Richards2019b,

title = {Analysis of Peripheral Vision and Vibrotactile Feedback During Proximal Search Tasks with Dynamic Virtual Entities in Augmented Reality},

author = {Kendra Richards and Nikhil Mahalanobis and Kangsoo Kim and Ryan Schubert and Myungho Lee and Salam Daher and Nahal Norouzi and Jason Hochreiter and Gerd Bruder and Gregory F. Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/10/Richards2019b.pdf},

doi = {10.1145/3357251.3357585},

isbn = {978-1-4503-6975-6/19/10},

year = {2019},

date = {2019-10-19},

booktitle = {Proceedings of the ACM Symposium on Spatial User Interaction (SUI)},

pages = {3:1-3:9},

publisher = {ACM},

abstract = {A primary goal of augmented reality (AR) is to seamlessly embed virtual content into a real environment. There are many factors that can affect the perceived physicality and co-presence of virtual entities, including the hardware capabilities, the fidelity of the virtual behaviors, and sensory feedback associated with the interactions. In this paper, we present a study investigating participants' perceptions and behaviors during a time-limited search task in close proximity with virtual entities in AR. In particular, we analyze the effects of (i) visual conflicts in the periphery of an optical see-through head-mounted display, a Microsoft HoloLens, (ii) overall lighting in the physical environment, and (iii) multimodal feedback based on vibrotactile transducers mounted on a physical platform. Our results show significant benefits of vibrotactile feedback and reduced peripheral lighting for spatial and social presence, and engagement. We discuss implications of these effects for AR applications.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

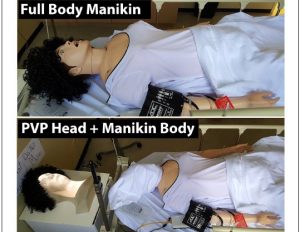

| Laura Gonzalez; Salam Daher; Gregory Welch Vera Real : Stroke Assessment Using a Physical Virtual Patient (PVP) Conference INACSL 2019. @conference{gonzalez_2019_vera,

title = {Vera Real : Stroke Assessment Using a Physical Virtual Patient (PVP)},

author = {Laura Gonzalez and Salam Daher and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/07/INACSL-_Conference_VERA.pdf},

year = {2019},

date = {2019-06-21},

organization = {INACSL},

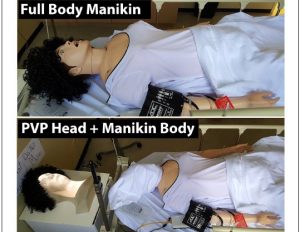

abstract = {Introduction: Simulation has revolutionized the way we teach and learn, and the pedagogy of simulation continues to mature. Mannequins have limitations such as their inability to exhibit emotions, idle movements, or interactive patient gaze. As a result, students struggle with suspension of disbelief and may be unable to relate to the “patient” authentically. Physical virtual patients (PVP) are a new type of simulator that combines the physicality of mannequins with the richness of dynamic imagery such as blinks, smiles, and other facial expressions. The purpose of this study was to compare a traditional mannequin vs. a more realistic PVP head. The concept under consideration is realism and its influence on engagement and learning. Methods: The study used a pre-test, post-test, randomized between-subjects design (N=59) with undergraduate nursing students. Students assessed an evolving stroke patient and completed post-simulation questions to evaluate engagement and sense of urgency. Pre-simulation and post-simulation knowledge tests were administered to evaluate learning. Results: Participants were more engaged with the PVP condition, which provoked a higher sense of urgency. There was a significant change between the pre-simulation test and the post-simulation test, which supported increased learning for the PVP when compared to the mannequin. Discussion: This study demonstrated that increasing realism could increase engagement, which may result in a greater sense of urgency and learning. This PVP technology is a viable addition to mannequin-based simulation. Future work includes extending this technology to a full-body PVP.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

| Michelle Aebersold; Salam Daher; Cynthia Foronda; Jone Tiffany; Margaret Verkuyl Virtual/ Augmented Reality for Health Professions Education Symposium Conference INACSL 2019. @conference{inacsl_2019_arvr,

title = {Virtual/ Augmented Reality for Health Professions Education Symposium},

author = {Michelle Aebersold and Salam Daher and Cynthia Foronda and Jone Tiffany and Margaret Verkuyl},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/08/INACSL-ConferenceAR-VRSymposium.pdf},

year = {2019},

date = {2019-06-19},

organization = {INACSL},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

| Salam Daher; Veenadhari Kollipara NCWIT Panel Presentation 05.05.2019. @misc{daher_2019_ncwit,

title = {NCWIT Panel},

author = {Salam Daher and Veenadhari Kollipara},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/08/ncwit2019.png},

year = {2019},

date = {2019-05-05},

keywords = {},

pubstate = {published},

tppubtype = {presentation}

}

|

![[POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents](https://sreal.ucf.edu/wp-content/uploads/2019/03/ieeevr_poster_thumbnail-1.png) | Salam Daher; Jason Hochreiter; Nahal Norouzi; Ryan Schubert; Gerd Bruder; Laura Gonzalez; Mindi Anderson; Desiree Diaz; Juan Cendan; Greg Welch [POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents Proceedings Article In: Proceedings of IEEE Virtual Reality (VR), 2019, 2019. @inproceedings{daher2019matching,

title = {[POSTER] Matching vs. Non-Matching Visuals and Shape for Embodied Virtual Healthcare Agents},

author = {Salam Daher and Jason Hochreiter and Nahal Norouzi and Ryan Schubert and Gerd Bruder and Laura Gonzalez and Mindi Anderson and Desiree Diaz and Juan Cendan and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/03/IEEEVR2019_Poster_PVChildStudy.pdf},

year = {2019},

date = {2019-03-27},

publisher = {Proceedings of IEEE Virtual Reality (VR), 2019},

abstract = {Embodied virtual agents serving as patient simulators are widely used in medical training scenarios, ranging from physical patients to virtual patients presented via virtual and augmented reality technologies. Physical-virtual patients are a hybrid solution that combines the benefits of dynamic visuals integrated into a human-shaped physical form that can also present other cues, such as pulse, breathing sounds, and temperature. Sometimes in simulation the visuals and shape do not match. We carried out a human-participant study employing graduate nursing students in pediatric patient simulations comprising conditions associated with matching/non-matching of the visuals and shape.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

| Salam Daher Patient Simulators: the Past, Present, and Future Presentation 21.01.2019. @misc{daher_2019_otronicon,

title = {Patient Simulators: the Past, Present, and Future},

author = {Salam Daher},

url = {https://sreal.ucf.edu/wp-content/uploads/2019/08/otronicon_2019.pdf},

year = {2019},

date = {2019-01-21},

keywords = {},

pubstate = {published},

tppubtype = {presentation}

}

|

2018

|

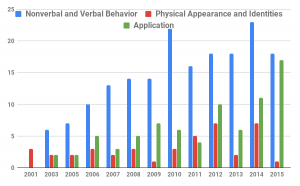

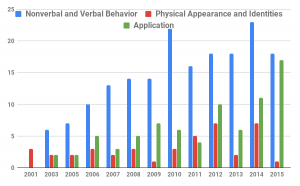

| Nahal Norouzi; Kangsoo Kim; Jason Hochreiter; Myungho Lee; Salam Daher; Gerd Bruder; Gregory Welch A Systematic Survey of 15 Years of User Studies Published in the Intelligent Virtual Agents Conference Proceedings Article In: IVA '18 Proceedings of the 18th International Conference on Intelligent Virtual Agents, pp. 17-22, ACM, 2018, ISBN: 978-1-4503-6013-5/18/11. @inproceedings{Norouzi2018c,

title = {A Systematic Survey of 15 Years of User Studies Published in the Intelligent Virtual Agents Conference},

author = {Nahal Norouzi and Kangsoo Kim and Jason Hochreiter and Myungho Lee and Salam Daher and Gerd Bruder and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2018/11/p17-norouzi-2.pdf},

doi = {10.1145/3267851.3267901},

isbn = {978-1-4503-6013-5/18/11},

year = {2018},

date = {2018-11-05},

booktitle = {IVA '18 Proceedings of the 18th International Conference on Intelligent Virtual Agents},

pages = {17-22},

publisher = {ACM},

organization = {ACM},

abstract = {The field of intelligent virtual agents (IVAs) has evolved immensely over the past 15 years, introducing new application opportunities in areas such as training, health care, and virtual assistants. In this survey paper, we provide a systematic review of the most influential user studies published in the IVA conference from 2001 to 2015 focusing on IVA development, human perception, and interactions. A total of 247 papers with 276 user studies have been classified and reviewed based on their contributions and impact. We identify the different areas of research and provide a summary of the papers with the highest impact. With the trends of past user studies and the current state of technology, we provide insights into future trends and research challenges.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

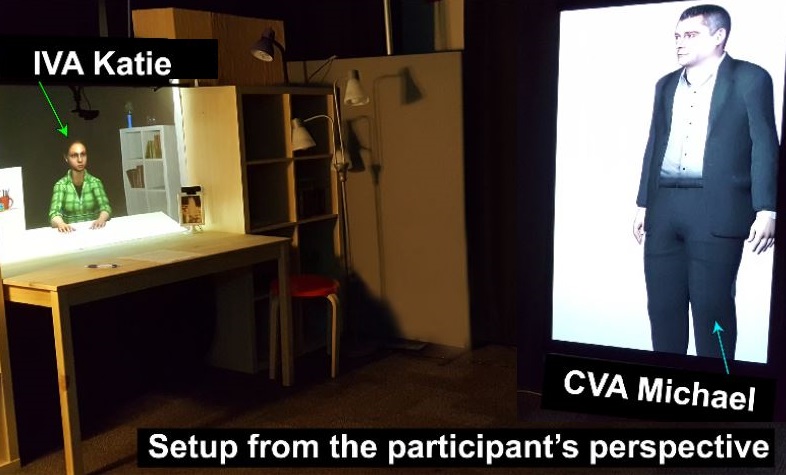

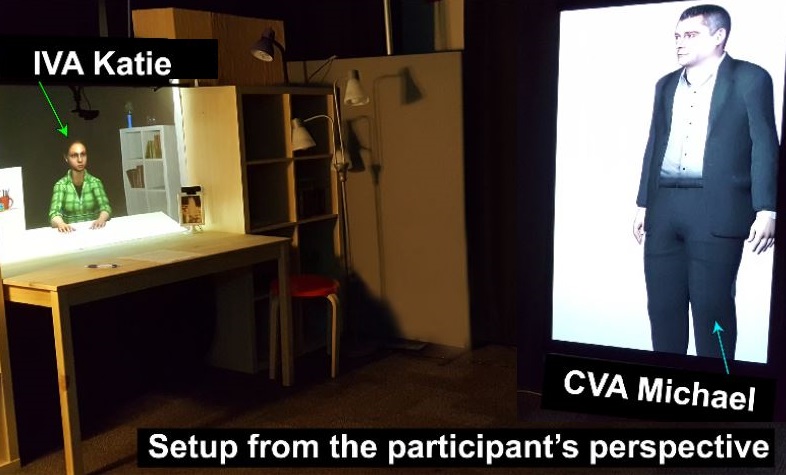

| Salam Daher; Jason Hochreiter; Nahal Norouzi; Laura Gonzalez; Gerd Bruder; Greg Welch Physical-Virtual Agents for Healthcare Simulation Proceedings Article In: Proceedings of IVA 2018, November 5-8, 2018, Sydney, NSW, Australia, ACM, 2018. @inproceedings{daher2018physical,

title = {Physical-Virtual Agents for Healthcare Simulation},

author = {Salam Daher and Jason Hochreiter and Nahal Norouzi and Laura Gonzalez and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2018/10/IVA2018_StrokeStudy_CameraReady_Editor_20180911_1608.pdf},

year = {2018},

date = {2018-11-04},

booktitle = {Proceedings of IVA 2018, November 5-8, 2018, Sydney, NSW, Australia},

publisher = {ACM},

abstract = {Conventional Intelligent Virtual Agents (IVAs) focus primarily on the visual and auditory channels for both the agent and the interacting human: the agent displays a visual appearance and speech as output, while processing the human’s verbal and non-verbal behavior as input. However, some interactions, particularly those between a patient and healthcare provider, inherently include tactile components. We introduce an Intelligent Physical-Virtual Agent (IPVA) head that occupies an appropriate physical volume; can be touched; and via human-in-the-loop control can change appearance, listen, speak, and react physiologically in response to human behavior. Compared to a traditional IVA, it provides a physical affordance, allowing for more realistic and compelling human-agent interactions. In a user study focusing on neurological assessment of a simulated patient showing stroke symptoms, we compared the IPVA head with a high-fidelity touch-aware mannequin that has a static appearance. Various measures of the human subjects indicated greater attention, affinity for, and presence with the IPVA patient, all factors that can improve healthcare training.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

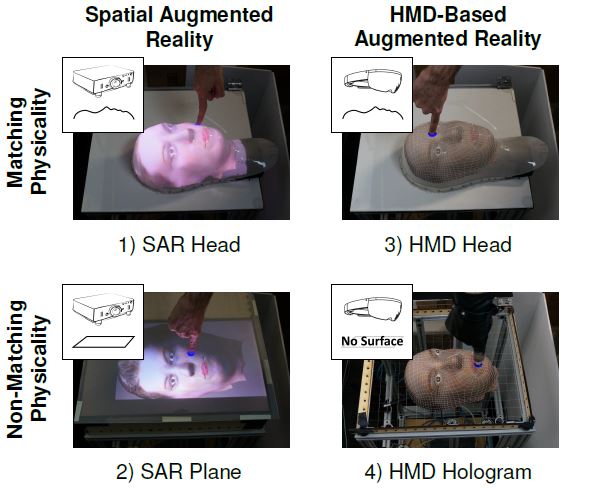

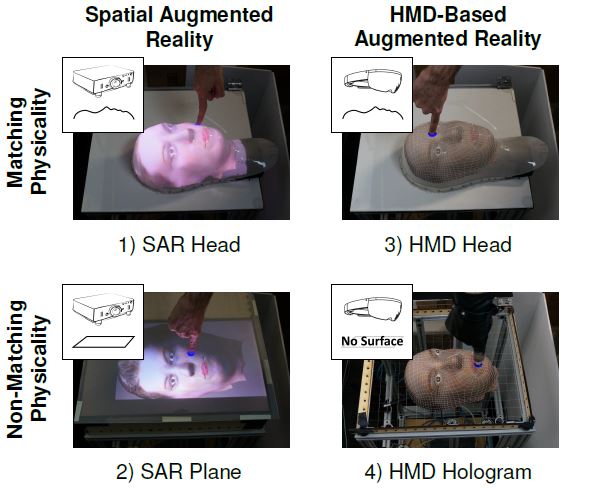

| Jason Hochreiter; Salam Daher; Gerd Bruder; Greg Welch Cognitive and touch performance effects of mismatched 3D physical and visual perceptions Proceedings Article In: IEEE Virtual Reality 2018 (VR 2018), 2018. @inproceedings{Hochreiter2018,

title = {Cognitive and touch performance effects of mismatched 3D physical and visual perceptions},

author = {Jason Hochreiter and Salam Daher and Gerd Bruder and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2018/05/hochreiter2018.pdf},

year = {2018},

date = {2018-03-22},

booktitle = {IEEE Virtual Reality 2018 (VR 2018)},

abstract = {In a controlled human-subject study we investigated the effects of mismatched physical and visual perception on cognitive load and performance in an Augmented Reality (AR) touching task by varying the physical fidelity (matching vs. non-matching physical shape) and visual mechanism (projector-based vs. HMD-based AR) of the representation. Participants touched visual targets on four corresponding physical-visual representations of a human head. We evaluated their performance in terms of touch accuracy, response time, and a cognitive load task requiring target size estimations during a concurrent (secondary) counting task. Results indicated higher performance, lower cognitive load, and increased usability when participants touched a matching physical head-shaped surface and with visuals provided by a projector from underneath.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2017

|

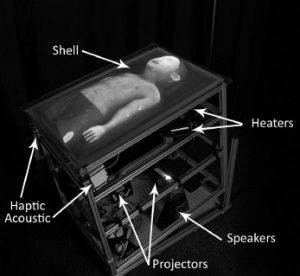

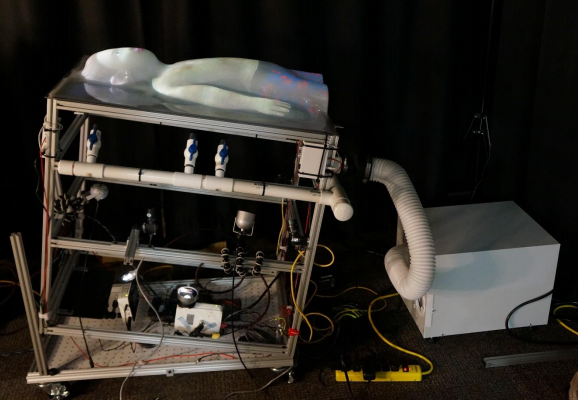

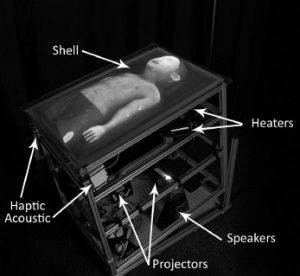

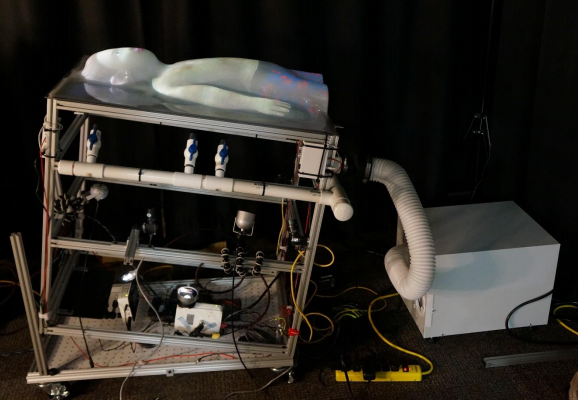

| Salam Daher Physical-Virtual Patient Bed Technical Report 2017, (Daher, Salam. (2017). Physical-Virtual Patient Bed. (Link Foundation Fellowship Final Reports: Modeling, Simulation, and Training Program.) Retrieved from The Scholarship Repository of Florida Institute of Technology website: https://repository.lib.fit.edu/). @techreport{daher2017linkfoundationreport,

title = {Physical-Virtual Patient Bed},

author = {Salam Daher},

url = {https://sreal.ucf.edu/linkfoundation_pvpbreport/},

year = {2017},

date = {2017-08-28},

note = {Daher, Salam. (2017). Physical-Virtual Patient Bed. (Link Foundation Fellowship Final Reports: Modeling, Simulation, and Training Program.) Retrieved from The Scholarship Repository of Florida Institute of Technology website: https://repository.lib.fit.edu/},

keywords = {},

pubstate = {published},

tppubtype = {techreport}

}

|

| Salam Daher; Kangsoo Kim; Myungho Lee; Ryan Schubert; Gerd Bruder; Jeremy Bailenson; Greg Welch Effects of Social Priming on Social Presence with Intelligent Virtual Agents Book Chapter In: Beskow, Jonas; Peters, Christopher; Castellano, Ginevra; O'Sullivan, Carol; Leite, Iolanda; Kopp, Stefan (Ed.): Intelligent Virtual Agents: 17th International Conference, IVA 2017, Stockholm, Sweden, August 27-30, 2017, Proceedings, vol. 10498, pp. 87-100, Springer International Publishing, 2017. @inbook{Daher2017ab,

title = {Effects of Social Priming on Social Presence with Intelligent Virtual Agents},

author = {Salam Daher and Kangsoo Kim and Myungho Lee and Ryan Schubert and Gerd Bruder and Jeremy Bailenson and Greg Welch},

editor = {Jonas Beskow and Christopher Peters and Ginevra Castellano and Carol O'Sullivan and Iolanda Leite and Stefan Kopp},

url = {https://sreal.ucf.edu/wp-content/uploads/2017/12/Daher2017ab.pdf},

doi = {10.1007/978-3-319-67401-8_10},

year = {2017},

date = {2017-08-26},

booktitle = {Intelligent Virtual Agents: 17th International Conference, IVA 2017, Stockholm, Sweden, August 27-30, 2017, Proceedings},

volume = {10498},

pages = {87-100},

publisher = {Springer International Publishing},

keywords = {},

pubstate = {published},

tppubtype = {inbook}

}

|

![[DC] Optical See-Through vs. Spatial Augmented Reality Simulators for Medical Applications](https://sreal.ucf.edu/wp-content/uploads/2018/08/doctoralConsortium.png) | Salam Daher [DC] Optical See-Through vs. Spatial Augmented Reality Simulators for Medical Applications Proceedings Article In: Virtual Reality (VR), 2017 IEEE, pp. 417-418, IEEE 2017. @inproceedings{daher2017optical,

title = {[DC] Optical See-Through vs. Spatial Augmented Reality Simulators for Medical Applications},

author = {Salam Daher},

url = {https://sreal.ucf.edu/dc_ieeevr2017_20170226_0828/},

doi = {10.1109/VR.2017.7892354},

year = {2017},

date = {2017-05-20},

booktitle = {Virtual Reality (VR), 2017 IEEE},

pages = {417-418},

organization = {IEEE},

abstract = {Currently healthcare practitioners use standardized patients, physical mannequins, and virtual patients as surrogates for real patients to provide a safe learning environment for students. Each of these simulators has different limitations that could be mitigated with various degrees of fidelity to represent medical cues. As we are exploring different ways to simulate a human patient and their effects on learning, we would like to compare the dynamic visuals between spatial augmented reality and an optical see-through augmented reality where a patient is rendered using the HoloLens and how that affects depth perception, task completion, and social presence.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

![[POSTER] Can Social Presence be Contagious? Effects of Social Presence Priming on Interaction with Virtual Humans](https://sreal.ucf.edu/wp-content/uploads/2017/02/Daher2017aa.png) | Salam Daher; Kangsoo Kim; Myungho Lee; Gerd Bruder; Ryan Schubert; Jeremy Bailenson; Greg Welch [POSTER] Can Social Presence be Contagious? Effects of Social Presence Priming on Interaction with Virtual Humans Proceedings Article In: 3D User Interfaces (3DUI), 2017 IEEE Symposium on, 2017. @inproceedings{Daher2017aa,

title = {[POSTER] Can Social Presence be Contagious? Effects of Social Presence Priming on Interaction with Virtual Humans},

author = {Salam Daher and Kangsoo Kim and Myungho Lee and Gerd Bruder and Ryan Schubert and Jeremy Bailenson and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2017/02/Daher2017aa_red.pdf},

doi = {10.1109/3DUI.2017.7893341},

year = {2017},

date = {2017-03-18},

booktitle = {3D User Interfaces (3DUI), 2017 IEEE Symposium on},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2016

|

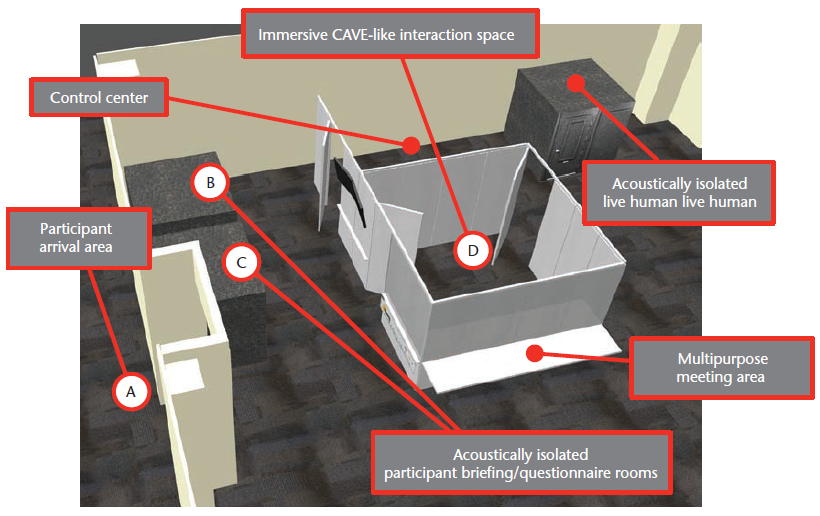

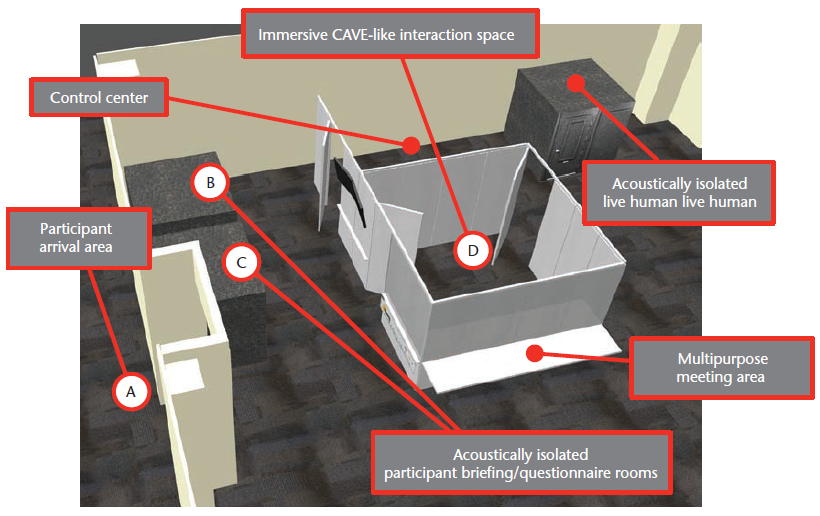

| Ryan Schubert; Greg Welch; Salam Daher; Andrew Raij HuSIS: A Dedicated Space for Studying Human Interactions Journal Article In: IEEE Computer Graphics and Applications, vol. 36, no. 6, 2016. @article{Schubert2016aa,

title = {HuSIS: A Dedicated Space for Studying Human Interactions},

author = {Ryan Schubert and Greg Welch and Salam Daher and Andrew Raij},

url = {https://sreal.ucf.edu/wp-content/uploads/2017/02/Schubert2016aa.pdf},

year = {2016},

date = {2016-11-01},

journal = {IEEE Computer Graphics and Applications},

volume = {36},

number = {6},

publisher = {IEEE Computer Society Press},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

|

![[POSTER] Humanikins: Humanity Transfer to Physical Manikins](https://sreal.ucf.edu/wp-content/uploads/2018/08/humanikin.png) | Salam Daher; Greg Welch [POSTER] Humanikins: Humanity Transfer to Physical Manikins Conference 22nd Medicine Meets Virtual Reality (NextMed / MMVR), Los Angeles, CA, USA, 2016. @conference{Daher2016,

title = {[POSTER] Humanikins: Humanity Transfer to Physical Manikins},

author = {Salam Daher and Greg Welch},

url = {https://sreal.ucf.edu/daher_s/},

year = {2016},

date = {2016-04-07},

booktitle = {22nd Medicine Meets Virtual Reality (NextMed / MMVR)},

address = {Los Angeles, CA, USA},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

![[POSTER] Interactive Rear‐Projection Physical‐Virtual Patient Simulators](https://sreal.ucf.edu/wp-content/uploads/2018/08/mmvr_greg-1024x420.png) | Greg Welch; Salam Daher; Jason Hochreiter; Laura Gonzalez [POSTER] Interactive Rear‐Projection Physical‐Virtual Patient Simulators Conference 22nd Medicine Meets Virtual Reality (NextMed / MMVR), Los Angeles, CA, USA, 2016. @conference{Welch2016ab,

title = {[POSTER] Interactive Rear‐Projection Physical‐Virtual Patient Simulators},

author = {Greg Welch and Salam Daher and Jason Hochreiter and Laura Gonzalez},

url = {https://sreal.ucf.edu/welch_gf-20160121/},

year = {2016},

date = {2016-04-07},

booktitle = {22nd Medicine Meets Virtual Reality (NextMed / MMVR)},

address = {Los Angeles, CA, USA},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|

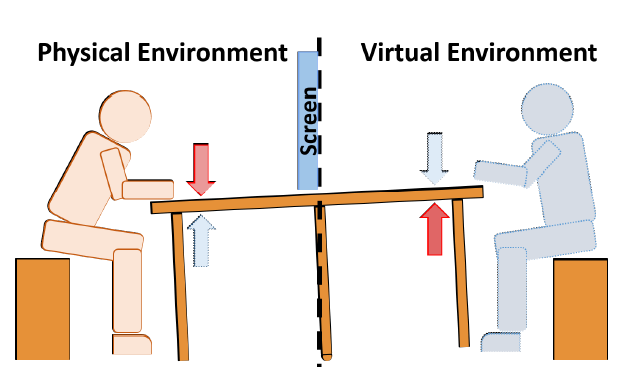

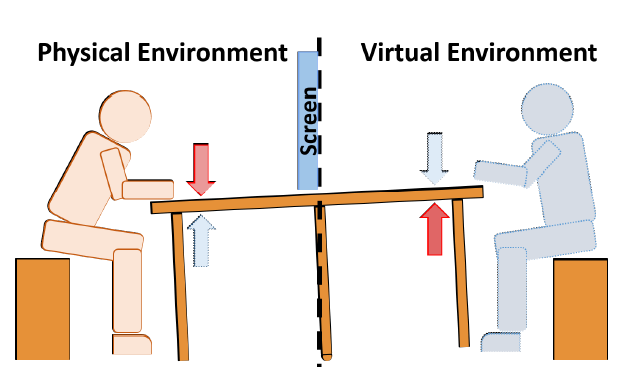

| Myungho Lee; Kangsoo Kim; Salam Daher; Andrew Raij; Ryan Schubert; Jeremy Bailenson; Gregory Welch The wobbly table: Increased social presence via subtle incidental movement of a real-virtual table Proceedings Article In: 2016 IEEE Virtual Reality (VR), pp. 11-17, 2016. @inproceedings{Lee2016,

title = {The wobbly table: Increased social presence via subtle incidental movement of a real-virtual table},

author = {Myungho Lee and Kangsoo Kim and Salam Daher and Andrew Raij and Ryan Schubert and Jeremy Bailenson and Gregory Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2017/02/Lee2016.pdf},

doi = {10.1109/VR.2016.7504683},

year = {2016},

date = {2016-03-01},

booktitle = {2016 IEEE Virtual Reality (VR)},

pages = {11-17},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

|

![[POSTER] Exploring Social Presence Transfer in Real-Virtual Human Interaction](https://sreal.ucf.edu/wp-content/uploads/2017/02/Daher2016aa.png) | Salam Daher; Kangsoo Kim; Myungho Lee; Andrew Raij; Ryan Schubert; Jeremy Bailenson; Greg Welch [POSTER] Exploring Social Presence Transfer in Real-Virtual Human Interaction Conference Proceedings of IEEE Virtual Reality 2016, Greenville, SC, USA, 2016. @conference{Daher2016aa,

title = {[POSTER] Exploring Social Presence Transfer in Real-Virtual Human Interaction},

author = {Salam Daher and Kangsoo Kim and Myungho Lee and Andrew Raij and Ryan Schubert and Jeremy Bailenson and Greg Welch},

url = {https://sreal.ucf.edu/wp-content/uploads/2017/02/Daher2016aa.pdf},

year = {2016},

date = {2016-03-01},

booktitle = {Proceedings of IEEE Virtual Reality 2016},

address = {Greenville, SC, USA},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

|
